Berkeley Lab Scientists Build Software Framework for ATLAS Collaboration

May 17, 2010

By Linda Vu

Contact: cscomms@lbl.gov

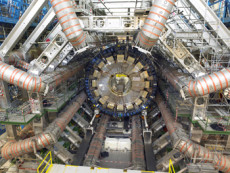

The calorimeter in its final position, surrounded by eight toroids, in the ATLAS cavern. (Credit: the ATLAS Experiment at CERN)

Three thousand researchers in 37 countries are searching for the origins of mass, new dimensions of space and undiscovered forces of physics in the head-on collisions of high-energy protons at the Large Hadron Collider's ATLAS experiment. When ATLAS is turned on, its detectors record about 400 collision events per second from a variety of perspectives, a rate equivalent to filling 27 compact discs per minute.

To sift signs of new physics out of this torrent of data, thousands of researchers must be able to process the information and collaborate on results in real time. To support this distributed workflow, they rely on a software framework called Athena, developed by an international team of scientists led by Paolo Calafiura of the Advanced Computing for Science (ACS) Department in Lawrence Berkeley National Laboratory's (Berkeley Lab) Computational Research Division.

"When developers plug their codes into the Athena framework, they get the most common functionality and communication among the different components of the experiment," says Calafiura, who is also the Chief Software Architect for ATLAS.

He notes that the Athena software is essentially the "plumbing" for the international ATLAS collaboration. It allows scientists to focus on developing tools for analysis and actually analyzing data, instead of worrying about infrastructure issues like the compatibility of files. Researchers simply plug their codes into the framework, and Athena takes care of basic functions like coordinating the execution of applications and applying a common application-programming interface (API) so that collaborators can retrieve the same files.

"If you are a researcher that would like to computationally reconstruct the track left by high-energy muon particles while they traverse six different ATLAS detectors, you can use Athena StoreGate library to access the data coming from each detector and later to post the results of your reconstruction code for others to use," says Calafiura. "Once an object is posted to StoreGate, the library manages it according to preset policies and provides an API so that collaborators can easily share the data."

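The post-and-retrieve pattern Calafiura describes can be illustrated with a minimal Python sketch. This is a toy model only, not the actual StoreGate API: the `EventStore` class, its `record` and `retrieve` methods, the `MuonTrack` type and the "no overwrite" policy are all hypothetical names and behavior chosen for illustration.

```python
from dataclasses import dataclass
from typing import Any, Dict, Tuple, Type, TypeVar

T = TypeVar("T")


@dataclass(frozen=True)
class MuonTrack:
    """Hypothetical reconstruction result; the fields are illustrative only."""
    detector_hits: Tuple[str, ...]
    momentum_gev: float


class EventStore:
    """Toy shared store keyed by (type, name). Not the real StoreGate API."""

    def __init__(self) -> None:
        self._objects: Dict[Tuple[type, str], Any] = {}

    def record(self, key: str, obj: Any) -> None:
        # Assumed policy for this sketch: a posted object cannot be overwritten.
        slot = (type(obj), key)
        if slot in self._objects:
            raise KeyError(f"{key!r} already recorded for {type(obj).__name__}")
        self._objects[slot] = obj

    def retrieve(self, obj_type: Type[T], key: str) -> T:
        # Collaborators look objects up by type and name, not by file format.
        return self._objects[(obj_type, key)]


store = EventStore()

# One developer's reconstruction code posts its result...
store.record("CombinedMuons", MuonTrack(("inner", "calorimeter", "muon"), 42.5))

# ...and another developer's analysis code retrieves it by type and key.
track = store.retrieve(MuonTrack, "CombinedMuons")
print(track.momentum_gev)
```

Because producers and consumers agree only on a type and a key, neither side needs to know how the other stores or formats its data, which is the kind of infrastructure concern the article says Athena takes off researchers' hands.
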
Athena is built on top of the Gaudi software framework that was originally developed for the LHCb experiment, which specifically looks at physics phenomena involving fundamental particles called b-mesons.

"When we began thinking about the software framework for ATLAS, we were very impressed by the Gaudi framework that was developed for LHCb. The architecture was very well designed, and it was simple to use. Rather than reinvent the wheel, we decided to work with what existed and plug in ATLAS-specific enhancements," says Calafiura. He notes that the Gaudi architecture is now a common kernel of software used by many experiments around the world and is co-developed by scientists mainly from ATLAS and LHCb.

Athena and Gaudi were developed in a close collaboration between computer scientists and physicists. Keith Jackson, Charles Leggett, and Wim Lavrijsen of the ACS department worked with Calafiura to develop the Athena framework. The team worked closely with David Quarrie, Mous Tatarkhanov and Yushu Yao of Berkeley Lab's Physics Division.

Berkeley Lab and LHC Computing

Beyond developing software for ATLAS, Berkeley Lab will also contribute computing and network resources to CERN's LHC, the world's largest particle accelerator. On March 30, the LHC achieved record-breaking seven trillion electron volt (7 TeV) proton collisions, opening a new realm of high-energy physics.

The LHC contains six major detector experiments, and experts predict that 15 petabytes of data will flow from the LHC per year for the next 10 to 15 years. That is enough information per year to create a tower of CDs more than twice as high as Mount Everest. Because CERN only has enough computational power to handle about 20 percent of this data, the workload is divided and distributed to hundreds of universities and institutions around the globe via the LHC Computing Grid. The United States contributes 23 percent of the worldwide computing capacity for the ATLAS experiment and more than 30 percent of the computing power for another LHC experiment called CMS.
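
The CD-tower comparison can be checked with a short back-of-the-envelope calculation. The sketch below assumes figures that are not in the article: a 700 MB capacity per disc, bare discs 1.2 mm thick stacked without cases, decimal petabytes, and a height of roughly 8,849 m for Mount Everest.

```python
# Figure from the article: 15 petabytes per year (taken here as decimal PB).
BYTES_PER_YEAR = 15e15

# Assumptions for this sketch, not stated in the article.
CD_CAPACITY_BYTES = 700e6   # common 700 MB disc
CD_THICKNESS_M = 1.2e-3     # bare disc, no case
EVEREST_HEIGHT_M = 8849.0   # approximate summit elevation


def cd_tower(bytes_per_year: float) -> tuple:
    """Return (number of discs, stack height in meters) for one year of data."""
    discs = bytes_per_year / CD_CAPACITY_BYTES
    height_m = discs * CD_THICKNESS_M
    return discs, height_m


discs, height_m = cd_tower(BYTES_PER_YEAR)
ratio = height_m / EVEREST_HEIGHT_M

print(f"{discs:,.0f} discs, {height_m / 1000:.1f} km tall, {ratio:.1f}x Everest")
```

Under these assumptions the stack comes to about 21 million discs and roughly 26 km, close to three times the height of Everest, which is consistent with "more than twice as high."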

At CERN's onsite data center, raw data from all six LHC experiments are initially processed, archived and divided for distribution to 12 Tier-1 sites around the globe. In the US, the Fermi and Brookhaven National Laboratories are Tier-1 facilities that receive large portions of CMS and ATLAS data from CERN for another round of processing and archiving. These data are eventually carried to Tier-2 facilities, which primarily consist of universities and research institutions across the nation, for analysis. Berkeley Lab's National Energy Research Scientific Computing Center (NERSC) is one of eight Tier-2 centers in the western US that will provide computing and storage resources for data analysis in the LHC's ATLAS and ALICE experiments.

As each processing job completes, the sites will push data back up the tier system. In the United States, all data traveling between Tier-1 and Tier-2 DOE sites will be carried by the Energy Sciences Network (ESnet), which is managed by Berkeley Lab's Computational Research Division. ESnet comprises two networks: an IP network that carries daily traffic to support lab operations, general science communication and science with relatively small data requirements, and a circuit-oriented Science Data Network (SDN) that transfers massive data sets like those from the LHC. Using the On-Demand Secure Circuit and Advanced Reservation System (OSCARS), researchers can reserve bandwidth on the SDN to guarantee that data is delivered to their collaborators within a certain time frame.

For more information about Berkeley Lab contributions to LHC computing, please read:

Global Reach: NERSC Helps Manage and Analyze LHC Data

ESnet4 Helps Researchers Seeking the Origins of Matter

About Computing Sciences at Berkeley Lab

High performance computing plays a critical role in scientific discovery. Researchers increasingly rely on advances in computer science, mathematics, computational science, data science, and large-scale computing and networking to increase our understanding of ourselves, our planet, and our universe. Berkeley Lab’s Computing Sciences Area researches, develops, and deploys new foundations, tools, and technologies to meet these needs and to advance research across a broad range of scientific disciplines.
