Earth System Grid Federation: A Modern Day ‘Silk Road’ for Climate Data

ESnet Engineers Clear the Path for an Unprecedented Understanding of Climate and Environmental Science

July 30, 2012

Linda Vu, lvu@lbl.gov, +1 510 495 2402

Centuries ago, civilizations grew and flourished along trade routes like the Silk Road, where diverse populations shared knowledge and goods. Back then, information traveling on these networks by horse or camel took months, often years, to reach its destination.

Today, knowledge travels a lot faster—as packets move at light speed over networks of fiber optic cables that circle the globe. Although the methods of travel have changed, the effects of these connections remain the same: communities thrive by sharing information.

This is especially true of the international climate research community, where approximately 25,000 researchers from 2,700 sites in 19 countries on six continents share data and tools via the Earth System Grid Federation (ESGF). Through this collaboration, scientists are gaining an unprecedented understanding of climate change, extreme weather events and environmental science. In 2007, a Nobel Peace Prize was even awarded for a United Nations climate change report produced with data hosted by an ESGF collaboration.

“In the case of ESGF, most data must traverse four or five network domains before reaching its destination,” says Eli Dart, a network engineer at the Department of Energy’s Energy Sciences Network (ESnet), which is based at the Lawrence Berkeley National Laboratory (Berkeley Lab). “In order for science to be successful, especially data-intensive science, data mobility across networks owned and operated by different organizations (often in different countries) has to be routine and efficient. This means that scientists have to get data where they need it on human time scales—minutes, hours, or days.”

However, when data crosses four or five networks, as climate data does on ESGF, it can be difficult to locate and diagnose the bottlenecks that keep data from reaching its destination in a timely manner, and then get the responsible parties to fix the issues. Fortunately, this is where ESnet engineers like Dart excel.

To ensure that climate information travels reliably from scientist to scientist, making it from “end-to-end” on ESGF, ESnet engineers worked with the climate community to deploy perfSONAR network-monitoring infrastructure. These tools, co-developed by ESnet together with Internet2 and several international collaborators, help network engineers identify performance problems across multiple networks. Once a problem is identified, ESnet engineers work with staff from other institutions to address the root cause and ensure that data can flow at the speed required for science.

“Scientific collaborations like the ESGF are primarily composed of scientific experts such as atmospheric scientists, climatologists and meteorologists; it is their job to do research, and it is unreasonable to expect them to spend their spare time becoming network experts,” says Dart. “It is our job to partner with these collaborations to help develop network strategies and resolve any issues as they arise.”

A Portal for Climate and Earth Sciences

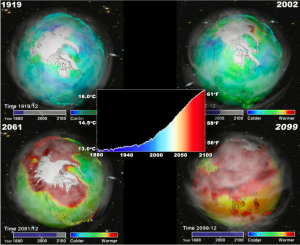

The surface of the Earth is warming. Four maps of the northern hemisphere demonstrate the trend, showing average surface temperature for 1919 (top left), 2002 (top right), 2061 (bottom left) and 2099 (bottom right). The center panel shows the global average as a function of time. The computations were based on observed temperature (pre-2000) and a climate model assuming a continued high rate of greenhouse gas emission. (Image courtesy of Dean Williams, LLNL)

ESGF is a federated system: instead of storing all climate data in one place, each modeling center in the federation archives its own data. Lawrence Livermore National Laboratory (LLNL) sends each site a software stack, which allows the site to publish its data to an online portal. Similar to online shopping, researchers in the federation can query available data for what they need, add it to a “shopping cart,” and then download it when they are ready. Replicas of the most popular data sets are also archived at LLNL for download and backup.

According to LLNL’s Dean Williams, there are currently 14 international research projects using the ESGF infrastructure to globally manage and distribute their data. One of these projects is the Coupled Model Intercomparison Project (CMIP), in which an international collaboration of researchers performs a common set of experiments with all of the major coupled atmosphere-ocean general circulation models. These models let researchers simulate how global climate changes as the amount of carbon dioxide in the atmosphere increases. The results of these simulations will be used in the next United Nations climate change assessment report, which will be released in 2013.

“For the previous assessment report, LLNL mailed multiple terabyte disks to all of the CMIP modeling centers, they transferred their data to the disks, mailed them back to us, and then we uploaded all of this data onto servers at LLNL,” says Williams. “We collected about 35 terabytes of data for this LLNL archive, and then allowed researchers to access it on the ESGF.”

Before the previous report was even published, it was clear that the then-current approach would no longer be sustainable or scalable, as the size of the datasets would outpace the capabilities of traditional portable media. As computing and networking technologies improved, the amount of data generated by the climate community skyrocketed. So with funding from the DOE Offices of Advanced Scientific Computing Research and Biological and Environmental Research, Williams worked with colleagues at multiple national and international institutions, including four DOE National Laboratories, to develop the system used today.

By 2014, Williams predicts that the CMIP project alone will have archived 3.5 petabytes of data across the federation with 2 petabytes replicated at LLNL—100 times more information than they had seven years ago. In anticipation of this and the future deluge of climate data, Williams is currently working with researchers in the Berkeley Lab’s Scientific Data Management Group and ESnet engineers to prepare ESGF for 100 Gbps data transfers.
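For readers who want to check the scale of these figures, here is a rough back-of-the-envelope sketch in Python. It is an illustration only, not anything used by ESGF or ESnet. It assumes decimal units (1 terabyte = 10^12 bytes, 1 petabyte = 10^15 bytes) and a link that is fully used with no protocol overhead, which real transfers will not achieve. The function names are made up for this example.

```python
# Back-of-the-envelope check of the data volumes quoted in the article.
# Assumptions (not from the article): decimal units and a perfectly
# utilized network link with no protocol or storage overhead.

TB = 10**12  # bytes in one terabyte (decimal)
PB = 10**15  # bytes in one petabyte (decimal)

previous_archive = 35 * TB     # collected for the previous assessment report
projected_archive = 3.5 * PB   # predicted CMIP archive across the federation
replicated_at_llnl = 2 * PB    # portion predicted to be replicated at LLNL


def growth_factor(new_bytes, old_bytes):
    """How many times larger the new archive is than the old one."""
    return new_bytes / old_bytes


def ideal_transfer_days(num_bytes, link_gbps):
    """Best-case days needed to move num_bytes over a link of link_gbps."""
    bits = num_bytes * 8
    seconds = bits / (link_gbps * 10**9)
    return seconds / 86400  # seconds per day


if __name__ == "__main__":
    print("Growth factor:", growth_factor(projected_archive, previous_archive))
    days = ideal_transfer_days(replicated_at_llnl, 100)
    print("Ideal days to move 2 PB at 100 Gbps:", round(days, 2))
```

Under these idealized assumptions, the projected archive is 100 times the size of the earlier one, and moving the 2 petabytes of replicas over a 100 Gbps path would take a little under two days at best.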

“Networks are crucial to our work, and ESnet engineers like Eli have been extremely helpful in making sure that the connections between ESGF sites are running optimally,” says Williams. “We will continue to rely heavily on the expertise of ESnet engineers as our community develops a strategy for handling even bigger climate data sets in the future.”

About Computing Sciences at Berkeley Lab

High performance computing plays a critical role in scientific discovery. Researchers increasingly rely on advances in computer science, mathematics, computational science, data science, and large-scale computing and networking to increase our understanding of ourselves, our planet, and our universe. Berkeley Lab’s Computing Sciences Area researches, develops, and deploys new foundations, tools, and technologies to meet these needs and to advance research across a broad range of scientific disciplines.
