VISUALIZING THE FUTURE OF SCIENTIFIC DISCOVERY

Scientists demonstrate tools for analyzing massive datasets

June 11, 2009

By Jon Bashor

Contact: cscomms@lbl.gov

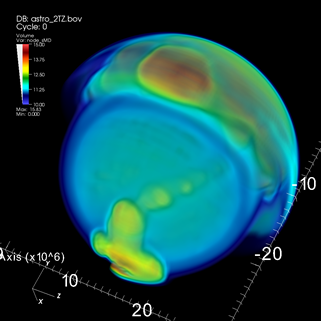

A SUPERNOVA'S VOLUME: This volume rendering of supernova simulation data was generated by running the VisIt application on 32,000 processors on Franklin, a Cray XT4 supercomputer at NERSC.

As computational scientists are confronted with increasingly massive datasets from supercomputing simulations and experiments, one of the biggest challenges is having the right tools to gain scientific insight from the data.

A team of Department of Energy (DOE) researchers recently ran a series of experiments to determine whether VisIt, a leading scientific visualization application, is up to the challenge. Running on some of the world's most powerful supercomputers, VisIt achieved unprecedented levels of performance in these highly parallel environments, tackling datasets far larger than scientists are currently producing.

The team ran VisIt using 8,000 to 32,000 processing cores to tackle datasets ranging from 500 billion to 2 trillion zones, or grid points. The project was a collaboration among leading visualization researchers from Lawrence Berkeley National Laboratory (Berkeley Lab), Lawrence Livermore National Laboratory (LLNL) and Oak Ridge National Laboratory (ORNL).

Specifically, the team verified that VisIt could take advantage of the growing number of cores powering the world's most advanced supercomputers, using them to tackle unprecedentedly large problems. Scientists confronted with massive datasets rely on data analysis and visualization software such as VisIt to “get the science out of the data,” as one researcher said. VisIt, a parallel visualization and analysis tool that won an R&D 100 award in 2005, was developed at LLNL for the National Nuclear Security Administration.

When DOE established the Visualization and Analytics Center for Enabling Technologies (VACET) in 2006, the center joined the VisIt development effort, making further extensions for use on the large, complex datasets emerging from the SciDAC program. VACET is part of DOE's Scientific Discovery through Advanced Computing (SciDAC) program and includes researchers from the University of California at Davis and the University of Utah, as well as Berkeley Lab, LLNL and ORNL.

The VACET team conducted the recent capability experiments in response to its mission to provide production-quality, parallel-capable visual data analysis software. These tests were a significant milestone for DOE's visualization efforts, providing an important new capability for the larger scientific research communities.

“The results show that visualization research and development efforts have produced technology that is today capable of ingesting and processing tomorrow’s datasets,” said Berkeley Lab’s E. Wes Bethel, who is co-leader of VACET. “These results are the largest-ever problem sizes and the largest degree of concurrency ever attempted within the DOE visualization research community.”

Other team members are Mark Howison and Prabhat from Berkeley Lab; Hank Childs, who began working on the project while at LLNL and has now joined Berkeley Lab; and Dave Pugmire and Sean Ahern from ORNL. All are members of VACET.

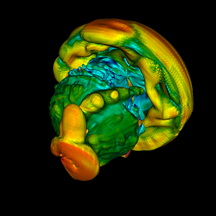

SIMULATING SUPERNOVA: This isosurface rendering of the same data was created by running VisIt on JaguarPF, a Cray XT5 supercomputer at the Oak Ridge Leadership Computing Facility at ORNL.

The VACET team ran the experiments in April and May on several world-class supercomputers:

- Franklin, a 38,128-core Cray XT4 located at the National Energy Research Scientific Computing Center at Berkeley Lab;

- JaguarPF, a 149,504-core Cray XT5 at the Oak Ridge Leadership Computing Facility at ORNL;

- Ranger, a 62,976-core x86_64 Linux system at the Texas Advanced Computing Center at the University of Texas at Austin;

- Purple, a 12,288-core IBM Power5 at LLNL; and

- Juno, an 18,432-core x86_64 Linux system at LLNL.

To run these tests, the VACET team started with data from an astrophysics simulation and then scaled it up to create a sample scientific dataset at the desired dimensions. The team used this approach because the resulting data sizes reflect tomorrow's problem sizes, and because the primary objective of these experiments was to better understand problems and limitations that might be encountered at extreme levels of concurrency and data size.

The test runs created three-dimensional grids ranging from 512 x 512 x 512 “zones” or sample points up to approximately 10,000 x 10,000 x 10,000 samples for 1 trillion zones and approximately 12,500 x 12,500 x 12,500 to achieve 2 trillion grid points.
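The arithmetic behind these grid sizes can be checked with a short sketch. The function names below are illustrative and not part of VisIt, and pairing the largest grid with 32,000 cores is an assumption made only to show the scale of work per core; the article does not state which grid ran at which core count.

```python
def zone_count(nx, ny, nz):
    """Number of zones (sample points) in a regular nx x ny x nz grid."""
    return nx * ny * nz


def zones_per_core(total_zones, cores):
    """Average zones per core, assuming an even split (an idealization)."""
    return total_zones / cores


# Grid dimensions quoted in the article.
grids = {
    "512 x 512 x 512": (512, 512, 512),
    "10,000 x 10,000 x 10,000": (10_000, 10_000, 10_000),
    "12,500 x 12,500 x 12,500": (12_500, 12_500, 12_500),
}

for label, dims in grids.items():
    print(f"{label}: {zone_count(*dims):,} zones")

# Illustrative pairing only: the largest grid spread over 32,000 cores.
largest = zone_count(12_500, 12_500, 12_500)
print(f"about {zones_per_core(largest, 32_000):,.0f} zones per core")
```

The 12,500-cubed grid works out to roughly 1.95 trillion zones, which is why the article describes it as approximately 2 trillion.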

“This level of grid resolution, while uncommon today, is anticipated to be commonplace in the near future,” said Ahern. “A primary objective for our SciDAC Center is to be well prepared to tackle tomorrow's scientific data understanding challenges.”

The experiments ran VisIt in parallel on 8,000 to 32,000 cores, depending on the size of the system. Data was loaded in parallel, with the application performing two common visualization tasks—isosurfacing and volume rendering—and producing an image. From these experiments, the team collected performance data that will help them both to identify potential bottlenecks and to optimize VisIt before the next major version is released for general production use at supercomputing centers later this year.

Another purpose of these runs was to prepare for establishing VisIt's credentials as a “Joule code,” or a code that has demonstrated scalability at a large number of cores. DOE's Office of Advanced Scientific Computing Research (ASCR) is establishing a set of such codes to serve as a metric for tracking code performance and scalability as supercomputers are built with tens and hundreds of thousands of processor cores. VisIt is the first and only visual data analysis code that is part of the ASCR Joule metric.

VisIt is currently running on six of the world's top eight supercomputers, and the software has been downloaded by more than 100,000 users.

Get more information about VisIt and VACET.

Acknowledgments

* DOE's Scientific Discovery through Advanced Computing Program (SciDAC)

* Kathy Yelick, Francesca Verdier, and Howard Walter, National Energy Research Scientific Computing Center (NERSC), Berkeley Lab

* Paul Navratil, Kelly Gaither, and Karl Schulz, Texas Advanced Computing Center, University of Texas, Austin

* James Hack, Doug Kothe, Arthur Bland, and Ricky Kendall, Oak Ridge Leadership Computing Facility, ORNL

About Computing Sciences at Berkeley Lab

High performance computing plays a critical role in scientific discovery. Researchers increasingly rely on advances in computer science, mathematics, computational science, data science, and large-scale computing and networking to increase our understanding of ourselves, our planet, and our universe. Berkeley Lab’s Computing Sciences Area researches, develops, and deploys new foundations, tools, and technologies to meet these needs and to advance research across a broad range of scientific disciplines.
