Adaptive Mesh Refinement Algorithms Create Computational Microscope

January 26, 1998

By Jon Bashor

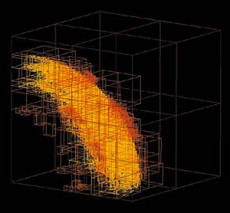

Even for supercomputers, some phenomena are too complex to be modeled. Using a technique called adaptive mesh refinement, researchers break their data down into cells, covering areas of particular interest with a mesh of thousands of segments, which then can be individually analyzed in detail.

NOTE: This archived news story is made available as-is. It may contain references to programs, people, and research activities that are no longer active at Berkeley Lab. It may include links to web pages that no longer exist or refer to documents no longer available.

Computer modeling algorithms that break large problems into small pieces and then focus computing power on the areas of greatest scientific interest are helping scientists better understand such everyday situations as running an internal combustion engine, flying in an airplane or predicting the weather.

The axiom that big problems are much easier to tackle when divided into much smaller tasks is especially true when solving problems in fluid dynamics.

Called adaptive mesh refinement capability, this applied math research by the Lab’s Center for Computational Science and Engineering provides computer modeling tools that let researchers automatically direct their computing resources to the most intriguing parts of a problem. The end result is that researchers get better answers at a lower cost.

The system works by taking a problem and dividing it into smaller parts, much like putting a mesh over the subject in question and then looking at each segment. Areas of interest are treated with a finer mesh to give scientists more detailed information. The smaller the piece, the higher the accuracy. (Imagine wanting to accurately measure the temperature in an auditorium. Taking eight measurements over two hours would be much less reliable than measuring a thousand points every minute.)
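As a rough illustration of the idea, the sketch below flags the cells of a one-dimensional mesh where the data changes sharply and splits only those cells. It is a minimal example under stated assumptions, not the Center's code: the function names `flag_cells` and `refine`, the jump-based test and the threshold of 0.5 are all hypothetical choices made for illustration.

```python
def flag_cells(values, threshold):
    """Return indices of cells whose jump to a neighbor exceeds threshold."""
    flagged = []
    last = len(values) - 1
    for i in range(len(values)):
        left = abs(values[i] - values[i - 1]) if i > 0 else 0.0
        right = abs(values[i + 1] - values[i]) if i < last else 0.0
        if max(left, right) > threshold:
            flagged.append(i)
    return flagged


def refine(edges, flagged, ratio=2):
    """Split each flagged cell into `ratio` equal pieces; return new edges."""
    new_edges = [edges[0]]
    for i in range(len(edges) - 1):
        lo, hi = edges[i], edges[i + 1]
        pieces = ratio if i in flagged else 1
        for k in range(1, pieces + 1):
            new_edges.append(lo + (hi - lo) * k / pieces)
    return new_edges


# Hypothetical data: a sharp front between cells 3 and 4 of an 8-cell mesh.
values = [0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0]
edges = [float(i) for i in range(9)]

flagged = flag_cells(values, threshold=0.5)
fine_edges = refine(edges, flagged)
print(flagged)     # cells on either side of the front
print(fine_edges)  # finer spacing only around the front
```

Only the two cells next to the front are split, so the mesh grows from 8 cells to 10 rather than doubling to 16.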

However, analyzing more points requires more computing capacity. The adaptive mesh refinement capability allows scientists to focus their existing computer power on a narrower part of the overall problem, rather than requiring bigger, more powerful computers.
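The savings can be estimated with simple arithmetic. The sketch below compares the number of cells in a uniformly fine mesh with the number in an adaptive one. Every number in it is an assumption chosen for illustration (a 64-cell-wide coarse grid, two levels of refinement by a factor of 2, and 5 percent of each level's volume flagged for refinement); none comes from the Lab's work, and the count ignores the extra time steps that finer cells usually need.

```python
def uniform_cell_count(n_coarse, ratio, levels, dim=3):
    """Cells needed if the whole domain uses the finest resolution."""
    return (n_coarse * ratio ** levels) ** dim


def adaptive_cell_count(n_coarse, ratio, levels, fraction, dim=3):
    """Cells needed if each level refines only `fraction` of the level below."""
    count = float(n_coarse ** dim)  # cells on the coarsest level
    total = count
    for _ in range(levels):
        # A fraction of the cells is refined; each becomes ratio**dim cells.
        count *= fraction * ratio ** dim
        total += count
    return total


# Hypothetical problem size, for illustration only.
uniform = uniform_cell_count(n_coarse=64, ratio=2, levels=2)
adaptive = adaptive_cell_count(n_coarse=64, ratio=2, levels=2, fraction=0.05)
print(uniform, adaptive, uniform / adaptive)
```

Under these assumed numbers the adaptive mesh needs about 40 times fewer cells than the uniform one while keeping the same resolution where it matters.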

In the microseconds after an explosion, the most interesting scientific features are at the edge of the expanding materials. Adaptive mesh refinement (AMR) capabilities automatically track this area (shown by yellow grids above left and center). A 3D image of the explosion is at the far right.

For example, a typical computer modeling program may provide a “big picture” image of how a diesel engine operates. This turbulent process, a strong interplay of chemistry and fluid dynamics, is not well understood, even by the experts who design the engines. Better computer models will allow them to “see” inside the engine and gain a better understanding. But researchers studying ways to make diesel combustion more efficient and less polluting want to look only at the point where fuel is burning. And because the process occurs very quickly, the burn time must be divided into thousands of time steps to model it accurately.

The computer simulations are then checked by comparing the results with actual experiments. The model can then be tweaked by changing any of various factors, such as temperature, time or chemistry, and then compared again with data from an engine.

Creating workable algorithms to achieve this requires the solving of both mathematical and computer science problems. For example, the computer needs to be programmed to keep track of cells of both fine and coarse data, the interfaces between them and the movement of the cells over time. This requires a whole set of data structures to keep track of the various elements and allocate appropriate portions of the computer architecture to do the job.
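One simple way to picture such data structures is as a stack of levels, each holding rectangular patches of cells. The sketch below is an assumption-laden illustration rather than the Lab's implementation: the `Patch` and `Hierarchy` classes, their fields and the refinement ratio of 2 are all hypothetical, and the bookkeeping for interfaces and for patches that move over time is left out.

```python
from dataclasses import dataclass, field


@dataclass
class Patch:
    level: int
    lo: tuple  # inclusive lower cell index in this level's index space
    hi: tuple  # inclusive upper cell index in this level's index space

    def contains(self, cell):
        return all(l <= c <= h for l, c, h in zip(self.lo, cell, self.hi))


@dataclass
class Hierarchy:
    ratio: int = 2                              # refinement between levels
    levels: list = field(default_factory=list)  # levels[k] is a list of Patch

    def add_patch(self, patch):
        while len(self.levels) <= patch.level:
            self.levels.append([])
        self.levels[patch.level].append(patch)

    def finest_level_at(self, coarse_cell):
        """Return the finest level covering a level-0 cell, or None."""
        finest = None
        for level, patches in enumerate(self.levels):
            scale = self.ratio ** level
            # Index of the lowest-corner cell of coarse_cell on this level.
            cell = tuple(c * scale for c in coarse_cell)
            if any(p.contains(cell) for p in patches):
                finest = level
        return finest


# Hypothetical 8x8 coarse grid with one fine patch over its center.
mesh = Hierarchy()
mesh.add_patch(Patch(level=0, lo=(0, 0), hi=(7, 7)))
mesh.add_patch(Patch(level=1, lo=(4, 4), hi=(11, 11)))

print(mesh.finest_level_at((3, 3)))  # inside the fine patch
print(mesh.finest_level_at((0, 0)))  # covered by the coarse grid only
```

Storing each patch as a pair of index corners keeps the fine data on regular, rectangular grids, which is what makes it practical to hand whole patches to different processors.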

Mathematically, everything has to add up. The law of conservation applies, even to computer modeling. In the case of weather modeling, a cloud in a coarse-grain cell has to correspond with the same cloud as it passes through a fine-grained cell. To make the algorithms work properly, mathematical formulas must be written to maintain the balance between the appropriate cells even as the number of data points changes.
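The bookkeeping can be illustrated in one dimension. In the sketch below, fine-cell values are averaged onto the coarse cells that contain them, and the total amount of material is the same on both meshes. The function names `restrict` and `total` and the sample values are hypothetical; production AMR codes typically also correct the fluxes at boundaries between coarse and fine cells, which is not shown here.

```python
def restrict(fine_values, ratio):
    """Average each group of `ratio` fine cells into one coarse cell."""
    return [
        sum(fine_values[i:i + ratio]) / ratio
        for i in range(0, len(fine_values), ratio)
    ]


def total(values, width):
    """Total amount of material: sum of value times cell width."""
    return sum(v * width for v in values)


# Hypothetical densities on four fine cells, each half as wide as a coarse cell.
ratio = 2
coarse_width = 1.0
fine_width = coarse_width / ratio
fine = [1.0, 3.0, 2.0, 6.0]

coarse = restrict(fine, ratio)
print(coarse)
print(total(fine, fine_width), total(coarse, coarse_width))
```

Because each coarse value is the average of the fine cells it covers, the two totals agree, so nothing is created or lost when the same region is viewed at a different resolution.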

The overall task is made easier by the library of existing algorithms. In many cases, portions of these can be plugged in to help solve new problems. As with the problems they address, this allows the computer scientists to focus their efforts on the most challenging aspects of the work at hand.

Adaptive Mesh Refinement Capability

The Center for Computational Science and Engineering has developed Adaptive Mesh Refinement Capability for improved computer modeling of low Mach-number flows, in which all the motion is at speeds slower than the speed of sound. This capability allows researchers to model flows at lower computational cost (less computer time and memory) while achieving the same accuracy.

This capability, which is being developed with funding from DOE MICS and the DSWA, is being used by Lab scientists to study a broad range of phenomena, from turbulent flow of water through a channel to combustion to atmospheric dispersal.

Lab computer scientists have taken AMR to a new level by writing programs to run on distributed memory supercomputers such as the Cray T3E.

Many everyday situations we take for granted, such as running an internal combustion engine, flying in an airplane or predicting the weather, actually involve very complex problems which scientists are only now beginning to understand, thanks to computer modeling. At Berkeley Lab, computer scientists and mathematicians are developing specialized algorithms for computer modeling of fluid dynamics problems.

CCSE tackles a problem by first looking at what’s known. In the case of a diesel engine, this would include fuel sprays from injectors, the heating and vaporization of the fuel mixture, ignition and burning, and generation of soot. Then, basic algorithms for studying fluid dynamics are hooked up to models of those processes and the shortcomings are identified.

The algorithms work by applying a computer grid, or mesh, to the problem and breaking the overall process into smaller components. This mesh then automatically adapts to the problem being studied and determines the areas of most interest to the scientist. By focusing primarily on a specific area, more computing power can be brought to bear on the problem and more accurate solutions devised.

The end result is that problems are solved more quickly and efficiently.

Although there are different ways of approaching the problem, the Berkeley group uses a technique called structured hierarchical adaptivity. Because supercomputers work well on highly structured grids, this approach is a natural fit for massively parallel computers.

About Computing Sciences at Berkeley Lab

High performance computing plays a critical role in scientific discovery. Researchers increasingly rely on advances in computer science, mathematics, computational science, data science, and large-scale computing and networking to increase our understanding of ourselves, our planet, and our universe. Berkeley Lab’s Computing Sciences Area researches, develops, and deploys new foundations, tools, and technologies to meet these needs and to advance research across a broad range of scientific disciplines.
