The National Energy Research Scientific Computing Center (NERSC) and the Energy Sciences Network (ESnet), both located at Lawrence Berkeley National Laboratory (Berkeley Lab), are playing a critical role in supporting the LUX-ZEPLIN (LZ) experiment in its search for dark matter in the universe.

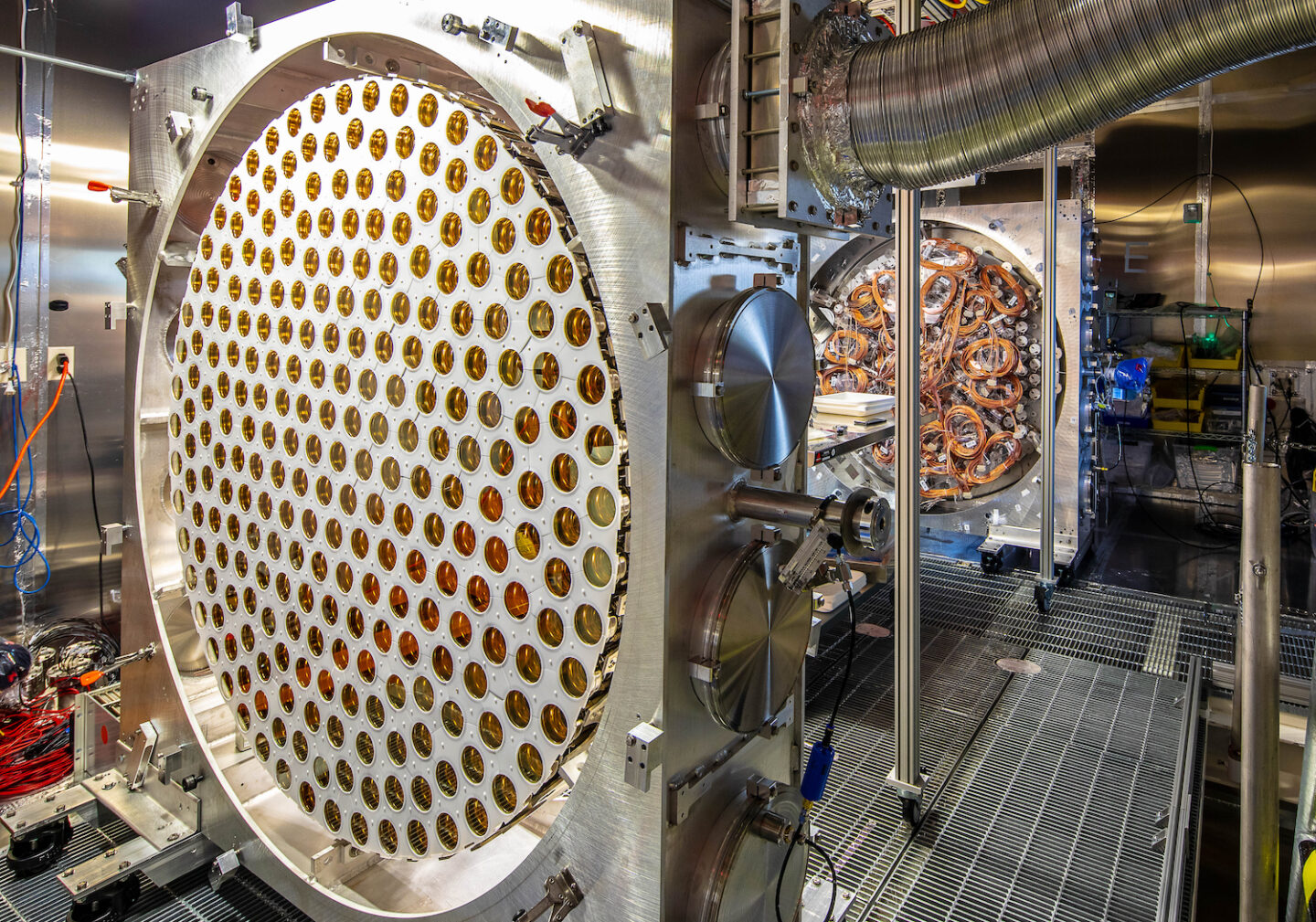

Located deep in the Black Hills of South Dakota in the Sanford Underground Research Facility (SURF), the uniquely sensitive LZ dark matter detector recently passed a check-out phase of startup operations and delivered first results. In a paper posted July 7 on the experiment’s website, LZ researchers reported that, with the initial run, LZ is now the world’s most sensitive dark matter detector.

Dark matter is unseen because it does not emit, absorb, or scatter light, yet its presence and gravitational pull are fundamental to our understanding of the universe. LZ is designed to detect dark matter in the form of weakly interacting massive particles (WIMPs). The experiment is led by Berkeley Lab and has 34 participating institutions and more than 250 collaborators. (For more details about the experiment and first results, see this news release.)

Data collection from the LZ detector began in 2021, and the data pipeline runs 24/7. As LZ’s U.S. data center, NERSC supports data movement, processing, and storage for the experiment, which amounts to about 1 PB of data annually. The data comes to NERSC from SURF via ESnet, the Department of Energy’s high-performance network dedicated to science; the ESnet6 network connects NERSC to labs, experiments, and facilities globally. LZ also uses NERSC for simulation production and has built custom workflow tools and science gateways that help collaborators organize the experiment and analyze and visualize notable detector events.

“We have had amazing scientists and software developers throughout the collaboration who tirelessly supported data movement, data processing, and simulations, allowing for a flawless commissioning of the detector,” said Maria Elena Monzani, lead scientist at SLAC and deputy operations manager for software and computing on the LZ experiment.

Multiple Stages of Computing

The daily data workflow involves multiple moving parts. Each batch of data collected from the detector is sent up to the SURF surface facility, then on to NERSC where it is processed. The raw and derived data products are archived on NERSC’s HPSS system and also sent to a data center in the United Kingdom for redundancy.

There are multiple stages to the computing aspect of the experiment, Monzani explained.

“First we get the data from the detector, then we do reconstruction of the event, which means we construct physics quantities from the electronic signals,” she said. “We transform whatever we get from the detector into energy, position, charge, and light.”

The research team also calibrates the detector, including with neutron and electron sources. “This data we process in the same way to give us information on how the detector is operating,” Monzani said. “Then we can reprocess our research dataset multiple times to take into account what we learn from the calibrations.”

Adventures in Data Processing

Berkeley Lab physicists and computing scientists have been instrumental in software and infrastructure support for the data processing aspect of LZ. For example, Quentin Riffard, a scientist in Berkeley Lab’s Physics Division who is a member of the LZ research team, has spent the last three years creating and applying data processing tools for the experiment.

“LZ produces about 1PB of data/year, which is a lot,” he said. “And in this petabyte, we have a lot of background elements that we need to remove for our analysis. But we might also have a few signal events in there. So our task with the data processing pipeline is to take all the events that we have, extract quantities that we can use, and then apply some cuts to select only signal events and remove the background events.”
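As a rough illustration of what “applying cuts” means, the sketch below filters a handful of made-up events through a sequence of named selection criteria and records how many survive each step. It is not LZ’s analysis code: the `Event` fields, the cut names, and every threshold are hypothetical placeholders chosen only to show the pattern.

```python
from dataclasses import dataclass


@dataclass
class Event:
    """Hypothetical reconstructed event; field names are illustrative only."""
    energy_kev: float        # reconstructed energy
    radius_cm: float         # radial position inside the detector
    is_single_scatter: bool  # True if the event scattered only once


# Each cut is a named predicate. All thresholds are made-up placeholders.
CUTS = {
    "energy_window": lambda e: 1.0 <= e.energy_kev <= 50.0,
    "fiducial_radius": lambda e: e.radius_cm < 60.0,
    "single_scatter": lambda e: e.is_single_scatter,
}


def apply_cuts(events, cuts=CUTS):
    """Return the events passing every cut, plus the survivor count after each cut."""
    surviving = list(events)
    cutflow = {"all": len(surviving)}
    for name, passes in cuts.items():
        surviving = [e for e in surviving if passes(e)]
        cutflow[name] = len(surviving)
    return surviving, cutflow


events = [
    Event(5.0, 20.0, True),    # passes every cut
    Event(500.0, 20.0, True),  # fails the energy window
    Event(5.0, 70.0, True),    # fails the fiducial radius
    Event(5.0, 20.0, False),   # fails the single-scatter requirement
]
selected, cutflow = apply_cuts(events)
print(cutflow)
```

Keeping a running “cut flow” like this is a common way to see how much of a dataset each criterion removes, which matters when nearly all recorded events are background.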

The LZ team is also an avid user of Spin, a container-based platform where users can build and deploy edge services on a secure, managed infrastructure at NERSC. “Spin is a very important part of our automated system management. All the LZ databases are hosted on Spin, and Spin is now supporting 100% of the LZ data processing pipeline. It’s been a real game changer for us,” Riffard said.

The LZ experiment is using millions of NERSC hours on Cori and, more recently, Perlmutter, he added. “These days if you really want to do precision physics with a great level of detail, you need a lot of CPUs, and you are not going to get that on your laptop. And not just for data processing but for the Monte Carlo simulations, which is another crucial aspect of what we’re doing.”

Connecting Facilities, Automating Workflow

NERSC and LZ also have a close collaboration through the Superfacility Project, which connects experiments and instruments to computing over the high-speed ESnet network, noted Deborah Bard, group lead for Data Science Engagement at NERSC and Superfacility Project liaison for the LZ experiment. As a member of the Superfacility Project’s science engagement roster, LZ has been involved in testing and adopting a number of innovative data management and movement tools, including real-time computing support, automated data movement, and container-based edge services.

These new capabilities are important because, while LZ has a relatively small data volume on a daily basis, it accumulates a large amount of data over time, and all of this data needs to be moved, stored, archived, and coordinated across multiple sites. In addition, LZ has to get the data to NERSC seven days a week and be confident that the NERSC resources it needs will be consistently available.
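To put those volumes in perspective, the back-of-the-envelope calculation below converts the roughly 1 PB per year cited for LZ into average daily and per-second rates. It assumes decimal (SI) units and a perfectly even flow over a 365-day year, which is a simplification: actual rates vary, for instance during calibration runs.

```python
PB = 10**15                      # decimal petabyte in bytes (assumption: SI units)
SECONDS_PER_DAY = 24 * 60 * 60
DAYS_PER_YEAR = 365              # assumption: non-leap year, evenly spread data


def average_rates(bytes_per_year):
    """Convert a yearly data volume into average daily and per-second rates."""
    bytes_per_day = bytes_per_year / DAYS_PER_YEAR
    bytes_per_second = bytes_per_day / SECONDS_PER_DAY
    return {
        "TB_per_day": bytes_per_day / 10**12,
        "MB_per_second": bytes_per_second / 10**6,
        "Mbit_per_second": bytes_per_second * 8 / 10**6,
    }


rates = average_rates(1 * PB)
print({name: round(value, 1) for name, value in rates.items()})
# → {'TB_per_day': 2.7, 'MB_per_second': 31.7, 'Mbit_per_second': 253.7}
```

On these assumptions, 1 PB per year averages out to under 3 TB per day, a modest sustained rate for a research network, which is why the challenge lies less in raw bandwidth than in keeping the flow reliable every day.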

“It is absolutely critical that the data pipelines from observation to computing operate reliably and perform well 24/7,” said Eli Dart, group lead for Science Engagement at ESnet. “The scientists need to be able to do science, not worry about infrastructure.”

“The LZ team manages its own data, but we focus on making it easier for them to do so,” Bard added, noting that this kind of collaborative model is becoming increasingly important for science teams.

For example, NERSC and LZ identified resiliency as an area that could be improved for both organizations, Bard noted – and their efforts have already yielded rewards.

“We’ve been working with LZ to help them develop resilient workflows and better tolerate when NERSC becomes unavailable due to a power outage or some other reason,” she said. “In turn, they’ve been a really strong driver and a motivating use case for us to make ourselves more resilient and make sure that our resources are available when needed. So they are using us to get their science done, but we are using them to improve our own infrastructure and policies and services.”
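The article does not describe how LZ’s workflows tolerate outages. One widely used pattern for this kind of resilience is to retry a failed step with exponentially increasing waits. The sketch below shows that general technique only; `FacilityUnavailable`, `run_with_backoff`, and `flaky_transfer` are hypothetical names invented for illustration.

```python
import time


class FacilityUnavailable(Exception):
    """Hypothetical error raised when the remote site cannot be reached."""


def run_with_backoff(task, max_attempts=5, base_delay=1.0, max_delay=60.0,
                     sleep=time.sleep):
    """Call task(); on FacilityUnavailable, wait and retry with doubling delays."""
    delay = base_delay
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except FacilityUnavailable:
            if attempt == max_attempts:
                raise                         # out of attempts: surface the error
            sleep(delay)
            delay = min(delay * 2, max_delay)  # double the wait, up to a cap


# Demonstration with a stand-in transfer that fails twice, then succeeds.
calls = {"n": 0}


def flaky_transfer():
    calls["n"] += 1
    if calls["n"] < 3:
        raise FacilityUnavailable("site offline")
    return "transferred"


waits = []  # record requested waits instead of actually sleeping
result = run_with_backoff(flaky_transfer, sleep=waits.append)
print(result, waits)
```

In a real pipeline the waits would be much longer and the unfinished work would typically be queued on disk so that it survives a restart; the injected `sleep` argument exists here only so the example runs instantly.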

Working with LZ has also helped NERSC build in more flexibility. “When they are taking data it’s a steady stream, but when they do calibrations that’s a lot more data. So we have to be able to give them more capability when they need it,” Bard said, adding that the partnership has also benefited from LZ’s enthusiasm for Spin.

“LZ needs to be able to monitor their data quality and data movement 24/7, so they make heavy use of Spin and have been involved in developing monitoring frameworks and having web portals for their collaboration to monitor their pipelines and data quality,” Bard said. “At the same time, LZ has been very useful for driving requirements for Spin.”

In the long run, she added, “that is the whole point of having these close science engagements: It is mutually beneficial.”

NERSC and ESnet are DOE Office of Science user facilities.
