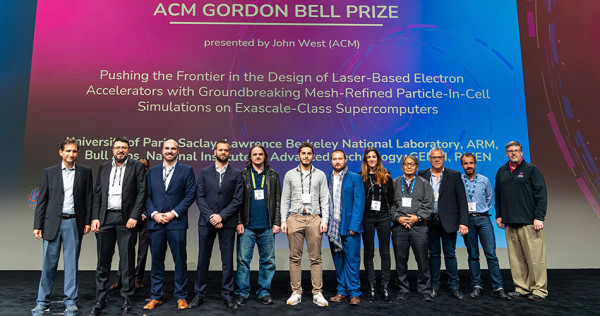

A Lawrence Berkeley National Laboratory (Berkeley Lab) research team and their international collaborators were honored with the ACM Gordon Bell Prize on November 17 during SC22 for their work in advancing the design of particle accelerators, described in their paper, “Pushing the Frontier in the Design of Laser-Based Electron Accelerators with Groundbreaking Mesh-Refined Particle-In-Cell Simulations on Exascale-Class Supercomputers.”

This work was one of two projects featuring Berkeley Lab scientists that were selected as finalists this year for the prestigious Gordon Bell Prize, which is given out annually by the Association for Computing Machinery. The award, which comes with $10,000 in prize money, recognizes outstanding achievements in high-performance computing applied to challenges in science, engineering, and large-scale data analytics.

Learn more about both of these ground-breaking projects below.

Pushing the boundaries of laser-based electron accelerators

A team led by Jean-Luc Vay, a senior scientist and the head of Berkeley Lab’s Accelerator Modeling Program in the Accelerator Technology and Applied Physics Division, collaborated with the team of Henri Vincenti, physicist and research director at CEA (the French Alternative Energies and Atomic Energy Commission) and other key organizations on a project that could help revolutionize radiotherapy treatments and, eventually, lead to major improvements for the next generation of particle accelerators devoted to high-energy physics.

Their research presents a first-of-its-kind, mesh-refined, massively parallel particle-in-cell code for kinetic plasma simulations, optimized on a quartet of supercomputers: Frontier, Fugaku, Summit, and Berkeley Lab’s Perlmutter.

“We’re really pushing here – in three directions,” Vay said.

On the physics side, the collaborators are looking to improve the state-of-the-art in particle accelerators while at the same time operating on the cutting edge of algorithms and developing a versatile code that is compatible with different types of supercomputers.

The simulation code – called WarpX – “enabled 3D simulations of laser-matter interactions on Frontier, Fugaku, and Summit, which have so far been out of the reach of standard codes,” the researchers write in the paper’s abstract. “These simulations helped remove a major limitation of compact laser-based electron accelerators, which are promising candidates for next generation high-energy physics experiments, ultra-high dose rate FLASH radiotherapy, and other applications.”

In FLASH radiotherapy, a therapeutic dose is delivered in a very short time (a few seconds) at a much higher dose rate than in conventional treatment protocols. After decades of anecdotal reports on the FLASH radiotherapy effect, more recent experiments have demonstrated a strong difference in the sensitivity of healthy and unhealthy tissues to ionizing radiation when it is delivered in short, bright pulses.

However, the mechanisms behind the benefits of ultra-high dose rate radiotherapy have not yet been elucidated. Understanding them requires deeper insight into the basis of radiation toxicity in biological samples at disparate timescales, ranging from femtoseconds (molecule excitation) to hours (cellular response) and beyond.

Toward this end, the teams led by Vay and Vincenti used complex WarpX simulations to model a novel conceptual design of a laser-based electron injector and accelerator. The design can produce highly charged, very short, high-quality electron beams that have the potential to deposit a large enough therapeutic dose at ultra-high peak dose rates in tumor cells without damaging other cells.

By using plasma mirrors, the team’s accelerators can deliver a much larger dose of energy in a much shorter time than current conventional methods. Moreover, in order to penetrate deeper into the human body and treat tumors, very large and costly accelerating structures are currently necessary.

“With laser plasma accelerators,” Vincenti said, “we can shrink the distance and enable very high-energy electrons to … democratize, let’s say, FLASH radiotherapy in medical centers at a cheaper cost and at a smaller scale.”

Other potential applications include the building of miniature x-ray free electron lasers for the science of ultrafast phenomena, as well as the development of particle colliders that could be more compact and – the hope is – cheaper than the current generation. To be sure, putting the latter into practice may still take decades.

“This is, I would say, really at the research level of maturity, and computer simulations are key to establishing that it can be done,” Vay said. “It’s very promising.”

Modeling the design presented a challenge. The main obstacle in modeling the plasma mirror design stemmed from the huge range of spatial and temporal scales involved, the scientists noted. To help overcome that hurdle, the team used WarpX, a code developed through their project within DOE’s Exascale Computing Project (ECP), Vay said. WarpX combines the adaptive mesh refinement (AMR) framework AMReX, developed at Berkeley Lab, with novel computational techniques. AMR is akin to a computational microscope, allowing researchers to zoom in on specific regions of space. Berkeley Lab’s Andrew Myers and Weiqun Zhang, both key members of the AMReX development team, are also co-authors on the WarpX paper.
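To make the “computational microscope” idea concrete, here is a minimal one-dimensional sketch of block-structured mesh refinement in Python. It is an illustration only and does not use the AMReX or WarpX APIs: the function names (`flag_cells`, `cluster_patches`, `refine_patch`), the jump-based refinement criterion, and the refinement ratio of 2 are all assumptions chosen for the example. Coarse cells are flagged where the field changes sharply, contiguous flagged cells are grouped into patches, and each patch is covered by finer cells.

```python
import math


def flag_cells(values, tol):
    """Flag both cells of every neighboring pair whose values differ by more than tol."""
    flags = [False] * len(values)
    for i in range(len(values) - 1):
        if abs(values[i + 1] - values[i]) > tol:
            flags[i] = flags[i + 1] = True
    return flags


def cluster_patches(flags):
    """Group contiguous flagged cells into half-open index ranges (first, last)."""
    patches, start = [], None
    for i, flagged in enumerate(flags):
        if flagged and start is None:
            start = i
        elif not flagged and start is not None:
            patches.append((start, i))
            start = None
    if start is not None:
        patches.append((start, len(flags)))
    return patches


def refine_patch(patch, dx, x0=0.0, ratio=2):
    """Return the centers of the fine cells that cover one coarse patch."""
    first, last = patch
    fine_dx = dx / ratio
    n_fine = (last - first) * ratio
    return [x0 + first * dx + (j + 0.5) * fine_dx for j in range(n_fine)]


if __name__ == "__main__":
    # Hypothetical test field: a sharp front at x = 0.5 on a 16-cell coarse grid.
    n = 16
    dx = 1.0 / n
    values = [math.tanh(((i + 0.5) * dx - 0.5) / 0.02) for i in range(n)]
    for patch in cluster_patches(flag_cells(values, tol=0.5)):
        print(patch, refine_patch(patch, dx))
```

Only the region around the sharp front receives fine cells, so resolution is spent where the field varies quickly rather than across the whole domain. Production frameworks extend this idea to three dimensions, multiple nested levels, and distribution across many compute nodes.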

“AMReX is a numerical library that helps us implement some physical block-structured mesh refinement algorithms,” said Axel Huebl, a computational physicist at Berkeley Lab who is now a lead developer of WarpX and co-lead author of the Gordon Bell paper.


Though work on this paper began in the spring of last year, its foundation was laid through the group’s affiliation with the ECP. “It’s really years of hard work from many people,” Vay said, adding that the team recently also had to pull long shifts to make efficient use of the various supercomputers when they gained access for brief windows of time.

Thanks to the ongoing collaboration between CEA and RIKEN, the researchers had full access to Fugaku, located in Japan, for 12-hour spans. “We had to stay connected on the machine to follow our simulations,” said lead author Luca Fedeli, a plasma physicist, WarpX developer, and currently a postdoc at CEA. “So we had like 12-hour Zoom calls at weird times.”

Co-authors on this Gordon Bell paper include France Boillod-Cerneux, deputy at the CEA’s Fundamental Research Department regarding numerical simulations; Thomas Clark, a Ph.D. student at CEA; Kevin Gott, a NERSC staff member working in the User Engagement Group; Conrad Hillairet, with ARM; Stephan Jaure, with Atos; Adrien Leblanc, with ENSTA Paris; Rémi Lehe, a researcher at Berkeley Lab; Myers, a member of the Center for Computational Sciences and Engineering at Berkeley Lab; Christelle Piechurski, the Chief HPC project officer for GENCI; Mitsuhisa Sato, deputy director of RIKEN Center for Computational Science; Neil Zaïm, a postdoc at CEA; and Zhang, a senior computer systems engineer at Berkeley Lab.

Large-scale searches within hours

In a paper titled “Extreme-scale many-against-many protein similarity search,” Oguz Selvitopi, a research scientist with the Applied Math and Computing Research Division in Berkeley Lab’s Computing Sciences Area, and his colleagues described how they “unleash the power of over 20,000 GPUs” on the Summit supercomputer at the Oak Ridge Leadership Computing Facility to perform an “all-vs.-all protein similarity search on one of the largest publicly available datasets with 405 million proteins.”

“Similarity search is one of the most fundamental computations that are regularly performed on ever-increasing protein datasets,” the researchers write in the paper’s abstract. “Scalability is of paramount importance for uncovering novel phenomena that occur at very large scales.”

Comparative genomics is among the fields that make regular use of such searches. It is the study of the evolutionary and biological relationships between different organisms, based on similarities among their genome sequences.

“A common task, for example, is to find out the functional or taxonomic contents of the samples collected from an environment often by querying the collected sequences against an established reference database,” the researchers write.

As more genomes are sequenced, the ability to build fast computational infrastructures for comparative genomics becomes ever more crucial.

“As your dataset gets bigger, the problem becomes more and more difficult,” said Selvitopi, the lead author on the project.

The challenge of such searches is twofold: the computational intensity and the high memory footprint.

Selvitopi and his colleagues overcame the memory limitation with innovative matrix-based blocking techniques that do not introduce additional load imbalance, a problem that would limit the ability to scale data processing tasks across the system’s compute nodes. “What we do is perform the search incrementally,” he explained. Essentially, the scientists form the similarity matrix “block by block,” thereby reducing the memory footprint “drastically,” Selvitopi said.
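The “block by block” idea can be sketched in a few lines of Python. This toy example is not the team’s software: the similarity measure (Jaccard overlap of 3-letter substrings), the function names, and the short sequences are assumptions made purely for illustration. What it shows is the memory pattern: only one small tile of the all-vs.-all similarity matrix exists at any moment, and only the pairs that pass a threshold are kept.

```python
from itertools import product


def kmers(seq, k=3):
    """Return the set of length-k substrings of a sequence."""
    return {seq[i:i + k] for i in range(len(seq) - k + 1)}


def jaccard(a, b):
    """Jaccard similarity of two sets (0.0 when both are empty)."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def blocked_similarity_search(seqs, block_size=2, threshold=0.5, k=3):
    """Yield (i, j, score) for every pair i < j whose score meets the threshold.

    The full n x n similarity matrix is never stored; each tile of at most
    block_size x block_size entries is computed, filtered, and discarded.
    """
    profiles = [kmers(s, k) for s in seqs]
    n = len(seqs)
    for row_start in range(0, n, block_size):
        # Start columns at the current row block: the matrix is symmetric.
        for col_start in range(row_start, n, block_size):
            rows = range(row_start, min(row_start + block_size, n))
            cols = range(col_start, min(col_start + block_size, n))
            tile = {(i, j): jaccard(profiles[i], profiles[j])
                    for i, j in product(rows, cols) if i < j}
            for (i, j), score in tile.items():
                if score >= threshold:
                    yield i, j, score


if __name__ == "__main__":
    # Hypothetical sequences, used only to exercise the sketch.
    proteins = ["MKTAYIAK", "MKTAYIAR", "GGGGGGGG", "MKTAYIAK"]
    for hit in blocked_similarity_search(proteins):
        print(hit)
```

The block size trades peak memory against the number of tiles to process, and the set of hits does not depend on it. At scale, tiles would also need to be distributed evenly across nodes, which is the load-balance concern described above.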

Thanks to the high performance computing software Selvitopi and his colleagues developed, researchers could soon complete previously labor-intensive searches in a fraction of the time. Their novel open-source software cuts the time-to-solution for many use cases from weeks to a couple of hours.

Until now, researchers in this field would have had to resign themselves to using low-quality methods to perform the search because they didn’t have the time or computational power, Selvitopi said. Those researchers can now conduct those searches at “any scale they want” and finish them “within hours or even minutes,” he said.

One of the most challenging parts for Selvitopi and his team was the scale. He had never tested anything at this scale before and had to be extra careful, he said, because at that magnitude “when something goes wrong” it is very “difficult to find the reason.”

As a longtime self-proclaimed HPC guy, dating back to the start of his Ph.D. studies, Selvitopi is excited about making the shortlist for the sought-after Gordon Bell Prize. He found out about the honor during archery practice, and suddenly it became a lot harder to hit the target.

“I was shooting really well and then I saw that news,” Selvitopi said with a laugh.

Co-authors on this research paper include: Saliya Ekanayake, a senior software engineer at Microsoft and previously a postdoctoral fellow in the Performance and Algorithms Research group at Berkeley Lab; Giulia Guidi, a project scientist in the Applied Math and Computing Research division at Berkeley Lab; Muaaz Awan, an application performance specialist at the National Energy Research Scientific Computing Center (NERSC); Georgios Pavlopoulos, director of bioinformatics at the Institute of Fundamental Biological Research of the Biomedical Sciences Research Center; Ariful Azad, director of graduate studies for the Department of Intelligent Systems Engineering at Indiana University; Nikos Kyrpides, a computational biologist and senior scientist at the Department of Energy Joint Genome Institute; Leonid Oliker, a senior scientist and group lead of the Performance and Algorithms Research Group at Berkeley Lab; Katherine Yelick, a senior faculty scientist at Berkeley Lab who leads the ExaBiome project on Exascale Solutions for Microbiome Analysis, part of DOE’s Exascale Computing Project (ECP); and Aydin Buluç, a senior scientist in the Applied Math and Computing Research Division at Berkeley Lab.

About Computing Sciences at Berkeley Lab

High performance computing plays a critical role in scientific discovery. Researchers increasingly rely on advances in computer science, mathematics, computational science, data science, and large-scale computing and networking to increase our understanding of ourselves, our planet, and our universe. Berkeley Lab’s Computing Sciences Area researches, develops, and deploys new foundations, tools, and technologies to meet these needs and to advance research across a broad range of scientific disciplines.
