A team of researchers from Lawrence Berkeley National Laboratory (Berkeley Lab), in collaboration with several experimental and computational neuroscientists, recently published a paper in the journal eLife describing their novel software package, Neurodata Without Borders (NWB). The team created the NWB data language to enable accurate communication about the enormous diversity of neurophysiology data across the community. NWB's robust, extensible, and sustainable software architecture is built on the team's own Hierarchical Data Modeling Framework.

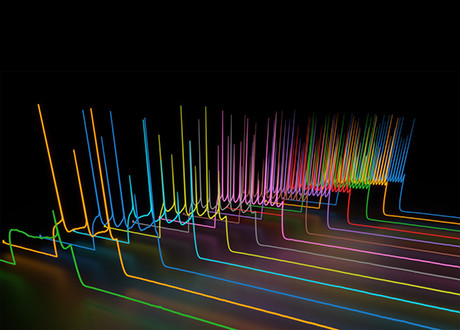

Understanding how the brain works and gives rise to thoughts, memories, perception, and consciousness remains one of the greatest challenges in science. To tackle this challenge, neurophysiologists run experiments that measure neuronal activity from different parts of the brain and relate that activity to sensation and behavior. These experiments generate large, complex, and diverse datasets at terabyte scale. The size and complexity of these datasets are expected to grow significantly with recent global investments in neuroscience research, such as the BRAIN Initiative and the EU Human Brain Project. As the deluge of scientific data continues, standardization and the development of common tools for analysis and modeling become paramount, especially for large collaborative projects that, like the Human Genome Project, pool data in a common format into massive databases.

Neurophysiology experiments are conducted using diverse species and a myriad of instruments and customized workflows. Yet there has been no widely adopted standard or format that could accommodate all the metadata needed to aggregate and meaningfully analyze the data. This is a serious impediment to understanding the brain: the lack of a common data language makes data discovery and analysis unnecessarily time-consuming and laborious. It has also inhibited collaboration and made replicating specific experiments almost impossible, significantly slowing overall progress in the field. Solving these problems, and ultimately understanding the brain, is much easier when the resulting data are findable, accessible, interoperable, and reusable (FAIR).

To address this, the Berkeley Lab team developed NWB, a broad initiative to standardize neurophysiology data. NWB serves as a standardized language for neurophysiology data and data descriptors (i.e., a data language) that enables neuroscientists to describe their experiments, communicate about them effectively, and share the data. “NWB is used for all neurophysiology data modalities collected from species ranging from flies to humans during diverse tasks,” said Kristofer Bouchard, co-principal investigator for the project, leader of the Computational Biosciences Group in the Scientific Data Division, and a staff scientist in the Biological Systems and Engineering Division. “NWB enables the DANDI (Distributed Archives for Neurophysiology Data Integration) data repository to support collaborative data sharing and analysis.”

A unique feature of NWB is its support for community-created extensions to the standard. “We developed the NWB Extensions mechanism to permit NWB to co-evolve with the needs of the community as new experimental paradigms, technologies, and data types are created,” said Ryan Ly, one of the primary software engineers for NWB.
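
The general idea behind an extension mechanism can be illustrated with a small, hypothetical Python sketch. It does not use the real NWB or HDMF APIs: `Spec`, `Namespace`, the stand-in core type `TimeSeries`, and the community type `LabCustomSeries` with its `excitation_wavelength` field are all invented for illustration. The point it shows is that a new type can build on an existing core type, inheriting its required fields and adding its own, without anyone editing the core definitions.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Spec:
    """Hypothetical type specification: a name, the fields it adds, and an optional parent type."""
    name: str
    fields: Tuple[str, ...]
    extends: Optional[str] = None


class Namespace:
    """Hypothetical registry: core types are registered once; extensions add new types on top."""

    def __init__(self) -> None:
        self._specs: Dict[str, Spec] = {}

    def register(self, spec: Spec) -> None:
        # Extensions may add new types, but may not redefine existing ones.
        if spec.name in self._specs:
            raise ValueError(f"type {spec.name!r} is already defined")
        if spec.extends is not None and spec.extends not in self._specs:
            raise ValueError(f"parent type {spec.extends!r} is not defined")
        self._specs[spec.name] = spec

    def required_fields(self, name: str) -> Tuple[str, ...]:
        """Collect fields from the type and all of its ancestors, parent fields first."""
        spec = self._specs[name]
        inherited = self.required_fields(spec.extends) if spec.extends else ()
        return inherited + spec.fields

    def validate(self, name: str, record: dict) -> List[str]:
        """Return the required fields that are missing from a record."""
        return [f for f in self.required_fields(name) if f not in record]


ns = Namespace()
# Stand-in "core" type (not the real NWB schema).
ns.register(Spec("TimeSeries", fields=("data", "timestamps")))
# Hypothetical community extension built on the core type, leaving it untouched.
ns.register(Spec("LabCustomSeries", fields=("excitation_wavelength",), extends="TimeSeries"))

print(ns.required_fields("LabCustomSeries"))
# ('data', 'timestamps', 'excitation_wavelength')
print(ns.validate("LabCustomSeries", {"data": [1, 2], "timestamps": [0.0, 0.1]}))
# ['excitation_wavelength']
```

Refusing to redefine an existing type is one simple way, assumed here for illustration, to let extensions evolve independently while files written against the core types stay readable.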

NWB is built on top of the Hierarchical Data Modeling Framework (HDMF), which the team developed during this project. HDMF modularizes the process of data standardization into three main components: data modeling and specification; data I/O and storage; and data interaction and data APIs. HDMF both insulates these components from one another and integrates them, so that a standard built on it can provide support throughout the data life cycle. As a result, data standards and extensions, optimized storage backends, and data APIs can each be developed flexibly while the other components of the data standards ecosystem remain stable. “Identifying and separating these key areas of concern has been essential not just from a software engineering perspective, but more importantly from a sociological perspective,” said Oliver Rübel, the principal investigator of the NWB project. “It allows us to focus discussions with the community and at the same time provides developers the flexibility to explore a broad range of technical solutions.”
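
To make this three-way separation more concrete, here is a minimal, hypothetical Python sketch. It is not HDMF's actual API; the names `TypeSpec`, `StorageBackend`, `JSONBackend`, `DictBackend`, and `Container` are invented for illustration. The specification layer declares what a data type must contain, the storage layer decides how records are persisted, and the API layer validates user data against the specification before handing it to whichever backend is plugged in.

```python
import json
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Dict, Tuple


# 1. Data modeling and specification (hypothetical, not HDMF's schema language).
@dataclass(frozen=True)
class TypeSpec:
    """Declares a named data type and the fields it requires."""
    name: str
    required: Tuple[str, ...]


# 2. Data I/O and storage: any backend implementing this interface can be used.
class StorageBackend(ABC):
    @abstractmethod
    def write(self, key: str, record: dict) -> None: ...

    @abstractmethod
    def read(self, key: str) -> dict: ...


class JSONBackend(StorageBackend):
    """Serializes records to JSON strings in memory (a stand-in for a real file format)."""

    def __init__(self) -> None:
        self._store: Dict[str, str] = {}

    def write(self, key: str, record: dict) -> None:
        self._store[key] = json.dumps(record)

    def read(self, key: str) -> dict:
        return json.loads(self._store[key])


class DictBackend(StorageBackend):
    """A second backend; swapping it in needs no change to the spec or API layers."""

    def __init__(self) -> None:
        self._store: Dict[str, dict] = {}

    def write(self, key: str, record: dict) -> None:
        self._store[key] = dict(record)

    def read(self, key: str) -> dict:
        return dict(self._store[key])


# 3. Data interaction / API: validates against the spec, then delegates storage.
class Container:
    def __init__(self, spec: TypeSpec, backend: StorageBackend) -> None:
        self.spec = spec
        self.backend = backend

    def save(self, key: str, **fields) -> None:
        missing = [f for f in self.spec.required if f not in fields]
        if missing:
            raise ValueError(f"{self.spec.name} is missing required fields: {missing}")
        self.backend.write(key, {"type": self.spec.name, **fields})

    def load(self, key: str) -> dict:
        return self.backend.read(key)


# Same spec and API code, two interchangeable storage backends.
spec = TypeSpec("TimeSeries", required=("data", "rate"))
for backend in (JSONBackend(), DictBackend()):
    api = Container(spec, backend)
    api.save("lfp", data=[0.1, 0.2, 0.3], rate=1000.0)
    print(type(backend).__name__, api.load("lfp"))
```

Because `Container` depends only on the abstract `StorageBackend` interface, a new or optimized backend can be added without touching the specification or the user-facing API, which is the kind of stability the separation of concerns is meant to provide.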

The robust capabilities of the NWB architecture provide the basis for a community-based neurophysiology data ecosystem and may also serve as a blueprint for creating complete data environments in other fields of biology. Indeed, the underlying HDMF technology is the foundation both for a project exploring the storage of standardized data and metadata associated with the National Microbiome Data Collaborative (NMDC) and for AI model training.

The Neurodata Without Borders 2.0 project, led by Berkeley Lab in collaboration with the Allen Institute for Brain Science and multiple neuroscience labs, received an R&D 100 Award from R&D World magazine in 2019. The NWB pilot project was initiated and driven by neuroscience community efforts and supported by several foundation and industry partners. The current project, NWB 2.0, is a complete re-architecting that enables NWB to address challenges associated with both scientific software engineering and neuroscience data. NWB 2.0 has received funding from the Kavli Foundation, Berkeley Lab's Laboratory Directed Research and Development Program, the National Institutes of Health, and the Simons Foundation.

About Computing Sciences at Berkeley Lab

High performance computing plays a critical role in scientific discovery. Researchers increasingly rely on advances in computer science, mathematics, computational science, data science, and large-scale computing and networking to increase our understanding of ourselves, our planet, and our universe. Berkeley Lab's Computing Sciences Area researches, develops, and deploys new foundations, tools, and technologies to meet these needs and to advance research across a broad range of scientific disciplines.