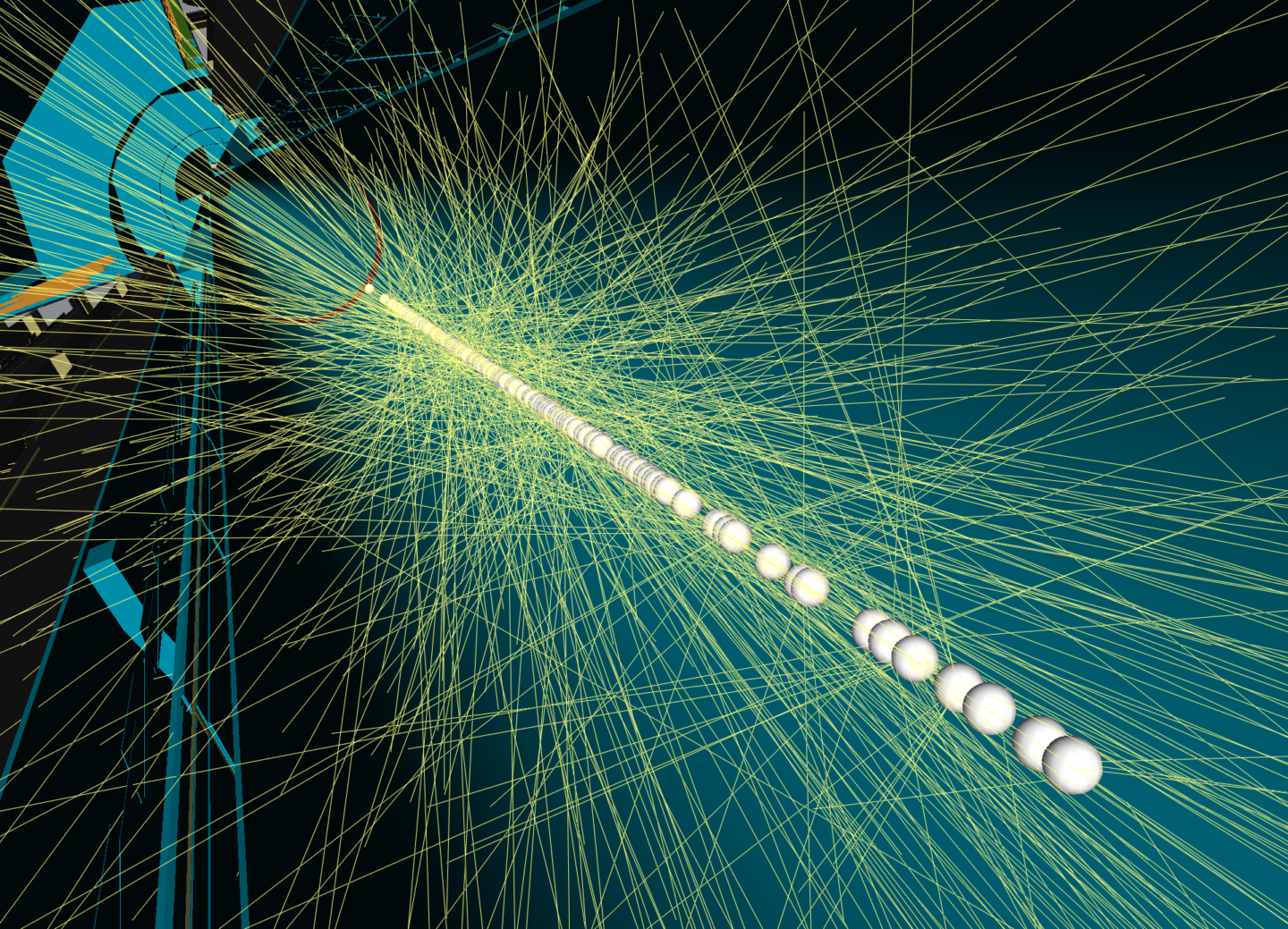

The 2012 discovery of the Higgs boson at CERN's Large Hadron Collider, which ended a 40-year international search, was aided by machine learning. Since then, high energy physics (HEP) has applied modern machine learning (ML) techniques to every stage of the data analysis pipeline, from raw data processing to statistical analysis. HEP data analysis has unique requirements, and its exabyte-scale datasets call for ML techniques developed specifically for HEP.

The Scientific Data Division’s Paolo Calafiura recently co-edited the book *Artificial Intelligence for High Energy Physics*, a self-contained, pedagogical introduction to using ML models in HEP. The book was published in March 2022 by World Scientific Publishing Company and is available online and in print.

Written by some of the foremost experts in their fields and organized by physics application, the book aims to bridge the gap between AI/ML textbooks and research papers on AI/ML applications in HEP. It gives students, postdocs, and experienced physicists an overview of state-of-the-art AI/ML techniques they can apply to their own work. ML researchers who want to become acquainted with the challenges and methods of experimental data analysis may also find it useful.

“While still evolving fast, the field of AI for HEP has reached a level of maturity and accumulated knowledge that makes it difficult for newcomers to get the ‘big picture’ of what has been done and what remains to be done,” said Calafiura, senior scientist and lead of the Physics and X-Ray Science Computing Group in the Scientific Data Division. “This is the book that I often wished that I had in my office when I was introducing a new student or postdoc to AI for HEP.”

Driven by the rapid growth of AI/ML in industry, modern AI/ML methods such as deep learning have become ubiquitous in computational HEP and have transformed the way HEP research is done. For example, boosted decision trees (BDTs), a method used a decade ago in the Higgs boson discovery, have been an integral part of many HEP analyses but are increasingly being replaced by deep neural networks. Accelerated AI/ML platforms for training and inference have enabled the use of deep learning architectures such as convolutional neural networks (CNNs), which outperform traditional algorithms on image data, and graph neural networks (GNNs), which are well suited to the complex geometries of HEP detectors. “There are many applications and the book covers most standard ML methods from BDTs to normalizing flows,” said Calafiura. “All the methods are presented using HEP examples.”

Calafiura and his co-editors coordinated a team of 33 extremely busy scientists who volunteered their time to write 19 pedagogical reviews of their fields, then spent still more time reviewing each other’s contributions and harmonizing the book’s contents. “We were lucky to have a committed–though somewhat inexperienced–editorial team and lots of help from the chapter authors,” said Calafiura.

About Computing Sciences at Berkeley Lab

High performance computing plays a critical role in scientific discovery. Researchers increasingly rely on advances in computer science, mathematics, computational science, data science, and large-scale computing and networking to increase our understanding of ourselves, our planet, and our universe. Berkeley Lab's Computing Sciences Area researches, develops, and deploys new foundations, tools, and technologies to meet these needs and to advance research across a broad range of scientific disciplines.