In our ongoing quest to better map and understand the universe, scientists compare models and simulations with different cosmological and astrophysical parameters against actual observations – much like detectives compare a suspect’s DNA against the profiles in their database.

“You want to find the fingerprint of our universe,” said Zarija Lukic, group lead of the Computational Cosmology Center at Lawrence Berkeley National Laboratory (Berkeley Lab) and a contributor to the ExaSky project, an effort of the Department of Energy’s (DOE) Exascale Computing Project (ECP) to extend the capabilities of cosmological simulation codes to efficiently use exascale resources.

But high-fidelity simulations generally require enormous amounts of computer time, which makes them expensive to run. One potential solution is surrogate models, often called emulators, which provide fast approximations of more compute-intensive simulations.

Toward this end, advancing surrogate modeling is one of the four main focus areas of ExaLearn, an ECP co-design center that brings machine learning to the forefront of the ECP effort. Since its launch in 2018, ExaLearn, which is now winding down as the ECP concludes this year, has worked on using exascale computers to train artificial intelligence and machine learning models and on improving ECP exascale codes by incorporating AI and machine learning.

“What the ECP wanted to do was set up a project that would take advantage of the exascale computing machines to focus on scientific AI and machine learning, which is very different than what industry is typically focused on – the challenges are different,” said Peter Nugent, AMCR Division Deputy for Science Engagement at Berkeley Lab as well as the Lab’s principal investigator on ExaLearn and head of surrogate modeling for the project. “So there was a real need there.”

Before machine learning and AI, scientists relied on statistics-based surrogate models, Lukic said. In the past, they could get away with fewer computational resources because the observational data were less precise.

“But now, as observations are becoming better and better, as statistical errors on different measurements are getting down to the 1% level, we need to have really accurate simulations and modeling,” Lukic explained. “And our computational requirements, if you want to do them from first principles, are just exploding.”
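To make the idea of a surrogate model concrete, here is a minimal sketch of an emulator in the classical, pre-machine-learning spirit. It assumes a hypothetical one-parameter "simulation" (the toy function `expensive_simulation` and the parameter name `omega_m` are illustrative, not ExaSky code): the expensive function is evaluated at a few design points, and a cheap piecewise-linear interpolator answers all later queries.

```python
import bisect
import math


def expensive_simulation(omega_m: float) -> float:
    """Hypothetical stand-in for a costly simulation: maps one cosmological
    parameter (here called omega_m) to one summary statistic."""
    return math.sin(3.0 * omega_m) + omega_m ** 2


class LinearEmulator:
    """Piecewise-linear surrogate built from a handful of simulation runs."""

    def __init__(self, params, outputs):
        pairs = sorted(zip(params, outputs))
        self.xs = [p for p, _ in pairs]
        self.ys = [y for _, y in pairs]

    def predict(self, x: float) -> float:
        # Clamp outside the training range rather than extrapolate.
        if x <= self.xs[0]:
            return self.ys[0]
        if x >= self.xs[-1]:
            return self.ys[-1]
        # Find the bracketing design points and interpolate linearly between them.
        i = bisect.bisect_right(self.xs, x)
        x0, x1 = self.xs[i - 1], self.xs[i]
        y0, y1 = self.ys[i - 1], self.ys[i]
        t = (x - x0) / (x1 - x0)
        return y0 + t * (y1 - y0)


# Run the "expensive" simulation at only 11 design points between 0.10 and 0.50...
train_x = [0.10 + 0.04 * k for k in range(11)]
train_y = [expensive_simulation(x) for x in train_x]
emulator = LinearEmulator(train_x, train_y)

# ...then query the cheap emulator anywhere in that range.
query = 0.317
approx = emulator.predict(query)
exact = expensive_simulation(query)
print(f"emulator={approx:.4f}  simulation={exact:.4f}  error={abs(approx - exact):.1e}")
```

Real cosmological emulators typically use Gaussian processes or neural networks over many parameters at once; linear interpolation is used here only because every step is easy to inspect.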

From low res to high res, from black and white to color

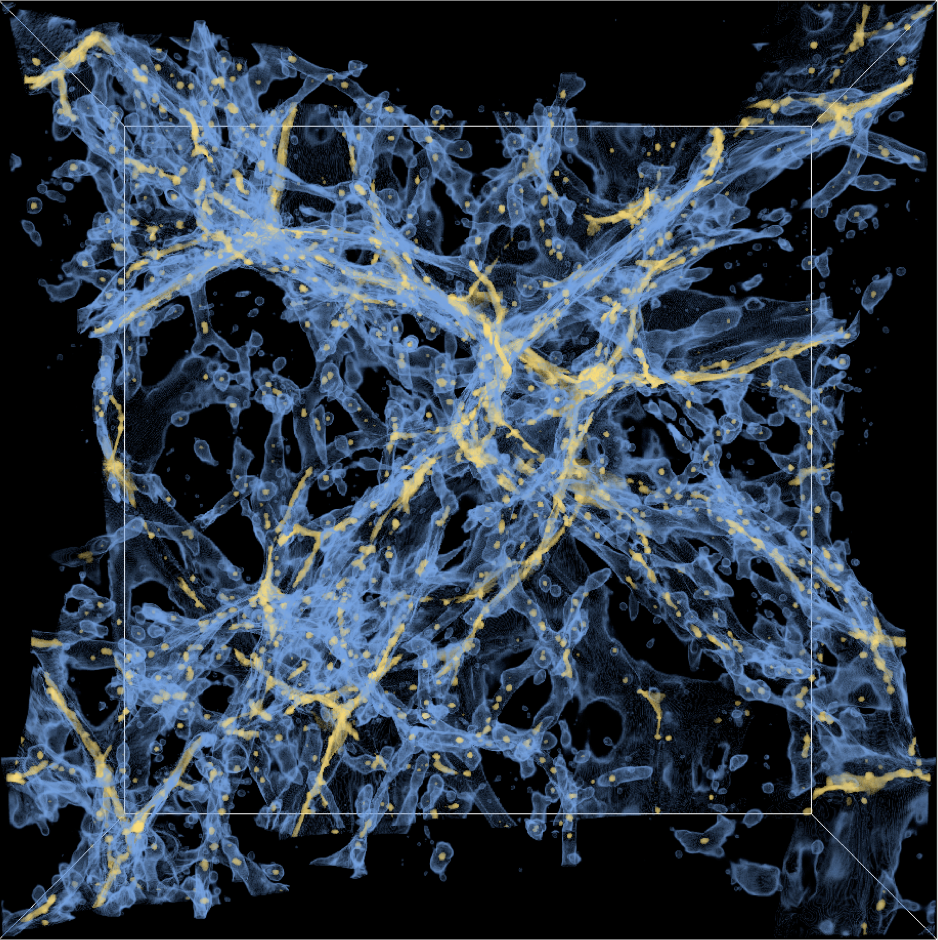

Nugent and his ExaLearn colleagues have concentrated on cosmology simulations, working most closely with the ExaSky project to help it achieve faster results through surrogate models. The ExaSky simulations, run with the HACC and Nyx cosmological simulation codes, support the DOE High Energy Physics Cosmic Frontier experiments by helping reduce systematic uncertainties in measurements of the cosmological parameters.

“The challenge observationally is that our statistics are incredibly good, better than a percent level, so our measurements are dominated by systematic biases; if I look in this part of the sky and it’s cloudy this night, I don’t get quite as good data as if I look over here,” Nugent said. “So I’m not going to see as many galaxies as I expect here.”

That means scientists must find a way to correct for these effects when making measurements that essentially try to tie the entire sky together, Nugent continued. The solution? Simulations.

“You take the simulation, you put it out there, and you say, ‘Okay, if I didn’t quite observe these guys as well as these guys in my simulation, how would that bias the result I get for the cosmology I’m trying to measure?’” Nugent said. “And then when I get that bias, I say, ‘Okay, well, this is what happened in my observations. So I’ll correct for it.’”
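The correction Nugent describes can be sketched with a toy model. This is a minimal illustration under loudly stated assumptions: the sky is split into ten hypothetical patches, cloudy patches have lower detection "completeness," the measurement is simply the mean galaxy count per patch, and the bias is treated as a single multiplicative factor. Real analyses forward-model far richer selection effects through full simulations.

```python
import random


def observe(true_counts, completeness, rng):
    """Apply patch-dependent observing conditions: each galaxy in a sky patch
    is detected with probability equal to that patch's completeness."""
    return [sum(1 for _ in range(n) if rng.random() < c)
            for n, c in zip(true_counts, completeness)]


def mean_density(counts):
    """The 'measurement' in this toy: mean galaxy count per sky patch."""
    return sum(counts) / len(counts)


rng = random.Random(42)

# Hypothetical observing conditions: ten sky patches, two of them cloudy.
completeness = [0.95] * 8 + [0.60] * 2

# 1. Simulation: true counts are known, so the bias can be measured directly.
sim_true = [rng.randint(900, 1100) for _ in range(10)]
sim_observed = observe(sim_true, completeness, rng)
bias = mean_density(sim_observed) / mean_density(sim_true)

# 2. Survey: only observed counts exist; divide out the bias found in step 1.
survey_true = [rng.randint(900, 1100) for _ in range(10)]  # unknowable in practice
survey_observed = observe(survey_true, completeness, rng)
corrected = mean_density(survey_observed) / bias

print(f"bias={bias:.3f}  raw={mean_density(survey_observed):.1f}  "
      f"corrected={corrected:.1f}  truth={mean_density(survey_true):.1f}")
```

The raw survey measurement comes out roughly 12 percent low because of the cloudy patches; dividing by the bias measured in the simulation, where the truth is known, recovers the true value to within a few percent.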

That’s where the ExaLearn co-design center comes into play. In essence, Nugent and his team are trying to generate fake universes rather than run full simulations. They train models on large amounts of simulation data so that the models learn to interpolate between different cosmologies.

“We learn to go from low-fidelity models, which are quick and dirty to run, to higher-fidelity models, and we learn to take lower-resolution simulations and translate them to higher-resolution simulations,” Nugent said.

He likened the effort to the way film director Peter Jackson took old black-and-white footage and colorized it for his 2018 World War I documentary “They Shall Not Grow Old,” bringing the conflict to life in never-before-seen vivid detail. Jackson used machine learning extensively to transform the “herky-jerky” original footage: colorizing it, smoothing it out, and preserving its resolution, Nugent said.

“In a similar way, we’re trying to do the same thing,” he said. “We’re trying to go from black and white to color, low- to high-fidelity physics. We’re trying to go from this herky-jerky, sort of lower resolution, lower speed, to much smoother, high speed, going to high resolution.”

To do so, the scientists take several low- and high-resolution simulations and use artificial intelligence and machine learning to learn how to map one to the other.

“And that way, we can then just take a low-res simulation and generate a high-res version really quickly without actually running it, which takes a tremendous amount of compute time,” Nugent said.

That means a 10-terabyte simulation of the universe that would ordinarily take millions of compute hours to run on Perlmutter can now be generated in milliseconds.
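The low-to-high-resolution idea can be illustrated at toy scale. This sketch assumes everything it uses: 1D periodic "fields" built from a few random sine modes stand in for high-resolution simulations, averaging neighboring cells stands in for running at low resolution, and a linear 3-tap filter fitted by least squares stands in for the deep learning models ExaLearn actually trained. Training on paired low/high-resolution fields lets the filter add back detail that simple copying misses.

```python
import math
import random


def make_fine_field(n_fine, rng):
    """Toy 'high-resolution simulation': a smooth periodic 1D field."""
    modes = [(k, rng.uniform(0, 2 * math.pi), rng.gauss(0, 1.0 / k)) for k in range(1, 6)]
    return [sum(a * math.sin(2 * math.pi * k * j / n_fine + ph) for k, ph, a in modes)
            for j in range(n_fine)]


def coarsen(fine):
    """Toy 'low-resolution simulation': average each pair of fine cells."""
    return [(fine[2 * i] + fine[2 * i + 1]) / 2 for i in range(len(fine) // 2)]


def features(coarse, i):
    n = len(coarse)  # periodic boundary
    return [coarse[(i - 1) % n], coarse[i], coarse[(i + 1) % n]]


def solve(a, b):
    """Solve a small linear system a x = b (Gaussian elimination, partial pivoting)."""
    m = len(b)
    aug = [row[:] + [b[r]] for r, row in enumerate(a)]
    for col in range(m):
        piv = max(range(col, m), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, m):
            f = aug[r][col] / aug[col][col]
            for c in range(col, m + 1):
                aug[r][c] -= f * aug[col][c]
    x = [0.0] * m
    for r in range(m - 1, -1, -1):
        x[r] = (aug[r][m] - sum(aug[r][c] * x[c] for c in range(r + 1, m))) / aug[r][r]
    return x


def fit(pairs):
    """Least-squares fit of one 3-tap filter per fine sub-cell (left, right)."""
    weights = []
    for s in (0, 1):
        ata = [[0.0] * 3 for _ in range(3)]
        atb = [0.0] * 3
        for coarse, fine in pairs:
            for i in range(len(coarse)):
                x, y = features(coarse, i), fine[2 * i + s]
                for p in range(3):
                    atb[p] += x[p] * y
                    for q in range(3):
                        ata[p][q] += x[p] * x[q]
        weights.append(solve(ata, atb))
    return weights


def upsample(coarse, weights):
    out = []
    for i in range(len(coarse)):
        x = features(coarse, i)
        out.extend(sum(w * v for w, v in zip(weights[s], x)) for s in (0, 1))
    return out


def rmse(a, b):
    return math.sqrt(sum((u - v) ** 2 for u, v in zip(a, b)) / len(a))


rng = random.Random(0)
train = []
for _ in range(20):  # paired low-/high-resolution training "simulations"
    fine = make_fine_field(64, rng)
    train.append((coarsen(fine), fine))
weights = fit(train)

test_fine = make_fine_field(64, rng)
test_coarse = coarsen(test_fine)
learned = upsample(test_coarse, weights)
naive = [v for v in test_coarse for _ in range(2)]  # nearest-neighbor copy
print(f"learned RMSE={rmse(learned, test_fine):.4f}  naive RMSE={rmse(naive, test_fine):.4f}")
```

Once trained, producing a high-resolution field costs only a few multiply-adds per cell, which is the source of the speedup; the hard part, at real scale, is the training.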

The surrogate modeling efforts haven’t been without their challenges, however.

“The simulations that the ExaSky team does are some of the largest simulations that are run at any of the leadership class supercomputing facilities, and the volume of data that they produce is incredibly large,” Nugent said. “So we had to learn how to distribute that data across an entire exascale machine in order to do the training to generate these surrogate models.”

Peter Harrington, a machine learning engineer at Berkeley Lab’s National Energy Research Scientific Computing Center (NERSC), worked with the Lab’s cosmologists and NERSC’s computing experts on the ExaLearn effort. The group experimented with many generative modeling techniques to find ones that could statistically match the cosmology simulation data, he explained.

“A major challenge was that, at the time, the state-of-the-art generative models (generative adversarial networks, or GANs) were notoriously difficult to train due to instability and variability,” he added, noting that running the same training with different random seeds could give very different results: one run might succeed while another failed.

The group worked extensively to improve the stability of these models, Harrington said, by incorporating techniques from the “mainstream” machine learning literature as well as custom methods informed by their physical understanding of the simulations and data they were working with.

NERSC was central to ExaLearn’s surrogate modeling work right from the start. In the early days, scientists took advantage of the GPU testbed NERSC set up on Cori in advance of Perlmutter.

“We did a lot of our initial training there,” Nugent said. “A lot of the preliminary work that we did before we transitioned over to the leadership class facilities [was] done there, as well as on Perlmutter once it first became available. We got access to work on it early on.”

ExaLearn’s ‘really good tools’ to help broad range of future applications

The ExaLearn effort has also included work on what’s called an inverse problem.

“We can actually look at a simulation of the universe and tell you what the cosmology is – using machine learning – very, very quickly,” Nugent said. “We did it well and we did it training on some of the largest machines out there.”

In other words, the machine learning model can tell scientists, in a fraction of a second, which cosmological parameters generated the simulation.

“That’s quite useful because, eventually, you could imagine doing something like that with the observational data,” Nugent said. “Just looking at the data that we collect from one of these surveys, like the Rubin Observatory or the Dark Energy Spectroscopic Instrument, running this machine learning thing where we’ve figured out all the biases and done training like that and just say, ‘And these are the cosmological parameters.’”
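The inverse problem can be sketched at toy scale as well. This is a minimal illustration under stated assumptions: each hypothetical "simulation" is a set of Gaussian fluctuations whose amplitude `sigma` plays the role of a cosmological parameter, the field is compressed to one hand-picked statistic (its standard deviation), and an ordinary least-squares line stands in for the neural networks used in practice. The model is trained on simulations with known parameters, then asked which parameter produced a new one.

```python
import random
import statistics


def simulate(sigma, rng, n=2000):
    """Toy 'simulation': n Gaussian fluctuations with amplitude sigma
    (a hypothetical stand-in for a cosmological parameter)."""
    return [rng.gauss(0.0, sigma) for _ in range(n)]


def summary(field):
    """Compress a simulated field into one statistic: its standard deviation."""
    return statistics.pstdev(field)


def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return my - slope * mx, slope


rng = random.Random(1)

# Training set: simulations run at known parameter values.
train_params = [rng.uniform(0.6, 1.0) for _ in range(50)]
train_stats = [summary(simulate(p, rng)) for p in train_params]
intercept, slope = fit_line(train_stats, train_params)  # learn statistic -> parameter

# Inference: given only a new "universe", estimate the parameter that made it.
true_sigma = 0.81
estimate = intercept + slope * summary(simulate(true_sigma, rng))
print(f"true={true_sigma:.3f}  inferred={estimate:.3f}")
```

After training, inference is a single cheap evaluation, which is why a learned inverse model can answer in a fraction of a second once the expensive training is done.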

The code the scientists used, LBANN, was developed at Lawrence Livermore National Laboratory and uses both model parallelism and data parallelism, Nugent explained.

Data parallelism in this context means giving each of many GPUs its own copy of the model and a different portion of the data. “And they can go on their merry way without talking to each other and then when they’re done processing that data, they spit back a result,” Nugent said. Model parallelism, on the other hand, splits the model itself across the GPUs, so each one computes its own chunk and the GPUs must communicate frequently.

“In order to do anything good with the cosmology simulations, we determined that you need both model and data parallelism,” Nugent said. “There’s just no way to be clever and shrink it down so you can solve it on a single GPU or even on a node of four or six GPUs. You really need to use the whole machine.”
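The difference between the two strategies shows up clearly in a small simulation. This sketch is pure Python, not LBANN: the "devices" are just lists, the model is an assumed linear model with squared-error loss, and the function names are hypothetical. Data parallelism shards the batch and combines gradients once at the end; model parallelism shards the weights and must combine partial results for every sample. Both reproduce the single-device gradient.

```python
import random


def grad_single(w, batch):
    """Reference: mean-squared-error gradient of a linear model on one device."""
    g = [0.0] * len(w)
    for x, y in batch:
        err = sum(wi * xi for wi, xi in zip(w, x)) - y
        for j, xj in enumerate(x):
            g[j] += err * xj / len(batch)
    return g


def grad_data_parallel(w, batch, n_devices):
    """Data parallelism: each simulated device holds the FULL model and a shard of
    the batch. Shards are processed independently with no communication; only the
    finished gradients are combined at the end (an all-reduce in real systems)."""
    shards = [batch[d::n_devices] for d in range(n_devices)]
    local = [grad_single(w, shard) for shard in shards]
    # Weight each shard's mean gradient by its size to recover the full-batch mean.
    return [sum(len(s) * g[j] for s, g in zip(shards, local)) / len(batch)
            for j in range(len(w))]


def grad_model_parallel(w, batch, n_devices):
    """Model parallelism: the weight vector is split into contiguous slices, one per
    simulated device. For every sample the devices must exchange partial results
    before any of them can finish, hence the heavy communication."""
    n = len(w)
    bounds = [(d * n // n_devices, (d + 1) * n // n_devices) for d in range(n_devices)]
    g_slices = [[0.0] * (hi - lo) for lo, hi in bounds]
    for x, y in batch:
        # Each device computes a partial dot product on its own slice of w.
        partials = [sum(w[j] * x[j] for j in range(lo, hi)) for lo, hi in bounds]
        # Communication step: sum the partials so every device knows the error.
        err = sum(partials) - y
        for (lo, hi), gs in zip(bounds, g_slices):
            for k, j in enumerate(range(lo, hi)):
                gs[k] += err * x[j] / len(batch)
    # Concatenate the per-device gradient slices.
    return [v for gs in g_slices for v in gs]


rng = random.Random(7)
n_features = 8
w = [rng.uniform(-1, 1) for _ in range(n_features)]
batch = [([rng.uniform(-1, 1) for _ in range(n_features)], rng.uniform(-1, 1))
         for _ in range(12)]

g_ref = grad_single(w, batch)
g_data = grad_data_parallel(w, batch, n_devices=4)
g_model = grad_model_parallel(w, batch, n_devices=4)
print(max(abs(a - b) for a, b in zip(g_ref, g_data)),
      max(abs(a - b) for a, b in zip(g_ref, g_model)))
```

When a model is too large for one GPU and the data too large for one node, frameworks combine the two: groups of devices split the model, and many such groups each process a different shard of the data.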

To Nugent, six years after the rollout of ExaLearn, the future looks promising. Scientists now have “some really good tools” to use on these next-generation exascale computing machines, he said.

“I think we can now go out and tackle a whole bunch of other problems that DOE is interested in,” Nugent said.

This might involve combining some of the other ExaLearn application pillars, which, aside from surrogate modeling and inverse solvers, include ways to use machine learning to develop control policies and design strategies. Nugent and his colleagues, for instance, would like to develop a surrogate model accurate enough that scientists could plug in several factors, model the next virus outbreak, and tell officials which policy would best minimize disease spread.

“And we’re using reinforcement learning, control, design, and surrogates, all in this process now,” Nugent said. “So it’s sort of come full circle on how you use all of these to tackle this.”

Beyond epidemiology, future projects could range from fusion energy to drug design and new polymers.

“We’ve stuck our toe in the water with ExaLearn,” Nugent said. “I think things are just going to explode now with AI in science on exascale-sized computers.”

About Computing Sciences at Berkeley Lab

High performance computing plays a critical role in scientific discovery. Researchers increasingly rely on advances in computer science, mathematics, computational science, data science, and large-scale computing and networking to increase our understanding of ourselves, our planet, and our universe. Berkeley Lab's Computing Sciences Area researches, develops, and deploys new foundations, tools, and technologies to meet these needs and to advance research across a broad range of scientific disciplines.