Experimental, observational, and theory-based scientific data present complex challenges in quality assurance, quality control, data integration, data reduction, analysis and visualization, real-time decision-making, human-in-the-loop monitoring, and steering. To address these challenges, we create integrated systems that manage data from instruments and computations. These systems perform streaming data analytics over high-speed wide-area networks such as the Energy Sciences Network (ESnet) and process data in real time at high-performance computing (HPC) facilities such as the National Energy Research Scientific Computing Center (NERSC). Statistical methods and/or surrogate models process the data as it arrives and close the loop by communicating results back to the data source. We also create intelligent, automated data and resource management architectures to store and archive the data for offline analysis.
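As a rough illustration of the closed loop described above, the sketch below applies a simple statistical method to a stream of readings and emits a feedback message when a reading looks anomalous. It is a minimal example under stated assumptions, not the method used by any of the projects on this page: the names `RunningStats` and `monitor`, the threshold, the warm-up length, and the sample readings are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class RunningStats:
    """Welford's online algorithm: mean and variance in a single pass."""
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0  # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (x - self.mean)

    @property
    def std(self) -> float:
        # Sample standard deviation; 0.0 until two samples have arrived.
        return math.sqrt(self.m2 / (self.count - 1)) if self.count > 1 else 0.0


def monitor(stream, threshold=3.0, warmup=5):
    """Yield (index, value) for readings that deviate from the running mean
    by more than `threshold` standard deviations (hypothetical policy)."""
    stats = RunningStats()
    for i, x in enumerate(stream):
        if stats.count >= warmup and stats.std > 0:
            if abs(x - stats.mean) > threshold * stats.std:
                yield i, x  # stands in for a message sent back to the source
                continue    # keep the outlier out of the baseline
        stats.update(x)


# Hypothetical detector readings with one obvious spike.
readings = [10.1, 9.9, 10.0, 10.2, 9.8, 10.0, 25.0, 10.1]
flags = list(monitor(readings))
print(flags)
```

Because the statistics are updated one reading at a time, the stream never has to be held in memory, which is the property that makes this kind of method usable on data in flight. In a real deployment the yielded flag would be replaced by whatever steering or alerting channel the instrument exposes.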

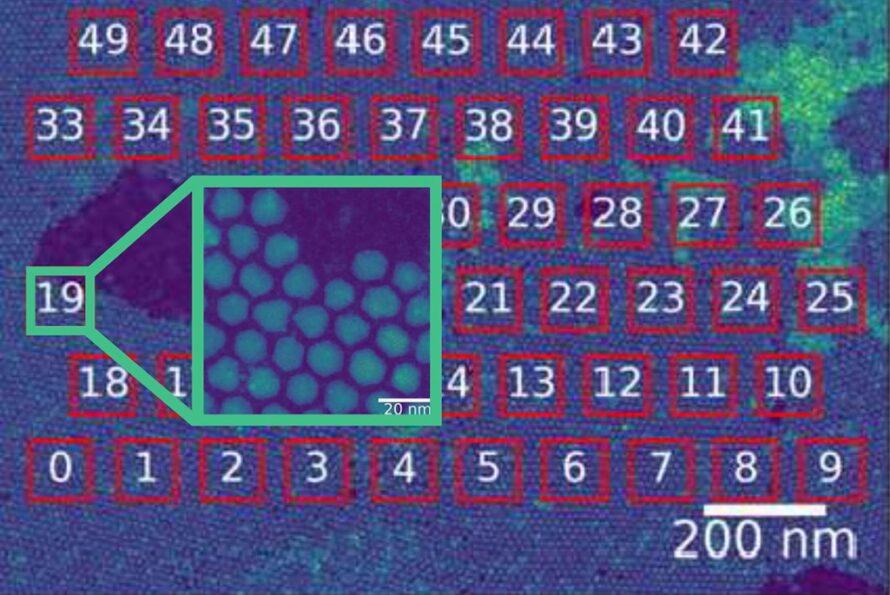

The Superfacility concept is a framework for integrating experimental and observational instruments with computational and data facilities. Data produced by light sources, microscopes, telescopes, and other devices can stream in real time to large computing facilities, where the data can be analyzed, archived, curated, combined with simulation data, and served to the science user community via powerful computing, storage, and networking systems. The NERSC Superfacility Project is designed to identify the technical and policy challenges that this concept poses for an HPC center. Contact: Debbie Bard

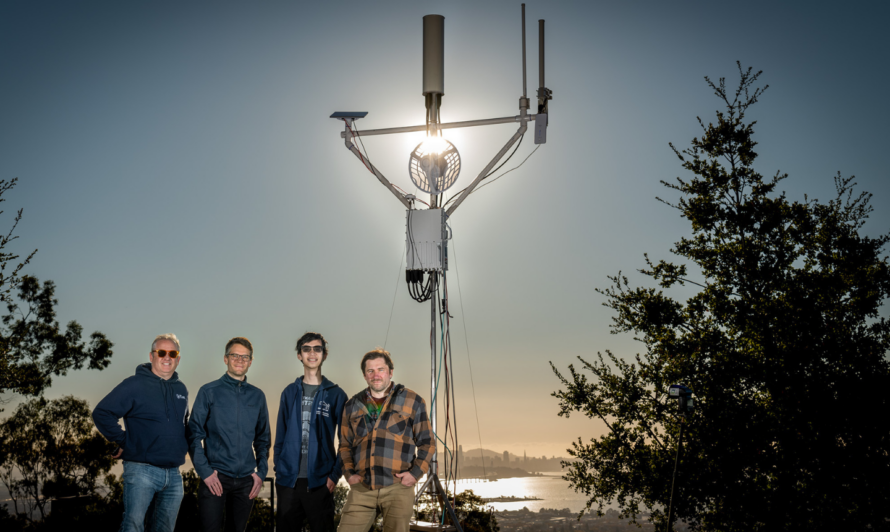

In Earth and environmental sciences, applications such as resilient infrastructure, prediction of ecosystem responses to environmental changes and disturbances, and monitoring of energy resources require collecting heterogeneous data from field environments and then deriving scientific insights or making decisions based on model predictions. This project develops the foundation for self-guiding field laboratories (SGFL), which facilitate adaptive measurements driven in near real time by synthesizing lab and field observations with models. Contact: Yuxin Wu

The ESnet-JLab FPGA Accelerated Transport (EJFAT, pronounced “Edge-Fat”) prototype is a collaboration between staff at the Energy Sciences Network (ESnet) and Thomas Jefferson National Accelerator Facility (Jefferson Lab). It is designed to seamlessly integrate edge and cluster computing so that data from multiple types of scientific instruments can be streamed to and processed by multiple HPC facilities in near real time and, if needed, those data streams can be redirected dynamically. Contact: ESnet Science Engagement

As the number, types, and capabilities of scientific sensors increase, the Wireless Faster Data for Science (wFDs) project extends ESnet’s high-speed network to support scientists working in remote and resource-challenged environments that a fiber backbone cannot reach. Leveraging advanced wireless technologies such as low-Earth-orbit satellite constellations, 5G, private Citizens Broadband Radio Service (CBRS) cellular, millimeter wave (mmWave), and Internet-of-Things tools like long-range (LoRa) mesh networks, ESnet aims to eliminate geographical constraints for field scientists and give them support similar to that provided for laboratory scientists across the DOE complex. Real-time data processing at high-performance computing (HPC) facilities such as NERSC further helps these scientists analyze and visualize data efficiently. Contact: Andrew Wiedlea