This October 22 Berkeley Lab Science in Motion video explains why the EJFAT prototype represents a significant breakthrough in real-time streaming.

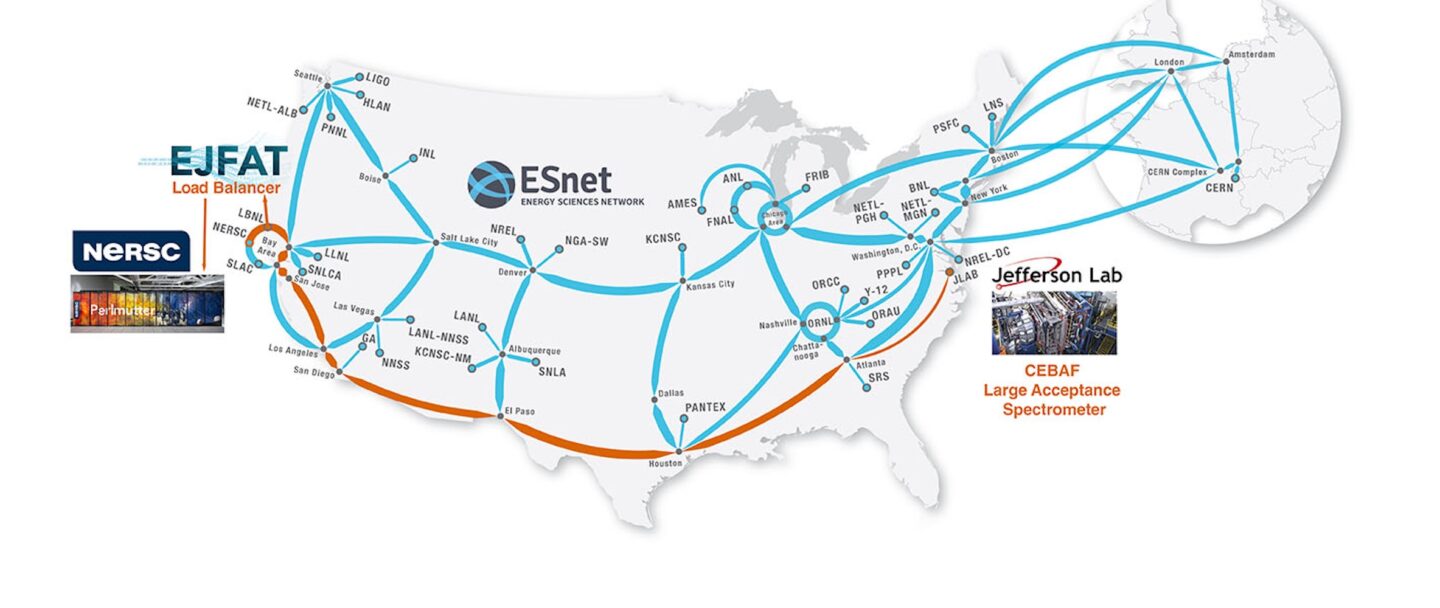

SEPTEMBER 19, NEWPORT NEWS, VA – In April 2024, scientists at Thomas Jefferson National Accelerator Facility (Jefferson Lab) clicked a button and held their collective breaths. Moments later, they exulted as a monitor showed steady saturation of their new 100 gigabit-per-second connection with raw data from a nuclear physics experiment. Across the country, their collaborators at Energy Sciences Network (ESnet) were also cheering: the data torrent was streaming flawlessly in real time from 3,000 miles away, across the ESnet6 network backbone, and into the National Energy Research Scientific Computing Center’s (NERSC’s) Perlmutter supercomputer at Lawrence Berkeley National Laboratory (Berkeley Lab).

Once it reached NERSC, 40 Perlmutter nodes (more than 10,000 cores) processed the data stream in parallel and sent the results back to Jefferson Lab in real time for validation, persistence, and final physics analysis. This was achieved without any buffering or temporary storage and without data loss or latency-related problems. (In this context, “real time” means the data were streamed continuously while processing was performed, with no significant delays or storage bottlenecks.)

This was only a test — but not just any test. “This was a major breakthrough for the transmission and processing of scientific data,” said Graham Heyes, Technical Director of the High Performance Data Facility (HPDF). “Capturing this data and processing it in real time is challenging enough; doing it when the data source and destination are separated by distances on continental scales is very difficult. This proof-of-concept test shows that it can be done and will be a game changer.”

A Technological Leap

In recent decades, people have become accustomed to what once felt like magic — the ability to stream video meetings, concerts, sports, and other events as they happen, to our computers and phones in high resolution, without jitter or lag. However, that technological leap, from broadcasting TV signals over the air to high-definition digital video over fiber-optic cables, took more than 50 years. And large-scale scientific research, involving data sets several orders of magnitude larger than a Netflix movie or YouTube livestream, still has hurdles to clear before achieving such ease of use.

It is much harder to stream science than science-fiction movies. A task that sounds simple in 2024, turning on an instrument and watching its output as easily as Californians can watch a Florida rocket launch live, involves far more demanding bandwidth and performance requirements. Right now, most large-scale instrument data must first be stored and then converted into files that can be processed and analyzed later.

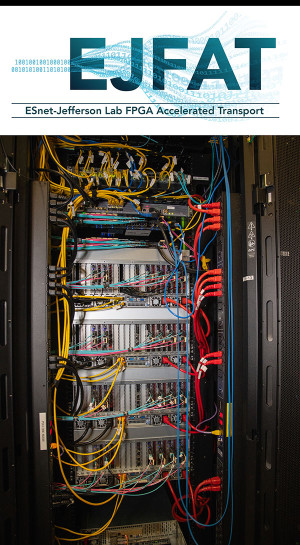

The April 2024 test represented the culmination of nearly three years of collaboration between Jefferson Lab and ESnet to develop a novel networking hardware prototype that can connect scientific instruments to computing clusters in real time over a wide-area network such as ESnet’s. The ESnet-JLab FPGA Accelerated Transport device, or EJFAT (pronounced “Edge-Fat”), uses traffic-shaping and load-balancing field-programmable gate arrays (FPGAs) designed to allow data from multiple types of scientific instruments to be streamed and processed in real time (or near real time) by multiple high-performance computing (HPC) facilities.

Such linking of the vast experimental and computational resources of the U.S. research enterprise is central to a broad initiative from the Department of Energy dubbed the Integrated Research Infrastructure (IRI). The DOE’s vision for IRI is “to empower researchers to meld DOE’s world-class research tools, infrastructure, and user facilities seamlessly and securely in novel ways to radically accelerate discovery and innovation.” (Learn more about IRI from the IRI Architecture Blueprint Activity Final Report.)

Contributing to that vision will be the HPDF project being developed by Jefferson Lab in partnership with Berkeley Lab. The first-of-its-kind resource for data science and research promises to transform the way scientific information is handled, transported, and stored among the many institutions across the DOE Office of Science’s six programs. Data streaming and processing could be key to realizing those goals.

Connecting the Data Firehose

Stewarded by Berkeley Lab, ESnet is best known as the high-performance network that serves as the “data circulatory system” for the DOE’s tens of thousands of scientific researchers and their collaborators worldwide. In addition to providing advanced networking services, ESnet staff members work closely with scientific researchers and national laboratories’ networking experts to codesign new integrated approaches and solutions. In mid-2021, after learning they were both focused on solving the real-time streaming challenge, ESnet Planning & Innovation team members joined forces with Heyes and his team of scientific computing researchers and developers at Jefferson Lab.

At the time, Heyes was also serving as a subject matter expert for the IRI Architecture Blueprint Activity’s working group on the Time-Sensitive Pattern for science workflows. One of three common patterns identified by the developers of IRI (see ESnet’s meta-analysis report), the Time-Sensitive Pattern covers workflows that require real-time, or near-real-time, low-latency response across more than one facility or resource for timely decision-making and experiment control. Imagine stopping a telescope’s pan through the sky to stay focused on a supernova or quickly adjusting a nuclear fusion experiment’s “shots,” or short plasma discharges, to respond to changes in plasma behavior. The concepts of linking such unique research assets at multiple labs so that they function as one efficient “SuperLab” or “Superfacility” have informed IRI.

Facilities with time-sensitive workflows include particle accelerators, X-ray light sources, and electron microscopes, all of which are fitted with numerous high-speed A/D data acquisition systems (DAQs) that can produce multiple 100- to 400-Gbps data streams for recording and processing. To date, this processing has been conducted on enormous banks of compute nodes in local and remote data facilities.

EJFAT is a tool for simplifying, automating, and accelerating how those raw data streams are quickly turned into actionable results. It could potentially work for all such instruments with time-sensitive workflows. ESnet and NERSC were awarded a Laboratory Directed Research and Development (LDRD) grant from the DOE to find a way to show the resilience of this approach.

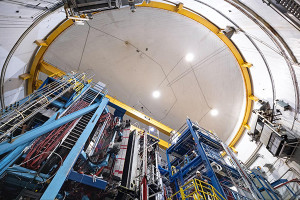

A view from below the CLAS12 detector inside Experimental Hall B at Jefferson Lab.

For the April experiment, the EJFAT collaborators replayed data from Jefferson Lab’s Continuous Electron Beam Accelerator Facility (CEBAF) Large Acceptance Spectrometer (CLAS12). The device was not connected to the detector during a live experiment; instead, it replayed streaming readout data recorded during a previous experimental run. Direct connection of detectors to processing will be a requirement of the Electron-Ion Collider, a next-generation particle accelerator being built at Brookhaven National Laboratory in partnership with Jefferson Lab.

CEBAF’s array of detectors churns out tens of gigabytes of data per second. Newer instruments such as a fusion tokamak, X-ray synchrotron, or particle collider pump out terabytes of scientific data. (A terabyte is 1,000 gigabytes.) For comparison, Netflix estimates that streaming a high-definition movie requires 8 megabytes per second.
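Because link speeds are quoted in gigabits per second while data volumes are quoted in gigabytes, a quick conversion helps put these rates in perspective. The Python sketch below is an illustrative back-of-envelope aid, not part of EJFAT; it assumes decimal prefixes (1 TB = 1,000 GB, as defined above) and 8 bits per byte, and its per-node figure assumes the load in the April test was spread evenly across the 40 nodes, which is a simplification.

```python
# Back-of-envelope rates for figures quoted in this article.
# Link speeds are in gigabits per second (Gbps); data volumes in gigabytes (GB).
# Assumes 8 bits per byte and decimal prefixes (1 TB = 1,000 GB).

BITS_PER_BYTE = 8
SECONDS_PER_HOUR = 3600

def gbps_to_gigabytes_per_s(gbps: float) -> float:
    """Convert a link rate in gigabits/s to gigabytes/s."""
    return gbps / BITS_PER_BYTE

def terabytes_per_hour(gbps: float) -> float:
    """Data volume (TB) produced by a stream saturating this rate for one hour."""
    return gbps_to_gigabytes_per_s(gbps) * SECONDS_PER_HOUR / 1000

def per_node_share_gbps(gbps: float, nodes: int) -> float:
    """Average share of the stream per node, assuming perfectly even load."""
    return gbps / nodes

if __name__ == "__main__":
    for rate in (100, 400):  # range of single-stream DAQ rates cited earlier
        print(f"{rate} Gbps = {gbps_to_gigabytes_per_s(rate):.1f} GB/s, "
              f"{terabytes_per_hour(rate):.0f} TB per hour")
    # April 2024 test: a 100 Gbps stream spread over 40 Perlmutter nodes
    print(f"{per_node_share_gbps(100, 40):.1f} Gbps per node")
```

Run as a script, it reports that a saturated 100 Gbps link moves 12.5 GB/s, or 45 TB per hour, and a 400 Gbps stream 50 GB/s, or 180 TB per hour, which illustrates why storing everything before processing is difficult.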

It’s hard to store all this data. At CEBAF, for example, researchers typically use a “trigger” system that records only interesting interactions. Triggered events are saved locally, and that subset is either processed locally or sent to DOE HPC centers such as NERSC. Until now, however, these traditional methods have not fully used ESnet’s high-speed infrastructure: the trigger system usually records events to files, so processing is limited by retrieval from storage and by file-based batch transfers.
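The trigger-then-file workflow can be sketched in a few lines of Python. This is a deliberately simplified illustration, not CLAS12’s actual trigger: the event format (an `id` and a single `energy` value), the threshold, and the function names `trigger`, `record_to_file`, and `batch_process` are all hypothetical. The point is the ordering: events are filtered, written to storage, and analyzed only after the file is read back.

```python
import json
import os
import tempfile

# Hypothetical event format: an ID plus one summary quantity ("energy").
# Real trigger logic is far more elaborate; the threshold is illustrative.

def trigger(events: list[dict], threshold: float) -> list[dict]:
    """Keep only the 'interesting' events; everything else is discarded."""
    return [e for e in events if e["energy"] > threshold]

def record_to_file(events: list[dict], path: str) -> None:
    """Write triggered events to storage, one JSON object per line."""
    with open(path, "w") as f:
        for e in events:
            f.write(json.dumps(e) + "\n")

def batch_process(path: str) -> float:
    """Later, read the whole file back and analyze it as one batch
    (here: the mean energy of the recorded events)."""
    with open(path) as f:
        kept = [json.loads(line) for line in f]
    return sum(e["energy"] for e in kept) / len(kept) if kept else 0.0

if __name__ == "__main__":
    events = [{"id": i, "energy": float(i % 10)} for i in range(100)]
    kept = trigger(events, threshold=7.0)
    path = os.path.join(tempfile.mkdtemp(), "run.jsonl")
    record_to_file(kept, path)  # analysis cannot start until this finishes
    print(len(kept), batch_process(path))
```

With these sample events, 20 of 100 pass the trigger; the other 80 are never analyzed at all.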

With the in-network data-stream processing that EJFAT offers, raw data travel in one direction and processed data are streamed back in real time, without the need for disk storage or buffering. This method requires no trigger and allows continuous data acquisition, filtering, and analysis. Rather than fetching water bucket by bucket, it’s more like turning on the bandwidth firehose and spraying at full power.
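For contrast, a streaming pipeline can be sketched with Python generators, where each stage consumes events as soon as the previous stage produces them and nothing is written to disk. In EJFAT the stages run on different machines connected by the network; here they run in one process, and the event format and the names `detector_stream`, `process`, and `running_fraction` are hypothetical. Every event is examined, so no trigger is needed, and a result is available after each event rather than after a batch completes.

```python
from typing import Iterator

# Hypothetical event format for a simple DAQ readout: an ID and an energy.

def detector_stream(n: int) -> Iterator[dict]:
    """Stand-in for a continuous detector readout."""
    for i in range(n):
        yield {"id": i, "energy": float(i % 10)}

def process(stream: Iterator[dict]) -> Iterator[dict]:
    """Analyze each event as it arrives; nothing is written to disk."""
    for event in stream:
        yield {"id": event["id"], "interesting": event["energy"] > 7.0}

def running_fraction(results: Iterator[dict]) -> Iterator[float]:
    """Results flow back continuously, so a summary exists after every event."""
    seen = hits = 0
    for r in results:
        seen += 1
        hits += r["interesting"]  # True counts as 1, False as 0
        yield hits / seen

if __name__ == "__main__":
    latest = 0.0
    for latest in running_fraction(process(detector_stream(100))):
        pass  # each value is available immediately, before the run ends
    print(latest)
```

The pipeline holds only one event at a time in memory, which is the property that lets a stream run indefinitely instead of filling a disk.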

Debbie Bard, Department Head for Science Engagement and Workflows at NERSC, was part of the LDRD team that enabled EJFAT to connect to NERSC. “This is a real milestone, demonstrating a new capability that is sorely needed by many science teams who use NERSC,” Bard said. “We are increasingly seeing a need for automated, cross-facility workflows at DOE HPC facilities, which are often time-sensitive. HPC facilities all need to take occasional outages for maintenance and repairs. When this happens, science teams have to go through a very manual process of figuring out where they can send their data and reconfiguring their workflows to direct to a new site. EJFAT removes all this complexity, allowing scientists to process their data in a truly automated way across many sites, according to their capacity to accept work.”

There are other approaches to solving the streaming problem, most of which rely on software (versus EJFAT’s hardware-accelerated design) and require much more customization. They include Jefferson Lab’s own HOSS solution, which inspired EJFAT and uses RAM-based forward buffers to stream data from a particle physics pipeline. NERSC was also part of a successful project to stream data from X-ray scattering experiments using free-electron lasers (XFELs). The DELERIA project (for Distributed Event Level Experiment Readout and Analysis), another ESnet collaboration that is also part of Berkeley Lab’s LDRD program, seeks to generalize the data pipeline for the Gamma Ray Energy Tracking Array (GRETA) instrument by providing an interface between a streaming detector and data analysis running in a computing environment (see paper). These new approaches complement the file-based data transfer workflows managed by current distributed science projects, such as the University of Chicago’s nonprofit service Globus.

What’s Next

EJFAT’s reliance on scalable hardware acceleration gives it an advantage in performance and speed. In essence, EJFAT acts as a lightning-speed traffic controller and packet cannon. It can identify and direct incoming data from multiple events on the fly, within nanoseconds, to their designated network paths — which may lead them to computing servers thousands of miles away. These servers will likely finish their work at slightly different rates, so EJFAT will increase or decrease the amount of work it assigns to a server, based on its pending work queue.
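One simple way to express “assign more work to servers with shorter pending queues” is to divide a fixed number of scheduling slots in proportion to each destination’s free queue space. The Python sketch below illustrates that idea under stated assumptions; it is not EJFAT’s actual control algorithm. The destination names, queue capacities, and slot count are hypothetical, and a capacity of 0 stands in for a site that is offline for maintenance, so it automatically receives no work.

```python
def allocate_slots(capacity: dict[str, int],
                   pending: dict[str, int],
                   n_slots: int) -> dict[str, int]:
    """Split n_slots among destinations in proportion to free queue space.

    capacity: maximum queue depth each destination accepts (0 = offline)
    pending:  work items currently queued at each destination
    Uses integer largest-remainder rounding so the slots always sum to n_slots
    (unless every destination is full, in which case nothing is assigned).
    """
    free = {d: max(capacity[d] - pending.get(d, 0), 0) for d in capacity}
    total = sum(free.values())
    if total == 0:
        return {d: 0 for d in capacity}  # every queue is full: back off
    # Floor of each proportional share, computed exactly with integers.
    slots = {d: f * n_slots // total for d, f in free.items()}
    leftover = n_slots - sum(slots.values())
    # Hand remaining slots to the largest remainders; full/offline hosts have 0.
    by_remainder = sorted(free, key=lambda d: (free[d] * n_slots) % total,
                          reverse=True)
    for d in by_remainder[:leftover]:
        slots[d] += 1
    return slots

if __name__ == "__main__":
    capacity = {"host-a": 100, "host-b": 100, "host-c": 100, "host-down": 0}
    pending = {"host-a": 20, "host-b": 60}  # host-c's queue is empty
    print(allocate_slots(capacity, pending, n_slots=512))
```

With these sample numbers, `host-c` (empty queue) receives 233 of 512 slots, `host-b` (largest backlog) receives 93, and `host-down` receives none; recomputing as queues drain or fill shifts work accordingly.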

ESnet’s 4.8 Tbps load-balancing cluster of 24 FPGAs, located at Berkeley Lab.

EJFAT’s network protocol design is where the true innovation lies. Its packet-forwarding protocol is stateless: the FPGAs can forward a given packet without relying on any further context or state, meaning additional FPGAs can be added in parallel for envelope-free scaling.
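The practical meaning of stateless forwarding can be illustrated with a small Python sketch in which each packet’s destination is computed from its header alone, by indexing a fixed lookup table with the packet’s event number. This modulo lookup is a simplified assumption for illustration, and the `Packet` fields and table contents are hypothetical; the March 2023 EJFAT paper describes the actual header format. Because no per-packet history is kept, packets can be split arbitrarily among parallel forwarders and still reach the same destinations, and all fragments of one event stay together.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Packet:
    event_number: int  # hypothetical header field identifying the event
    fragment: int      # which piece of the event this packet carries
    payload: bytes

def forward(packet: Packet, table: tuple[str, ...]) -> str:
    """Choose a destination from the packet header alone.

    No state is read or updated, so any forwarder holding the same table
    makes the same choice, and every fragment of an event goes to one host.
    """
    return table[packet.event_number % len(table)]

if __name__ == "__main__":
    table = ("host-a", "host-b", "host-a", "host-c")  # hypothetical slot table
    packets = [Packet(e, f, b"") for e in range(6) for f in range(2)]
    # Split packets between two parallel forwarders (even/odd positions).
    fpga_0 = {(p.event_number, p.fragment): forward(p, table) for p in packets[0::2]}
    fpga_1 = {(p.event_number, p.fragment): forward(p, table) for p in packets[1::2]}
    single = {(p.event_number, p.fragment): forward(p, table) for p in packets}
    print({**fpga_0, **fpga_1} == single)  # True: splitting the work changes nothing
```

Because neither forwarder needs to know what the other has seen, adding a third or fourth forwarder requires only giving it a copy of the table.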

Need more bandwidth? Add more FPGAs to grow by increments of 2×100 Gbps. Need more compute power? Core compute hosts can be added independently of the number of source DAQs. The design also accommodates a flexible number of CPUs and threads per host, treating each receiving thread as an independent load-balancing destination. (For more details, see the March 2023 paper, “EJFAT Joint ESnet JLab FPGA Accelerated Transport Load Balancer.”)
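The scaling rules in this paragraph reduce to simple arithmetic, sketched below in Python. The 200 Gbps per FPGA comes from the 2×100 Gbps increment described here; the function names and the per-host thread counts in the example are hypothetical. As a consistency check, 24 FPGAs at 200 Gbps each give 4.8 Tbps, matching the Berkeley Lab cluster pictured above.

```python
import math

GBPS_PER_FPGA = 2 * 100  # each added FPGA contributes 2 x 100 Gbps

def fpgas_needed(target_gbps: float) -> int:
    """Smallest number of FPGAs whose combined bandwidth covers the target."""
    return math.ceil(target_gbps / GBPS_PER_FPGA)

def destination_count(threads_per_host: list[int]) -> int:
    """Every receiving thread is an independent load-balancing destination,
    so hosts with different thread counts simply add up."""
    return sum(threads_per_host)

if __name__ == "__main__":
    print(fpgas_needed(4800))             # 4.8 Tbps -> 24 FPGAs
    print(fpgas_needed(1000))             # 1 Tbps -> 5 FPGAs
    print(destination_count([8, 8, 16]))  # three hosts -> 32 destinations
```

The two functions are deliberately independent, mirroring the design point that network bandwidth and compute capacity can be grown separately.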

Significantly, the EJFAT prototype could potentially be applied to a large number of comparable instruments within the DOE complex. The ESnet-Jefferson Lab project team is working with researchers at the Advanced Light Source at Berkeley Lab, the Advanced Photon Source at Argonne National Laboratory, and the Facility for Rare Isotope Beams at Michigan State University to develop a software plugin adapter that can be installed in streaming workflows for access to compute resources at NERSC, the Oak Ridge Leadership Computing Facility, and the HPDF testbed.

“EJFAT is a leading example of the strong co-design ethos within ESnet. Our network knowledge, combined with our software and hardware development expertise focused on scientific workflows, can yield flexible prototypes for the Office of Science that accelerate scientific discovery,” said Inder Monga, executive director of ESnet. “We look forward to working closely with the science programs, IRI ecosystem, and HPDF Project to dream, implement, and deploy such innovative solutions widely.”

Jenny DeVasher, Thomas Jefferson National Accelerator Facility, also contributed to this article. Learn more about EJFAT’s design.

About ESnet

The Energy Sciences Network (ESnet) is the U.S. Department of Energy’s high-performance network and user facility, delivering highly reliable data transport capabilities and services optimized for the requirements of large-scale integrated scientific research. Managed by Lawrence Berkeley National Laboratory, ESnet connects the DOE national laboratories, supercomputing facilities, and major scientific instruments, as well as additional research and commercial networks, to enable global collaboration on the world’s biggest scientific challenges.

About Thomas Jefferson National Accelerator Facility

Thomas Jefferson National Accelerator Facility (Jefferson Lab) is a U.S. Department of Energy Office of Science national laboratory. Scientists worldwide utilize the lab’s unique particle accelerator, known as the Continuous Electron Beam Accelerator Facility (CEBAF), to probe the most basic building blocks of matter – helping us to better understand these particles and the forces that bind them – and ultimately our world. In addition, the lab capitalizes on its unique technologies and expertise to perform advanced computing and applied research with industry and university partners, and provides programs designed to help educate the next generation in science and technology. Managing and operating the lab for DOE is Jefferson Science Associates, LLC. JSA is a limited liability company created by Southeastern Universities Research Association.

About NERSC

The National Energy Research Scientific Computing Center (NERSC) is a U.S. Department of Energy Office of Science User Facility that serves as the primary high performance computing center for scientific research sponsored by the Office of Science. Located at Lawrence Berkeley National Laboratory, NERSC serves almost 10,000 scientists researching a wide range of problems in climate, fusion energy, materials science, physics, chemistry, computational biology, and other disciplines.

About Computing Sciences at Berkeley Lab

High performance computing plays a critical role in scientific discovery. Researchers increasingly rely on advances in computer science, mathematics, computational science, data science, and large-scale computing and networking to increase our understanding of ourselves, our planet, and our universe. Berkeley Lab’s Computing Sciences Area researches, develops, and deploys new foundations, tools, and technologies to meet these needs and to advance research across a broad range of scientific disciplines.
