Breakthrough:

Berkeley Lab researchers have developed a domain-aware machine learning framework that predicts how much energy a battery will have left as it ages, using far fewer experiments than traditional methods. By integrating expert knowledge of battery behavior into machine learning techniques such as Gaussian Processes and Bayesian optimization, the team identified and ran only the most informative tests from a vast range of possible conditions. This approach delivers accurate predictions (less than 6% error) and significantly reduces the time and resources needed to understand and improve battery performance. The team also proposed an approach for generalizing predictions from laboratory-based aging experiments to real-world aging conditions. This work was made possible in part by the machine learning expertise and resources provided by the Center for Advanced Mathematics for Energy Research Applications (CAMERA) at Berkeley Lab.

Background:

Traditionally, predicting battery degradation has required extensive experiments across many combinations of temperature, charge rate, and usage pattern, a slow and costly process. This experimental burden has limited progress in battery research and slowed the adoption of new technologies. The multidisciplinary Berkeley Lab team addressed it by creating a data-driven framework that actively selects the experiments expected to be most informative, replacing brute-force testing with a more efficient approach. The framework lets researchers predict battery lifespan accurately and quickly, accelerating the development and deployment of next-generation energy technologies.

Breakdown:

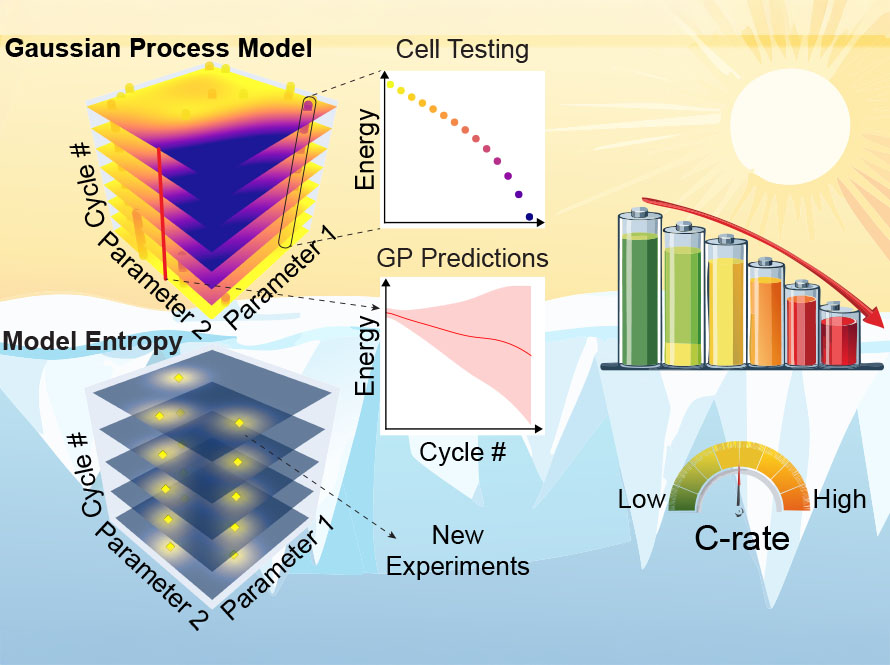

The researchers focused on the three main factors influencing battery aging: state of charge, charge/discharge rate, and temperature. Rather than exhaustively testing every scenario, they used Gaussian Processes to model battery behavior and quantify prediction uncertainty. Bayesian optimization then guided the selection of each new experiment, targeting areas where more data would be most valuable. This active learning strategy allowed them to gather the necessary data with just 48 targeted experiments. Their framework adapts to real-world variability in battery performance and provides confidence estimates for its predictions, making it a powerful tool for accelerating battery innovation and supporting the transition to advanced energy systems. While the current study was limited by factors such as testing only one temperature at a time and not fully incorporating the effects of cycling history, ongoing work aims to address these challenges and further improve prediction accuracy for real-world battery use.
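
The loop described above (fit a Gaussian Process, find where it is least certain, run that experiment next) can be illustrated with a small sketch. This is not the team's implementation, which the article does not describe. It assumes a squared-exponential kernel with fixed hyperparameters, inputs normalized to the range 0 to 1, a made-up `toy_capacity_loss` function standing in for real aging experiments, and maximum posterior variance as the selection rule, a simple stand-in for whichever acquisition function the researchers used. All function and parameter names are hypothetical.

```python
import numpy as np


def rbf_kernel(a, b, length_scale=0.3, variance=1.0):
    """Squared-exponential kernel between the rows of a and the rows of b."""
    sq_dist = np.sum((a[:, None, :] - b[None, :, :]) ** 2, axis=-1)
    return variance * np.exp(-0.5 * sq_dist / length_scale**2)


def gp_posterior(x_train, y_train, x_query, noise=1e-4):
    """Posterior mean and variance of a zero-mean GP at the query points."""
    k_tt = rbf_kernel(x_train, x_train) + noise * np.eye(len(x_train))
    k_tq = rbf_kernel(x_train, x_query)
    chol = np.linalg.cholesky(k_tt)
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train))
    v = np.linalg.solve(chol, k_tq)
    mean = k_tq.T @ alpha
    var = rbf_kernel(x_query, x_query).diagonal() - np.sum(v**2, axis=0)
    return mean, np.maximum(var, 0.0)  # clip tiny negative round-off


def toy_capacity_loss(x):
    """Hypothetical stand-in for an aging experiment; NOT real battery data."""
    soc, c_rate, temp = x[:, 0], x[:, 1], x[:, 2]
    return 0.2 * soc + 0.3 * c_rate**2 + 0.5 * np.sin(2.0 * temp)


def run_active_learning(n_experiments=48, n_initial=4, seed=0):
    """Pick experiments one at a time where the GP is most uncertain."""
    rng = np.random.default_rng(seed)
    # Candidate conditions: an 8 x 8 x 8 grid over normalized
    # (state of charge, charge/discharge rate, temperature).
    axis = np.linspace(0.0, 1.0, 8)
    candidates = np.array(np.meshgrid(axis, axis, axis)).reshape(3, -1).T

    # Start from a few randomly chosen conditions.
    chosen = list(rng.choice(len(candidates), size=n_initial, replace=False))
    while len(chosen) < n_experiments:
        x_train = candidates[chosen]
        y_train = toy_capacity_loss(x_train)  # "run" the chosen experiments
        _, var = gp_posterior(x_train, y_train, candidates)
        var[chosen] = -np.inf  # do not repeat a condition already tested
        chosen.append(int(np.argmax(var)))

    # Final model fitted to all selected experiments.
    x_train = candidates[chosen]
    mean, var = gp_posterior(x_train, toy_capacity_loss(x_train), candidates)
    return candidates, chosen, mean, var


if __name__ == "__main__":
    candidates, chosen, mean, var = run_active_learning()
    print("experiments run:", len(chosen), "of", len(candidates), "candidates")
    print("largest remaining predictive std:", float(np.sqrt(var.max())))
```

The returned variance plays the role of the confidence estimate mentioned above: it is small near tested conditions and larger in regions the selected experiments did not cover. A domain-aware version would replace the generic kernel and zero mean with ones that encode known battery behavior.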

Co-authors:

Maher B. Alghalayini, Daniel Collins-Wildman, Kenneth Higa, Armina Guevara, Vincent Battaglia, Marcus M. Noack, Stephen J. Harris

Publication:

“Machine-learning-based efficient parameter space exploration for energy storage systems,” Cell Reports Physical Science, Volume 6, Issue 4, 102543.

Funding:

Berkeley Lab Laboratory Directed Research and Development Program and CAMERA.

About Computing Sciences at Berkeley Lab

High performance computing plays a critical role in scientific discovery. Researchers increasingly rely on advances in computer science, mathematics, computational science, data science, and large-scale computing and networking to increase our understanding of ourselves, our planet, and our universe. Berkeley Lab's Computing Sciences Area researches, develops, and deploys new foundations, tools, and technologies to meet these needs and to advance research across a broad range of scientific disciplines.