UniviStor: Next-Generation Data Storage for Heterogeneous HPC

Optimizing I/O performance is one of the key features of this new scalable metadata service

April 1, 2019

Contact: Kathy Kincade, kkincade@lbl.gov, +1 510 495 2124

The explosive growth in scientific data and data-driven science, combined with emerging exascale architectures, is fueling the need for new data management tools and storage technologies in high performance computing (HPC). Traditional file systems are designed to manage each storage layer separately, putting the burden of moving data across multiple layers on the user. Emerging exascale storage architectures will add new layers of heterogeneous storage devices, making data management across these multiple devices increasingly time-consuming for users.

To address these challenges, the Proactive Data Containers (PDC) project team at Lawrence Berkeley National Laboratory (Berkeley Lab) is developing object-oriented I/O services designed to simplify data movement, data management, and data reading on next-generation HPC architectures. The latest software tool they have produced is UniviStor (Unified View of Heterogeneous HPC Storage), a data management service that integrates distributed and hierarchical storage devices into a unified view of the storage and memory layers.

The distinct interfaces and data services for each storage layer make data management a complex task, noted Suren Byna, a staff scientist in the Berkeley Lab Computational Research Division’s Scientific Data Management Group who is leading the PDC project team.

“In looking forward to the exascale, one of the major challenges is how storage is becoming multiple layers of heterogeneous devices, and having users manage data movement across all these layers is very inefficient, in terms of performance and scalability,” Byna said. “The goal is to make data management much simpler by letting a service take care of all the intelligence in moving the data around, and object stores are a viable next-generation option for HPC storage to achieve that.”

Integrating Memory and Storage Layers

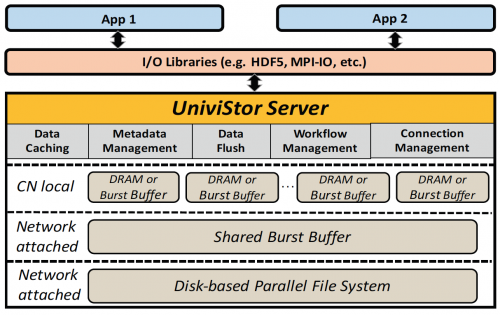

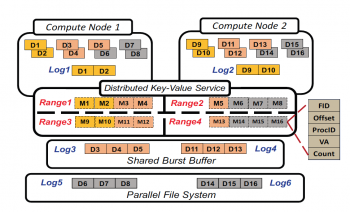

UniviStor is a scalable metadata service that gives the user a unified view of the storage subsystem by combining disk-based storage with non-volatile storage devices, such as solid-state drives (SSDs) and storage-class memory, whether those devices are located on compute nodes or in SSD-based shared storage (burst buffers). It is the next generation of two earlier tools: Data Elevator, also developed by the Berkeley Lab PDC team, which moves data vertically between two layers of storage, and BurstFS, which manages distributed memory across compute nodes as a single file system. UniviStor integrates the memory and storage layers distributed across compute nodes as well as the storage layers deployed between the compute nodes and the disk-based storage. It also supports in situ analysis of data while it is still in memory.
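The article does not describe UniviStor's internal data structures, so the following is only a minimal Python sketch of the general idea behind a unified view: one metadata service records which layer holds each piece of a file, so users no longer track the layers themselves. The `Tier` and `UnifiedNamespace` classes, the tier names, and the "fastest tier with free space" placement policy are hypothetical assumptions for illustration, not UniviStor's API or algorithm.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical sketch: not UniviStor's real data structures or placement policy.


@dataclass
class Tier:
    """One storage layer, e.g. node-local memory, a burst buffer, or disk."""
    name: str
    capacity: int  # bytes this layer can hold (hypothetical units)
    used: int = 0

    def free(self) -> int:
        return self.capacity - self.used


@dataclass
class UnifiedNamespace:
    """Toy metadata service mapping (path, offset) extents to storage tiers."""
    tiers: list[Tier]  # ordered fastest to slowest
    extents: dict[tuple[str, int], tuple[str, int]] = field(default_factory=dict)

    def write(self, path: str, offset: int, length: int) -> str:
        # Place the extent on the fastest tier that still has room for it.
        for tier in self.tiers:
            if tier.free() >= length:
                tier.used += length
                self.extents[(path, offset)] = (tier.name, length)
                return tier.name
        raise OSError("no storage tier has enough free space")

    def locate(self, path: str, offset: int) -> str:
        # Callers query one namespace instead of tracking each layer themselves.
        return self.extents[(path, offset)][0]


ns = UnifiedNamespace([Tier("memory", 4), Tier("burst_buffer", 8), Tier("disk", 1000)])
ns.write("plasma.h5", 0, 4)   # fits in memory
ns.write("plasma.h5", 4, 4)   # memory is now full, so this lands in the burst buffer
ns.write("plasma.h5", 8, 16)  # too large for the burst buffer's remaining space
print(ns.locate("plasma.h5", 8))  # disk
```

The sketch deliberately omits migrating data between layers and distributing the metadata across nodes; it only shows how a single lookup point can hide several storage layers from the user.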

“We have now combined both distributed and vertical memory and storage,” Byna said.

Optimizing I/O performance is one of the key features of UniviStor, which works through existing parallel I/O library APIs such as HDF5 and MPI-IO, so applications can use it without any changes to their source code. The Berkeley Lab team recently completed an evaluation of three storage approaches competing to serve future HPC systems: Lustre, Data Elevator, and UniviStor. In a paper presented at the 2018 IEEE International Conference on Cluster Computing, they reported that UniviStor’s I/O was 17 times faster than Data Elevator’s when writing and reading plasma physics simulation data files, and 46 times faster than that of the state-of-the-art Lustre file system deployed on NERSC’s Cori supercomputer.

In a related paper presented at the IEEE Big Data 2018 conference, the PDC project team described ARCHIE, a method for predicting future accesses to HDF5 array datasets and for prefetching and caching that data. ARCHIE makes reading data five times faster than with the Lustre and Cray DataWarp file systems on Cori. The group is now integrating the UniviStor and ARCHIE data movement optimizations into the PDC object-based storage for HPC as well as into Data Elevator for HDF5. The Data Elevator software is available on Bitbucket, and its development is currently funded by the Exascale Computing Project’s ExaHDF5 project.
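The article does not explain how ARCHIE predicts accesses, so the sketch below substitutes a simple, generic technique to illustrate the predict-prefetch-cache idea: stride-based prefetching into a least-recently-used (LRU) cache of array chunks. It assumes reads are identified by integer chunk indices and that the next read will repeat the most recent stride. The `StridePrefetcher` class and its `fetch` callback are hypothetical names, not part of ARCHIE, HDF5, or Data Elevator.

```python
from __future__ import annotations

from collections import OrderedDict
from typing import Callable

# Hypothetical sketch: generic stride prefetching, not ARCHIE's actual algorithm.


class StridePrefetcher:
    """Toy prefetching LRU cache for chunks of an array dataset."""

    def __init__(self, fetch: Callable[[int], bytes], capacity: int = 4):
        self.fetch = fetch          # slow read from the underlying storage layer
        self.capacity = capacity    # maximum number of chunks held in the cache
        self.cache: OrderedDict[int, bytes] = OrderedDict()
        self.last: int | None = None
        self.hits = 0
        self.misses = 0

    def _insert(self, idx: int, data: bytes) -> None:
        self.cache[idx] = data
        self.cache.move_to_end(idx)
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict the least recently used chunk

    def read(self, idx: int) -> bytes:
        if idx in self.cache:
            self.hits += 1
            self.cache.move_to_end(idx)
            data = self.cache[idx]
        else:
            self.misses += 1
            data = self.fetch(idx)
            self._insert(idx, data)
        # Predict the next chunk by repeating the latest stride, then prefetch it.
        if self.last is not None:
            stride = idx - self.last
            nxt = idx + stride
            if stride != 0 and nxt >= 0 and nxt not in self.cache:
                self._insert(nxt, self.fetch(nxt))
        self.last = idx
        return data


fetched: list[int] = []


def slow_fetch(idx: int) -> bytes:
    fetched.append(idx)   # record every read that reaches storage
    return bytes([idx])   # stand-in for a chunk of array data


p = StridePrefetcher(slow_fetch, capacity=4)
for chunk in [0, 2, 4, 6]:
    p.read(chunk)
print(p.hits, p.misses)  # 2 2
```

After the first two reads establish a stride of 2, chunks 4 and 6 are already cached when the application asks for them; the cost is one speculative fetch (chunk 8) that may never be used.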

“Object stores are a possible path forward for HPC storage at NERSC, and we expect UniviStor to help us with performance on all file systems,” said Quincey Koziol, a principal data architect at NERSC and a co-PI on the PDC project. “UniviStor can provide applications with high-performance, transparent access to a multi-level storage hierarchy.”

In addition to Byna and Koziol, the Berkeley Lab PDC team includes Teng Wang (now with Oracle), Bin Dong, Houjun Tang, and Jialin Liu. Other organizations involved in the PDC project include The HDF Group and Argonne National Laboratory.

About Computing Sciences at Berkeley Lab

High performance computing plays a critical role in scientific discovery. Researchers increasingly rely on advances in computer science, mathematics, computational science, data science, and large-scale computing and networking to increase our understanding of ourselves, our planet, and our universe. Berkeley Lab’s Computing Sciences Area researches, develops, and deploys new foundations, tools, and technologies to meet these needs and to advance research across a broad range of scientific disciplines.
