Crucial Leap in Error Mitigation for Quantum Computers

Advanced Quantum Testbed Experiments Demonstrate Randomized Compiling

December 9, 2021

By Monica Hernandez and William Schulz

Contact: aqt@lbl.gov

AQT researcher Akel Hashim verifying experimental results of randomized compiling (Credit: Christian Jünger/Berkeley Lab)

Researchers at Lawrence Berkeley National Laboratory’s Advanced Quantum Testbed (AQT) demonstrated that an experimental method known as randomized compiling (RC) can dramatically reduce error rates in quantum algorithms and lead to more accurate and stable quantum computations. RC is no longer just a theoretical concept for quantum computing: the multidisciplinary team’s breakthrough experimental results are published in Physical Review X.

The experiments at AQT were performed on a four-qubit superconducting quantum processor. The researchers demonstrated that RC can suppress one of the most severe types of errors in quantum computers: coherent errors.

Akel Hashim, an AQT researcher involved in the experimental breakthrough and a graduate student at the University of California, Berkeley, explained: “We can perform quantum computations in this era of noisy intermediate-scale quantum (NISQ) computing, but these are very noisy, prone to errors from many different sources, and don’t last very long due to the decoherence – that is, information loss – of our qubits.”

Coherent errors have no classical computing analog. These types of errors are systematic and result from imperfect control of the qubits on a quantum processor, and can interfere constructively or destructively during a quantum algorithm. As a result, it is extremely difficult to predict their final impact on the performance of an algorithm.

Although, in theory, coherent errors can be corrected or avoided through perfect analog control, they tend to worsen as more qubits are added to a quantum processor due to “crosstalk” among signals meant to control neighboring qubits.

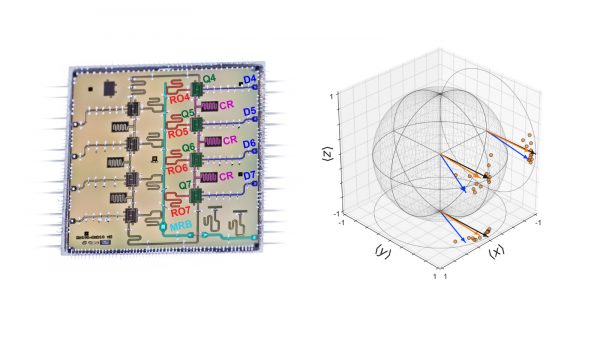

Experimental demonstration of error mitigation through randomized compiling. Left: Eight-qubit superconducting quantum processor. Right: Quantum state tomography of a single qubit with (orange) and without (blue) randomized compiling compared to the ideal (black) state. (Credit: Akel Hashim/Berkeley Lab)

First conceptualized in 2016, the RC protocol does not try to fix or correct coherent errors. Instead, RC mitigates the problem by randomizing the direction in which coherent errors impact qubits, such that they behave as if they are a form of stochastic noise. RC achieves this goal by creating, measuring, and combining the results of many logically-equivalent quantum circuits, thus averaging out the impact that coherent errors can have on any single quantum circuit.
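The averaging idea can be illustrated with a small single-qubit toy model. This is only a sketch under stated assumptions, not the software or the circuits used in the AQT experiments: the ideal circuit is assumed to be a string of idle (identity) cycles, each cycle is assumed to suffer the same small unwanted rotation about the X axis, and the function names `over_rotation`, `survival_bare`, and `survival_rc` are hypothetical. Each randomized circuit wraps every noisy cycle in a random Pauli gate and its inverse, which leaves the ideal circuit unchanged but flips the direction of the error at random.

```python
import numpy as np

# Single-qubit Pauli matrices.
I = np.eye(2, dtype=complex)
X = np.array([[0, 1], [1, 0]], dtype=complex)
Y = np.array([[0, -1j], [1j, 0]], dtype=complex)
Z = np.array([[1, 0], [0, -1]], dtype=complex)
PAULIS = [I, X, Y, Z]


def over_rotation(eps):
    """Assumed coherent error: an unwanted rotation by angle eps about X."""
    return np.cos(eps / 2) * I - 1j * np.sin(eps / 2) * X


def survival_bare(eps, n_cycles):
    """Probability of still measuring 0 after n noisy idle cycles, no RC."""
    state = np.array([1, 0], dtype=complex)
    error = over_rotation(eps)
    for _ in range(n_cycles):
        state = error @ state
    return abs(state[0]) ** 2


def survival_rc(eps, n_cycles, n_circuits, rng):
    """Same quantity, averaged over many logically equivalent random circuits."""
    error = over_rotation(eps)
    total = 0.0
    for _ in range(n_circuits):
        state = np.array([1, 0], dtype=complex)
        for _ in range(n_cycles):
            pauli = PAULIS[rng.integers(4)]
            # Random Pauli before the noisy cycle, the same Pauli after it.
            # Without noise the two cancel; with noise, Y and Z reverse the
            # direction of the unwanted X rotation.
            state = pauli @ error @ pauli @ state
        total += abs(state[0]) ** 2
    return total / n_circuits


if __name__ == "__main__":
    rng = np.random.default_rng(seed=0)
    print("bare:", survival_bare(0.05, 40))
    print("RC  :", survival_rc(0.05, 40, 200, rng))
```

In this toy model the unrandomized circuit drifts far from the ideal outcome because every small rotation pushes the qubit in the same direction, while the average over randomized circuits stays close to it because the rotations largely cancel.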

“We know that, on average, stochastic noise will occur consistently at the same average error rate, so we can reliably predict what the results will be from the average error rates. Stochastic noise will never impact our system worse than the average error rate – something that is not true for coherent errors, whose impact on algorithm performance can be orders of magnitude worse than their average error rates would suggest.”

Hashim used the analogy of the signal-to-noise ratio in astronomy to compare the impact of coherent errors versus stochastic noise in quantum computing. The longer a telescope operates, the more the signal will grow with respect to the noise, because the signal will coherently build upon itself, whereas the noise—being incoherent and uncorrelated—will grow much more slowly.

Coherent errors in quantum algorithms can build upon themselves through constructive interference and often grow faster than stochastic noise. However, the experimental demonstration of RC showed that coherent errors in quantum algorithms can be controlled to grow at a much slower rate.
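The difference in growth rates can be made concrete with a simple worked example. It rests on an assumed toy model rather than on the published experiment: a single qubit whose every gate is followed by a small unwanted rotation of angle $\varepsilon$ about a fixed axis. After $n$ gates the rotations add up to a total angle $n\varepsilon$, so the probability of a wrong measurement outcome is

$$
p_{\text{coherent}}(n) = \sin^2\!\left(\frac{n\varepsilon}{2}\right) \approx \frac{n^2\varepsilon^2}{4} \quad (n\varepsilon \ll 1).
$$

If the sign of each rotation is instead random ($+\varepsilon$ or $-\varepsilon$ with equal probability, independently for each gate), the total angle is $S\varepsilon$, where $S$ is a sum of $n$ random signs. Averaging over the signs and using $\mathbb{E}[\cos(S\varepsilon)] = \cos^n \varepsilon$ gives

$$
p_{\text{randomized}}(n) = \mathbb{E}\!\left[\sin^2\!\left(\frac{S\varepsilon}{2}\right)\right] = \frac{1 - \cos^n \varepsilon}{2} \approx \frac{n\varepsilon^2}{4} \quad (n\varepsilon^2 \ll 1).
$$

In this model the coherent error grows quadratically with the number of gates, while the randomized error grows only linearly, so the two differ by roughly a factor of $n$.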

The AQT team collaborated closely with the original creators of the protocol, Joseph Emerson and Joel Wallman, who co-founded the company Quantum Benchmark, Inc. (recently acquired by Keysight Technologies) to tackle the problem of benchmarking and mitigating errors in quantum computing systems.

“Not having to design the software ourselves to perform the RC protocol ultimately saved us a lot of time and resources and freed us to focus on the experimental work,” Hashim said.

By bringing in researchers and partners from across the quantum information science community in the United States and the world, AQT enables the exploration and development of quantum computing based on one of the leading technologies, superconducting circuits.

“RC is a universal protocol for gate-based quantum computing, which is agnostic to specific error models and hardware platforms,” Hashim said. “There are many applications and classes of algorithms out there that may benefit from the RC. Our collaborative research demonstrated that RC works to improve algorithms in the NISQ era, and we expect it will continue to be a useful protocol beyond NISQ. It is important to have this successful demonstration in our toolbox at AQT. We can now deploy it on other testbed user projects.”


About Computing Sciences at Berkeley Lab

High performance computing plays a critical role in scientific discovery. Researchers increasingly rely on advances in computer science, mathematics, computational science, data science, and large-scale computing and networking to increase our understanding of ourselves, our planet, and our universe. Berkeley Lab’s Computing Sciences Area researches, develops, and deploys new foundations, tools, and technologies to meet these needs and to advance research across a broad range of scientific disciplines.
