NERSC Supports First All-GPU Full-Scale Physics Simulation

June 6, 2023

By Elizabeth Ball

Contact: cscomms@lbl.gov

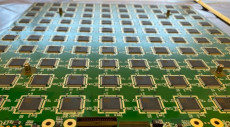

Application-Specific Integrated Circuits (ASICs) are attached to the back of a tile containing 4900 pixel sensors, which record the charges left behind by neutrinos passing through a liquid argon chamber. (Credit: Stefano Roberto Soleti, Berkeley Lab)

Using supercomputers at the National Energy Research Scientific Computing Center (NERSC), researchers have completed a neutrino detector simulation designed to run exclusively on graphics processing units (GPUs) – the first simulation of its kind and an example of using GPUs’ highly parallel structure to process large amounts of physical data. The research was published in the Journal of Instrumentation in April.

Neutrinos are the most abundant particle of matter in the universe. Produced by nuclear reactions like the one that powers the Sun, trillions of them pass through the human body every second, though they typically don’t interact with the human body or any other form of matter. Efforts to measure the mass of the neutrino and understand its relationship to matter in the universe have been underway for decades, and the research outlined in this paper could help clarify why the universe is made of matter and not antimatter (that is, particles with the same mass as matter, but opposite electrical charges and properties).

The simulation is part of the preparation for the Deep Underground Neutrino Experiment (DUNE), an international collaboration studying the neutrino that includes U.S. Department of Energy resources. Currently under construction, the components of the DUNE experiment will consist of an intense neutrino beam produced at Fermilab in Illinois and two main detectors: a Near Detector located near the beam source and a Far Detector located a mile underground at Sanford Underground Research Laboratory in South Dakota. Eventually the Near Detector will detect approximately 50 neutrino interactions per beam blast, adding up to tens of millions of interactions per year. By examining neutrinos and how they change in form over long distances, a process called oscillation, scientists hope to learn about the origin and behavior of the universe over time.

Since 1977, researchers have studied neutrinos using a device called a liquid argon time projection chamber (LArTPC), in which neutrinos flow through liquid argon and leave ions and electrons in their wake, which are collected by arrays of sensing wires. Using the wires’ positions and the charges’ arrival times as input, the detector produces 2D images of the neutrino interactions. However, a new method being pioneered at Berkeley Lab replaces the wires with sensors known as pixels, which add a third sensing dimension and yield 3D images instead – an increase in information, but also vastly more data to analyze and store. (In this case a “pixel” is a small sensor, unrelated to the pixels found in consumer electronics screens.) Before construction of the detectors begins, the team uses digital simulations to ensure that both the physical detectors and the workflows around them will work as planned.

“At Berkeley Lab we are developing a new technology that employs pixels instead of wires, so you are able to immediately have a 3D image of your event, and that allows you to have a much better capability to actually reconstruct your interaction,” said co-author Stefano Roberto Soleti. “However, the problem is that now you have a lot of pixels. In the case of the detector that we’re building, we have around 12 million pixels, and we need to simulate that.”

That’s where supercomputing resources at NERSC come in. Because GPUs are well suited to executing many calculations in parallel, they offer a much faster way of processing large quantities of data. Perlmutter’s thousands of GPU nodes allowed the researchers to simulate the detector over many nodes at once, greatly increasing the compute capability relative to a CPU-only approach. Soleti’s team saw a corresponding increase in speed – simulating the signal from each pixel took about one millisecond on a GPU, compared with ten seconds on a CPU.

“When you have so many channels, it becomes very difficult to simulate them on CPUs or in a classic way,” said Soleti. “Our idea was to use NERSC resources in particular; we started with Cori using test GPU nodes and then moved to Perlmutter to try to develop a physics detector simulation that runs on GPUs. To my knowledge, this is the first full physics detector simulation that runs entirely on GPUs: we do the full simulation from the energy deposit to the signal in our detector entirely on GPUs, so we don't have to copy the data from the GPU memory and then transfer it to the CPU. To my knowledge this is the first full physics detector simulation that does this, and it allowed us to achieve an improvement of around four orders of magnitude in the speed of our simulation. It would've been unfeasible to simulate this detector with a classic technique using CPU algorithms, but with these new methods and with the NERSC resources using the GPU nodes, it is feasible.”

To make it happen, the team ran the simulation using a set of GPU-optimized algorithms written in Python and translated into CUDA, a platform that allows the use of GPUs for general-purpose computing from different programming languages. In this case the team chose Numba to do that translation, which allowed them to interface with CUDA and manage memory without directly writing C++ code. According to Soleti, this method of simulating many sensors in parallel on GPUs is potentially useful for other types of research as well, as long as the computations involved lend themselves to running in parallel.
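For readers curious what "Python translated into CUDA" can look like, here is a minimal sketch of the general pattern, not the team's actual code. It assumes a made-up per-pixel model (signal = gain × deposited charge); the names `pixel_kernel`, `simulate_pixels`, and `GAIN` are hypothetical. Each GPU thread handles one pixel, and the function falls back to plain NumPy when Numba or a CUDA-capable GPU is not available.

```python
import numpy as np

try:
    from numba import cuda
    HAVE_GPU = cuda.is_available()
except ImportError:
    cuda = None
    HAVE_GPU = False

GAIN = 2.0  # hypothetical charge-to-signal factor, for illustration only

if HAVE_GPU:
    @cuda.jit
    def pixel_kernel(charge, gain, signal):
        # One thread per pixel: cuda.grid(1) is this thread's global index.
        i = cuda.grid(1)
        if i < charge.shape[0]:  # guard threads past the end of the array
            signal[i] = gain * charge[i]


def simulate_pixels(charge, gain=GAIN):
    """Return a toy signal for every pixel (hypothetical model)."""
    charge = np.ascontiguousarray(charge, dtype=np.float32)
    if not HAVE_GPU:
        # CPU fallback: same arithmetic, vectorized with NumPy.
        return gain * charge
    d_charge = cuda.to_device(charge)            # copy input to GPU memory
    d_signal = cuda.device_array_like(d_charge)  # allocate output on the GPU
    threads = 128
    blocks = (charge.shape[0] + threads - 1) // threads
    pixel_kernel[blocks, threads](d_charge, gain, d_signal)
    return d_signal.copy_to_host()               # copy the result back once
```

In a simulation that stays on the GPU from start to finish, intermediate arrays such as `d_signal` would be handed directly to the next kernel rather than copied back to the host after each step; the single copy at the end here is only so the result can be inspected.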

Now that Soleti and his team have a working simulation, what’s next? The simulation is a key step in making DUNE a reality, both digitally and in the real world. With the help of the team’s simulation, a prototype of the DUNE Near Detector is currently being installed in a neutrino beam at Fermilab and will begin operation later this year; installation of the final detector is slated to begin in 2026. But there’s plenty more to do as the whole instrument is constructed: next steps include a simulation for the full detector, which represents a manyfold increase in pixels.

“The next step is to produce the simulation for the full detector,” said Soleti. “Right now the prototype has about one ton of liquid argon and tens of thousands of pixels, but the full detector will have 12 million pixels. So we’ll need to produce a simulation and scale up even more to the level that we’ll need for the full detector.”

Overall, the approach introduced at Berkeley Lab is a major step forward for the DUNE experiment as well as for the field of high-energy physics and other scientific areas of study, said Dan Dwyer, the technical lead for Near Detector work at Berkeley Lab and another author on the paper.

“This novel approach to detector simulation provides a great example of how to leverage modern computing technologies in high-energy physics,” said Dwyer. “It opens new paths to studying the data from DUNE and other future experiments.”

About Computing Sciences at Berkeley Lab

High performance computing plays a critical role in scientific discovery. Researchers increasingly rely on advances in computer science, mathematics, computational science, data science, and large-scale computing and networking to increase our understanding of ourselves, our planet, and our universe. Berkeley Lab’s Computing Sciences Area researches, develops, and deploys new foundations, tools, and technologies to meet these needs and to advance research across a broad range of scientific disciplines.
