Breakthrough

Berkeley researchers have developed a proven mathematical framework for the compression of large reversible Markov chains—probabilistic models used to describe how systems change over time, such as proteins folding for drug discovery, molecular reactions for materials science, or AI algorithms making decisions—while preserving their output probabilities (likelihoods of events) and spectral properties (key dynamical patterns that govern the system’s long-term behavior).

While describing the dynamics of ubiquitous physical systems, Markov chains also allow for rich theoretical and computational investigation. By exploiting the special mathematical structure behind these dynamics, the researchers’ new theory delivers models that are quicker to compute, equally accurate, and easier to interpret, enabling scientists to efficiently explore and understand complex systems. This advance sets a new benchmark for efficient simulation, opening the door to scientific explorations once thought computationally out of reach.

Background

Markov chains are widely used to model systems that evolve in time with some intrinsic randomness, from the folding of proteins to the spread of disease. But as the number of possible states grows—such as all the shapes a protein might take or all the reaction pathways in a chemical network—these models can become so large that even the most powerful computers struggle to simulate them. Existing simplification methods can speed up computation, but they often distort the system’s essential dynamics, making predictions unreliable. This has limited researchers’ ability to fully explore some of the most complex and important problems in science.

Breakdown

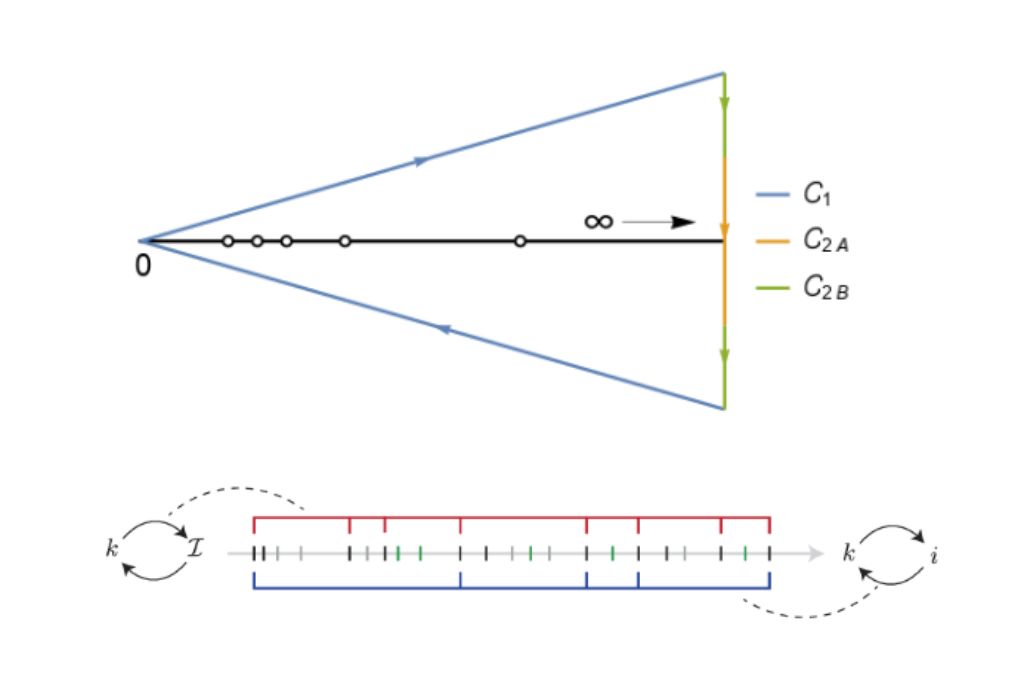

The Berkeley Lab team develops two complementary strategies to shrink large reversible Markov chains while preserving their essential behavior. The first, called projective compression, yields reduced models guaranteed to faithfully mirror the long-time dynamics of the original systems. The second, called structure‑preserving compression, builds a reduced Markov chain that follows the same rules as the original but operates only on a carefully chosen set of key states. Together, both of these approaches capture the system’s critical dynamics in a far more compact form.

To ensure the reduced models remain trustworthy, the team has developed strict and simple accuracy controls. They derived mathematical formulas to measure how closely the compressed version matches the original, relying on heretofore unknown connections between numerical linear algebra, probability theory, and complex analysis. These guarantees extend the applicability of a well-known mathematical technique called the Nyström approximation and use a modern optimization method—known as nuclear maximization—to select the best states to keep in the reduced model. In tests on complex systems, the approach produced models that ran much faster, retained essential dynamics, and were easier to interpret, bringing large-scale simulations within reach.

Co-authors:

Mark Fornace and Michael Lindsey

Publication:

About Computing Sciences at Berkeley Lab

High performance computing plays a critical role in scientific discovery. Researchers increasingly rely on advances in computer science, mathematics, computational science, data science, and large-scale computing and networking to increase our understanding of ourselves, our planet, and our universe. Berkeley Lab's Computing Sciences Area researches, develops, and deploys new foundations, tools, and technologies to meet these needs and to advance research across a broad range of scientific disciplines.