Computing Sciences Summer Program 2024: Talks & Events

Summer Program Kickoff

Who: Ana Kupresanin and Stefan Wild

When: June 4, 11 a.m. - 12 p.m.

Where: B50 Auditorium

Stefan Wild directs the Applied Mathematics and Computational Research (AMCR) Division in the Computing Sciences Area at Lawrence Berkeley National Laboratory (Berkeley Lab). AMCR conducts research and development in mathematical modeling, simulation and analysis, algorithm design, computer system architecture, and high-performance software implementation. Wild came to Berkeley Lab in December of 2022 from Argonne National Laboratory, where he was a senior computational mathematician and deputy division director of the Mathematics and Computer Science Division.

Ana Kupresanin directs the Scientific Data (SciData) Division in the Computing Sciences Area at Lawrence Berkeley National Laboratory (Berkeley Lab). The SciData Division transforms data-driven discovery and understanding through the development and application of novel data science methods, technologies, and infrastructures with scientific partners. SciData builds data infrastructure, emphasizing FAIR data principles and cybersecurity; integrates machine learning with high performance computing; and fosters partnerships with domain scientists, addressing the challenges of analyzing present and future experimental, observational, and simulation data.

General Tour of Berkeley Lab

Who: Jean Sexton

When: June 5, 11 a.m. - 12 p.m.

Where: In-person, RSVP Only

About the event: Take this informative in-person tour, led by Jean Sexton. Explore and learn more about Berkeley Lab's historic campus.

NERSC: Scientific Discovery through Computation

Rebecca Hartman-Baker and Charles Lively

Who: Rebecca Hartman-Baker and Charles Lively

When: June 6, 11 a.m. - 12 p.m.

Where: B59-4102

Abstract: What is High-Performance Computing (and storage!), or HPC? Who uses it and why? We'll talk about these questions as well as what makes a supercomputer so super and what's so big about scientific Big Data. Finally, we'll discuss the challenges facing system designers and application scientists as we move into the exascale era of HPC.

Bio: Rebecca Hartman-Baker leads the User Engagement Group at the National Energy Research Scientific Computing Center (NERSC), where she is responsible for engagement with the NERSC user community to increase user productivity via advocacy, support, training, and the provisioning of usable computing environments. She began her career at Oak Ridge National Laboratory, where she worked as a postdoc and then as a scientific computing liaison in the Oak Ridge Leadership Computing Facility. Before joining NERSC in 2015, she worked at the Pawsey Supercomputing Centre in Australia, where she coached two teams to the Student Cluster Competition at the annual Supercomputing conference, led the HPC training program for a time, and was in charge of the decision-making process for determining the architecture of the petascale supercomputer installed there in 2014. Rebecca earned a Ph.D. in Computer Science from the University of Illinois at Urbana-Champaign.

Bio: Charles Lively III, PhD is a Science Engagement Engineer and HPC Consultant in the User Engagement Group (UEG) at NERSC. Charles earned his Ph.D. from Texas A&M University and his research interests are in the area of Performance Modeling and Optimization of large-scale applications and energy-aware computing.

Panel: Career Paths

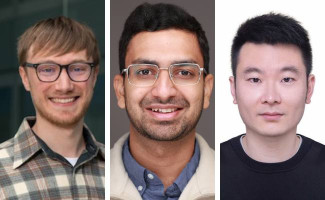

(L-R) Ann Almgren, Sartaj Baveja, and Krti Tallam

Who: Ann Almgren, Sartaj Baveja, and Krti Tallam

When: June 10, 3 - 4 p.m.

Where: B50 Auditorium

Abstract: In this panel, participants in the CS Area Summer Program will have a unique opportunity to get to know how three of our staff members arrived at different levels in their careers. Ann Almgren will share her experiences at the Lab and her path to a leadership position; Sartaj Baveja will tell us about his work as a computer systems engineer; and Krti Tallam will tell us about her journey to Berkeley Lab as a postdoc.

Bio: Ann Almgren is a senior scientist and department head of AMCRD’s Applied Math Department. Her primary research interest is in computational algorithms for solving PDEs in a variety of application areas. Her current projects include the development and implementation of new multiphysics algorithms in high-resolution adaptive mesh codes that are designed for the latest multicore architectures. She is a SIAM Fellow and serves on the editorial boards of CAMCoS and IJHPC. Prior to coming to Berkeley Lab, she worked at the Institute for Advanced Study in Princeton, NJ, and at Lawrence Livermore National Lab.

Bio: Sartaj Baveja is a Senior Software Engineer at the Energy Sciences Network (ESnet), Lawrence Berkeley National Laboratory. His work involves developing tools to support the network. Some of his recent projects involve building a unified my.es.net portal that provides insights into the ESnet network; building Stardust, the network measurement and analysis environment for ESnet; and working on open source visualization libraries for processing and visualizing network traffic data.

Bio: Krti Tallam is a postdoctoral researcher with the Robust Deep Learning group at the International Computer Science Institute at UC Berkeley and the Scientific Data Division at Lawrence Berkeley National Laboratory. She earned her PhD in computational biology and has since combined her passions for climate science, machine learning, and artificial intelligence in her postdoctoral work. Krti's research focuses on generative artificial intelligence and AI security for scientific data, with a particular emphasis on developing and interrogating hidden watermarking techniques for data authentication and robustness in scientific research.

Overview of Research and Developments in Scalable Solvers Group

Xiaoye (Sherry) Li

Who: Xiaoye (Sherry) Li

When: June 11, 11 a.m. - 12 p.m.

Where: B59-4102

Abstract: We will highlight various ongoing projects in SSG. They span broad areas of numerical linear algebra, high performance scientific computing, and AI/ML. The group members are also actively engaged in many application areas, such as materials sciences, computational chemistry, plasma fusion energy, and quantum computing.

Bio: Sherry Li is a Senior Scientist in the Applied Mathematics and Computational Research Division, Lawrence Berkeley National Laboratory. She has worked on diverse problems in high-performance scientific computations, including parallel computing, sparse matrix computations, high precision arithmetic, and combinatorial scientific computing. She is the lead developer of SuperLU, a widely used sparse direct solver, and has contributed to the development of several other mathematical libraries, including ARPREC, LAPACK, PDSLin, STRUMPACK, and XBLAS. She earned a Ph.D. in Computer Science from UC Berkeley and a B.S. in Computer Science from Tsinghua Univ. in China. She has served on the editorial boards of the SIAM J. Scientific Comput. and ACM Trans. Math. Software, as well as on the program committees of many scientific conferences. She is a SIAM Fellow and an ACM Senior Member.

NERSC: New User Training (2-Day Training)

Who: Rebecca Hartman-Baker, Charles Lively, and Lipi Gupta

Session 1 of 2: June 12, 9 a.m. - 4 p.m.

Session 2 of 2: June 13, 9 a.m. - 4 p.m.

Where: Zoom Only

Please register for this event ahead of time.

NERSC will be hosting a New User Training and Using Perlmutter refresher virtual training event over two half-days on Wednesday and Thursday, June 12-13, 2024. The goal of this training is to introduce new users to NERSC and to provide current NERSC users with a review of using Perlmutter, such as how to build, submit, and execute jobs on Perlmutter and which tools are available. All NERSC users are invited and encouraged to attend this review of best practices for using Perlmutter with hands-on exercises. Topics covered include computational systems, accounts and allocations, the programming environment, running jobs, tools and best practices, and the NERSC data ecosystem.

VIEW VIDEO & ALL PROGRAM MATERIALS AT NERSC.gov

Literature Surveys and Reviews: Where do we stand?

Jean Luca Bez

Who: Jean Luca Bez

When: June 13, 2 p.m. - 3 p.m.

Where: B59-4102

Abstract: Newton himself once stated: "If I have seen further, it is by standing on the shoulders of Giants." The research we conduct always builds upon previous contributions seeking to create something new and valuable to society. Its usefulness is measured in comparison with earlier research in the area. Navigating the myriad of research papers from journals to conferences can be daunting. In this talk, we will cover some strategies and tips for surveying the existing literature to build new contributions and comparing your work with what other researchers are proposing.

Bio: Jean Luca is a Career-Track Data Management Research Scientist at Lawrence Berkeley National Laboratory (LBNL), USA. He is passionate about High-Performance I/O, Parallel I/O, Education, and Competitive Programming. His research focuses on optimizing the I/O performance of scientific applications by exploring access patterns, automatic tuning, and reconfiguration using machine learning techniques, I/O forwarding, I/O scheduling, and novel storage solutions.

Designing and Presenting a Science Poster

Jonathan Carter

Who: Jonathan Carter

When: June 18, 11 a.m. – 12 p.m.

Where: B59-3101

Abstract: During the poster session on August 6th, members of our summer visitor program will get the opportunity to showcase the work and research they have been doing this summer. Perhaps some of you have presented posters before, perhaps not. This talk will cover the basics of poster presentation: designing an attractive format; how to present your information clearly; what to include and what not to include. Presenting a poster is different from writing a report or giving a presentation. This talk will cover the differences, and suggest ways to avoid common pitfalls and make poster sessions work more effectively for you.

Bio: Jonathan Carter is the Associate Laboratory Director for Computing Sciences at Lawrence Berkeley National Laboratory (Berkeley Lab). The Computing Sciences Area at Berkeley Lab encompasses the National Energy Research Scientific Computing Division (NERSC), the Scientific Networking Division (home to the Energy Sciences Network, ESnet) and the Computational Research Division.

Scientific Data Collaborations: Team Science through Data and Software

Shreyas Cholia

Who: Shreyas Cholia

When: June 20, 11 a.m. - 12 p.m.

Where: B59-3101

Abstract: The Scientific Data Division (SciData) at Lawrence Berkeley National Laboratory plays a pivotal role in transforming data-driven discovery and understanding. The SciData Division is engaged in developing and applying innovative data science methods, technologies, and infrastructures, closely collaborating with scientific partners to tackle data-driven scientific challenges. This talk will highlight SciData's role in developing and deploying software solutions and data services that support the complex demands of multidisciplinary scientific collaboration, with an emphasis on sustainable software and data engineering practices. These systems are designed to support a wide range of activities, including data management, AI/ML, data analysis, visualization, FAIR data, user interface research, modeling, and scalable computing. We will highlight collaborations across a wide range of scientific domains, from Earth and environmental sciences and biology to materials science and chemical engineering. Join us to discover how the Scientific Data Division at LBL is driving the future of collaborative research by developing the software and data infrastructures that underpin successful team science.

Bio: Shreyas Cholia is Group Leader for the Integrated Data Systems Group, focused on the data engineering and integration aspects of computational and data analysis systems. He is particularly interested in the interactive aspects of scientific computing at scale. He has extensive experience leading teams and building data systems for scientific projects, including ESS-DIVE, NMDC, and the Materials Project. He also worked for over a decade at NERSC, where he led the science gateway and grid computing efforts. Prior to his appointment at LBL, he was a developer and consultant at IBM. He has a Bachelor’s Degree from Rice University, where he double majored in Computer Science and Cognitive Sciences.

Modeling Antarctic Ice with Adaptive Mesh Refinement

Dan Martin

Who: Dan Martin

When: June 25, 11 a.m. – 12 p.m.

Where: B59-3101

Abstract: The response of the Antarctic Ice Sheet (AIS) remains the largest uncertainty in projections of sea-level rise. The AIS (particularly in West Antarctica) is believed to be vulnerable to collapse driven by warm-water incursion under ice shelves, which causes a loss of buttressing, subsequent grounding-line retreat, and large (potentially up to 4 m) contributions to sea-level rise. Understanding the response of the Earth's ice sheets to forcing from a changing climate has required the development of a new generation of ice sheet models that are much more accurate, scalable, and sophisticated than their predecessors. For example, very fine (finer than 1 km) spatial resolution is needed to resolve ice dynamics around shear margins and grounding lines (the point at which grounded ice begins to float). The LBL-developed BISICLES ice-sheet model uses adaptive mesh refinement (AMR) to enable sufficiently resolved modeling of full-continent Antarctic ice sheet response to climate forcing. This talk will discuss recent progress and challenges in modeling the sometimes-dramatic response of the ice sheet to climate forcing using AMR.

Bio: Dan Martin is a computational scientist and group leader for the Applied Numerical Algorithms Group at Lawrence Berkeley National Laboratory. After earning his Ph.D. in mechanical engineering from U.C. Berkeley, Dan joined ANAG and Berkeley Lab as a post-doc in 1998. He has published in a broad range of application areas including projection methods for incompressible flow, adaptive methods for MHD, phase-field dynamics in materials, and ice sheet modeling. His research involves the development of algorithms and software for solving systems of PDEs using adaptive mesh refinement (AMR) finite volume schemes, high (4th)-order finite volume schemes for conservation laws on mapped meshes, and Chombo development and support. Current applications of interest are developing the BISICLES AMR ice sheet model as a part of the SciDAC-funded ProSPect application partnership, and some development work related to the COGENT gyrokinetic modeling code, which is being developed in partnership with Lawrence Livermore National Laboratory as a part of the Edge Simulation Laboratory (ESL) collaboration.

An Introduction to Quantum Networks and the QUANT-NET Project

Wenji Wu

Who: Wenji Wu

When: June 27, 11 a.m. – 12 p.m.

Where: B59-3101

Abstract: Quantum networks may provide new capabilities for information processing and transport, potentially transformative for science, economy and natural science uses. These capabilities, provably impossible for existing “classical” physics-based networking technologies, are of key interest to many U.S. Department of Energy (DOE) mission areas, such as climate and Earth system science, astronomy, materials discovery, and life sciences.

NERSC Training Event: Crash Course in Supercomputing

Who: NERSC Staff

When: June 28, 9 a.m. - 4 p.m.

Where: B77, Room 3377

Please register for this event ahead of time.

This hybrid training is also open to NERSC, OLCF, and ALCF users. The training is geared towards novice parallel programmers. In this course, students will learn to write parallel programs that can be run on a supercomputer. We begin by discussing the concepts of parallelization before introducing MPI and OpenMP, the two leading parallel programming libraries. Finally, the students will put together all the concepts from the class by programming, compiling, and running a parallel code on one of the NERSC supercomputers. Training accounts will be provided for students who have not yet set up a NERSC account.

VIEW VIDEO & ALL PROGRAM MATERIALS AT NERSC.gov

Performance Study of High-Level Programming Languages for Scientific Computing

Per-Olof Persson

Who: Per-Olof Persson

When: July 2, 11 a.m. - 12 p.m.

Where: B59-3101

Abstract: This talk provides an informal overview of three widely used high-level programming languages for scientific computing: MATLAB, Python, and Julia. It examines their similarities and differences, with a particular emphasis on performance optimization techniques. Through live demonstrations, the talk explores methods such as type stability, vectorization, pass-by-reference, array views, static arrays, and integration with C++/Fortran codes, illustrating that in many cases, near-optimal speed can be achieved. Examples will include typical algorithms from numerical computations, such as the explicit integration of initial value problems and the assembly of finite element stiffness matrices.

Bio: Per-Olof Persson has been a Professor of Mathematics at the University of California, Berkeley, and a Mathematician Faculty Scientist/Engineer at Berkeley Lab since 2008. Before then, he was an Instructor of Applied Mathematics at the Massachusetts Institute of Technology, where he also received his PhD in 2005. In his thesis, Persson developed the DistMesh algorithm, which is now a widely used unstructured meshing technique for implicit geometries and deforming domains. He also worked for several years on the development of commercial numerical software, in the finite element package Comsol Multiphysics. His current research interests are in high-order discontinuous Galerkin methods for computational fluid and solid mechanics. He has developed new efficient numerical discretizations, scalable parallel preconditioners and nonlinear solvers, space-time and curved mesh generators, adjoint formulations for optimization, and IMEX schemes for high-order partitioned multiphysics solvers. He has applied his methods to important real-world problems such as the simulation of turbulent flow problems in flapping flight and vertical axis wind-turbines, quality factor predictions for micromechanical resonators, and noise prediction for aeroacoustic phenomena.

SLIDES NOT AVAILABLE

QC and HPC: the Path from the Experimental to Routine Computing

Anastasiia Butko

Who: Anastasiia Butko

When: July 9, 11 a.m. - 12 p.m.

Where: B50 Auditorium

Abstract: Quantum computing is a promising new technology that can potentially outperform classical machines for various scientific applications. Quantum technologies have evolved dramatically over the past decade, yet they remain in the realm of experimental computing, making them challenging for users to adopt for their applications. Moreover, it is unclear how and when quantum accelerators will become a part of the larger heterogeneous HPC ecosystem. In this talk, we discuss the path for quantum computing from experimental to routine computing.

Bio: Anastasiia Butko, Ph.D. is a Research Scientist in the Computational Research Division at Lawrence Berkeley National Laboratory (LBNL), CA. Her research interests lie in the general area of computer architecture, with particular emphasis on high-performance computing, emerging and heterogeneous technologies, and associated parallel programming and architectural simulation techniques. Broadly, her research addresses the question of how alternative technologies can provide continuing performance scaling in the approaching Post-Moore’s Law era. Her primary research projects include the development of EDA tools for fast superconducting logic design, the development of a classical ISA for quantum processor control, and the development of fast and flexible System-on-Chip generators using the Chisel DSL. Dr. Butko received her Ph.D. in Microelectronics from the University of Montpellier, France (2015). Her doctoral thesis developed fast and accurate simulation techniques for many-core architecture exploration. Her graduate work was conducted within the European project MontBlanc, which aims to design a new supercomputer architecture using low-power embedded technologies. Dr. Butko received her MSc degree in Microelectronics from UM2, France, and MSc and BSc degrees in Digital Electronics from NTUU "KPI", Ukraine. During her master's studies, she participated in the international double-diploma program between the Montpellier and Kiev universities.

Simulating Pulsars and Oceans Across Scales

Hannah Klion

Who: Hannah Klion

When: July 11, 11 a.m. - 12 p.m.

Where: B50 Auditorium

Abstract: In many astrophysical and geophysical systems, small-scale processes drive global dynamics. The naive approach to simulating these systems – resolving small-scale processes in the entire large domain – can be computationally infeasible. I will discuss my work toward high-accuracy, multi-scale simulations of two such systems: the environments around pulsars and Earth’s oceans. Relativistic magnetic reconnection is a source of non-thermal particle acceleration in many high-energy, plasma-dominated, astrophysical systems, such as pulsars and black holes. However, it operates at spatial scales millions of times smaller than the pulsar itself, so fully capturing plasma processes in an unscaled global domain is not feasible. To complement scaled global simulations, I have focused on small box simulations of reconnection where the processes are accurately captured. However, these are still computationally expensive. I will discuss my work using the GPU-accelerated PIC code WarpX to explore the accuracy and potential performance benefits of advanced computational techniques for global pulsar and local reconnection simulations. I will also discuss my work on REMORA, a new performance-portable regional ocean model. Small-scale (<1km) processes are expected to be important to global oceanic flows, but cannot be captured in global ocean simulations. REMORA will couple to global ocean simulations in order to add refinement in regions of particular interest. Its capabilities, such as mesh refinement and tracer particles, also make it a flexible standalone tool for the study of hydrological processes. I will discuss progress toward these goals as well as future directions.

Bio: Hannah Klion is a computational research scientist in the Center for Computational Science and Engineering. Her research focuses on developing high-performance, multi-scale simulations of oceanic and astrophysical systems. She earned her BS in Physics from Caltech (2015) and her PhD in Physics from UC Berkeley (2021). At UC Berkeley, she was a Department of Energy Computational Science Graduate Fellow and a UC Berkeley Physics Theory Fellow.

LaTeX Workshop

Lipi Gupta

Who: Lipi Gupta

When: July 12, 11 a.m. - 12 p.m.

Where: Zoom Only

Abstract: For participants: This is an interactive activity, and anyone participating is encouraged to have access to a LaTeX compiler.

Bio: Lipi Gupta defended her Ph.D. in Physics at the University of Chicago in July 2021. She was awarded an Office of Science Graduate Student Research Program award from the US Department of Energy to complete her Ph.D. research at the SLAC National Accelerator Lab in Menlo Park, California. At SLAC, Lipi worked on studying how to apply machine learning techniques to improve particle accelerator operation and control. Lipi also has a background in nonlinear beam dynamics, focusing on sextupole magnet resonance elimination, through her research at the University of Chicago and research conducted while earning a Bachelor of Arts in Physics with a minor in mathematics at Cornell University.

Roofline Variants to Understand Workloads and System Performance

Nan Ding

Who: Nan Ding

When: July 16, 11 a.m. - 12 p.m.

Where: B59-3101

Abstract: The classic FLOP-centric roofline is a popular performance model for determining whether a workflow is compute-bound or memory-bound. However, it is inappropriate for emerging workloads that perform more integer operations than floating point operations or are messaging-heavy, as well as for workflows defined as the flow of applications that need to be executed on HPC resources. To that end, we have a list of roofline variants (Instruction GPU Roofline, Message Roofline, and Workflow Roofline) to reason about performance from different aspects.

Bio: Nan Ding, Ph.D., is a Research Scientist in the Applied Mathematics and Computational Research Division (AMCR) at Lawrence Berkeley National Laboratory (LBNL), CA. Her research interests include high-performance computing, performance modeling, and computer architecture. Broadly, she develops performance models to understand the inherent bottlenecks in today's systems and predict the performance and bottlenecks of tomorrow's exascale systems. Moreover, her research focuses on developing technologies and algorithms that enhance the performance, scalability, and energy efficiency of applications running on the Department of Energy's multicore-, manycore-, and accelerator-based supercomputers. Dr. Ding received her Ph.D. in computer science from Tsinghua University, China (2018).

Simulations and Modeling in the Era of Precision Cosmology

Zarija Lukić

Who: Zarija Lukić

When: July 18, 11 a.m. - 12 p.m.

Where: B59-3101

Abstract: Ongoing and future cosmology experiments are poised to tackle some of the most profound questions in fundamental science. These questions include explaining the nature of dark energy and dark matter, and testing particle physics and the theory of gravity on the largest observable scales in the universe. However, interpreting the results of these experiments, which span measurements across multiple temporal epochs and length scales, presents a formidable challenge. It involves solving an inverse problem: deducing the underlying physics from the acquired observational data. Numerical simulations of the universe provide the most direct approach to addressing this challenge. To rise to this challenge, we have developed the Nyx code, which demonstrates remarkable efficiency and scalability. Yet despite such computational successes, creating "virtual universes" remains extremely expensive, especially when high fidelity is required. Consequently, our research extends to developing emulators (approximators constructed from a finite set of full-physics simulations), as well as to combining physical simulations with generative ML models. These serve as cost-effective surrogates for expensive simulations, allowing us to model scales which would otherwise be out of reach. In this talk, I will review the ongoing simulations and modeling effort in the Computational Cosmology Center.

Bio: Zarija Lukić is a staff scientist and a group lead of the Computational Cosmology Center. He earned his PhD in astrophysics from the University of Illinois at Urbana-Champaign and has been a postdoctoral researcher in the Theoretical Division of Los Alamos National Laboratory and the Computational Research Division of Lawrence Berkeley Lab. The main topic of Zarija's research is the large-scale structure of the Universe, with the focus on numerical simulations and ML/AI generative methods. An essential component of this research is developing new computational methods for modeling and interpreting physical systems: building simulation codes that can efficiently run on the largest supercomputers, as well as methods for extracting scientific insights using simulation models and observational data from sky surveys. Zarija is currently the P.I. on the LDRD project "Developing the next-generation of mock skies for cosmology" and the LBL P.I. on SciDAC-5 project "Enabling Cosmic Discoveries in the Exascale Era". Over the past 20+ years, Zarija was the lead on many computational projects using world-leading supercomputers, including those at NERSC, the National Center for Supercomputing Applications (NCSA), the Los Alamos Supercomputer Center, the Oak Ridge Leadership Computing Facility (OLCF), and the Argonne Leadership Computing Facility (ALCF).

Scientific Applications in the NESAP Program

Andrew Naylor, Mukul Dave, and Wenbin Xu

Who: Andrew Naylor, Mukul Dave, and Wenbin Xu

When: July 23, 11 a.m. – 12 p.m.

Where: B59-3101

Andrew Naylor: As scientific experiments are creating larger and more complex datasets, there is an ever increasing need to accelerate scientific workflows. Recent advancements in machine learning (ML) algorithms, coupled with the power of cutting-edge GPUs, have provided significant performance gains. However, further optimisation of computational efficiency is crucial to minimise processing latency and resource requirements. The physics community is exploring ML inference-as-a-service (IaaS) to utilise hardware more efficiently. This talk will introduce ML IaaS and explore two NESAP projects employing IaaS on the NERSC Perlmutter supercomputer.

Andrew Naylor is a NERSC NESAP Postdoctoral Fellow specializing in integrating AI into scientific workflows for high-performance computing (HPC) and cloud environments. He has collaborated with the ATLAS and CMS experiments focusing on implementing and optimising machine learning inference-as-a-service for physics analysis. Currently, he is working with CMS to explore efficient GPU utilisation with the SONIC framework on the NERSC Perlmutter supercomputer. Andrew received his Ph.D. in experimental particle physics from the University of Sheffield in 2022, where his research involved simulating and analyzing background sources in the LUX and LZ dark matter experiments.

Mukul Dave: Wind farm simulations require data from mesoscale atmospheric simulations as initial and boundary conditions for the microscale turbine environments. The Energy Research and Forecasting (ERF) code bridges this scale gap and provides an efficient GPU-enabled parallel implementation with adaptive mesh refinement through the underlying AMReX framework. I will present my takeaways from optimizing the performance of ERF and outline strategies that reduce the cost of communication among parallel processes. I will also describe my current efforts for coupling ERF with a turbine solver, which will enable more seamless wind farm simulations.

As a NESAP for Simulation postdoc at NERSC, Mukul Dave contributes to research software development, enabling and porting codes to make use of exascale computing platforms involving the latest accelerators. Mukul completed an undergraduate program in Mechanical Engineering (ME) from Nirma University in his hometown of Ahmedabad, Gujarat, India in 2014, followed by a master's in ME from Arizona State University in 2016 with a focus on high performance computing, numerical methods, and fluid-thermal sciences. He worked as an HPC Application Specialist at the Center for Computation and Visualization at Brown University from 2016-18, helping researchers use the shared compute cluster. Mukul's doctoral research from 2018-22 at the University of Wisconsin-Madison involved simulating cross-flow turbines that rotate on an axis perpendicular to the flow to harvest energy from flowing wind or water.

Wenbin Xu: Discovering novel materials and exploring chemical reaction networks are central tasks in modern chemistry research. Atomic modeling based on quantum chemistry calculations, particularly Density Functional Theory (DFT), provides valuable microscopic insights for experiments. However, these calculations are prohibitively computationally expensive for exploring vast chemical design spaces. Machine learning, especially Graph Neural Networks (GNNs), has shifted research paradigms by enabling atomic simulations at unprecedented time and length scales. In this talk, I will present my current efforts to use graph neural networks to expedite the discovery of magnetic materials and the construction of complex reaction networks.

Wenbin Xu is a NESAP for Learning postdoctoral fellow at NERSC with research interests in AI for chemistry and catalysis. His current research focuses on leveraging large-scale deep-learning techniques to explore chemical reaction networks and discover high-performing catalysts. He collaborates with domain scientists from The Center for High Precision Patterning Science at Lawrence Berkeley Lab and the Open Catalyst Project team at Carnegie Mellon University and Meta AI. Prior to joining NERSC, Wenbin Xu received his Ph.D. in computational chemistry from the Technical University of Munich and the Fritz Haber Institute of the Max Planck Society in 2022. His doctoral research focused on developing physics-inspired machine-learning models for heterogeneous catalyst discovery.

AI and the Future of Precision Medicine

Who: Silvia Crivelli

When: July 25 (CANCELLED)

Abstract: Advances in AI and HPC, combined with access to 1) a vast, private multimodal dataset containing electronic health records from millions of patients over a period of more than 23 years, and 2) multiple publicly available databases containing hundreds of social and environmental determinants of health, have allowed us to advance precision medicine. We are developing predictive models for outcomes such as suicide risk, obstructive sleep apnea, and lung cancer. These models allow for a holistic representation of the state of a patient, from their vital signs and medications, to their housing stability and social connections, to the unemployment rate, air quality, and heat waves of the place where they live. In this talk I will discuss how an interagency agreement between the Department of Energy (DOE) and the Department of Veterans Affairs (VA) has made this possible by allowing teams of computational scientists at various DOE labs to get access to the largest healthcare dataset in the country and to apply data science and AI technologies at large scale. The agreement has also promoted a co-design, team-science approach between DOE lab researchers and VA physicians representing a wide range of specialties and healthcare systems. This approach makes sure that the models have operational use and can be adopted to save lives and distribute resources efficiently.

Bio: Dr. Crivelli has conducted research at the intersection of science, high-performance computing, human-computer interaction, and applied mathematics for more than twenty-five years. Her research has focused on two main goals: 1) to bring scientists together, both seasoned and young and from all walks of science, to tackle long-standing, extremely hard, and multidisciplinary problems and 2) to develop methods and software tools that empower physicians and researchers to predict the behavior of biological systems and, more recently, healthcare outcomes. Her interest in developing AI technologies for scientific research and for societal benefit resulted in projects tackling a wide range of topics, which include the development of protein structure prediction methods, the creation of innovative software tools for protein and drug design, and the development of predictive models to decrease the number of deaths due to suicide and overdose. Her favorite professional activity is to mentor students. She has tirelessly worked on the mission to diversify the workforce to include more women and people from underrepresented groups. She believes that progress in science will come from the rich combination of ideas that only a highly diverse community can create. She earned a Ph.D. in Computer Science from the University of Colorado, Boulder, and an M.S. in Applied Mathematics from the Universidad Nacional del Litoral, Argentina. She was a postdoctoral fellow at the University of California, Berkeley and the Lawrence Berkeley National Laboratory (LBNL).

Foundational Methods for Foundational Models for Scientific Machine Learning

Who: Michael Mahoney

When: July 30, 11 a.m. - 12 p.m.

Where: B59-3101

Abstract: The response of the Antarctic Ice Sheet (AIS) remains the largest uncertainty in projections of sea-level rise. The AIS (particularly in West Antarctica) is believed to be vulnerable to collapse driven by warm-water incursion under ice shelves, which causes a loss of buttressing, subsequent grounding-line retreat, and large (potentially up to 4 m) contributions to sea-level rise. Understanding the response of the Earth's ice sheets to forcing from a changing climate has required the development of a new generation of ice sheet models that are much more accurate, scalable, and sophisticated than their predecessors. For example, very fine (finer than 1 km) spatial resolution is needed to resolve ice dynamics around shear margins and grounding lines (the point at which grounded ice begins to float). The LBL-developed BISICLES ice-sheet model uses adaptive mesh refinement (AMR) to enable sufficiently resolved modeling of full-continent Antarctic ice sheet response to climate forcing. This talk will discuss recent progress and challenges in modeling the sometimes-dramatic response of the ice sheet to climate forcing using AMR.

Bio: Michael W. Mahoney is at the University of California at Berkeley in the Department of Statistics and at the International Computer Science Institute (ICSI). He is also an Amazon Scholar as well as head of the Machine Learning and Analytics Group at the Lawrence Berkeley National Laboratory. He works on algorithmic and statistical aspects of modern large-scale data analysis. Much of his recent research has focused on large-scale machine learning, including randomized matrix algorithms and randomized numerical linear algebra, scalable stochastic optimization, geometric network analysis tools for structure extraction in large informatics graphs, scalable implicit regularization methods, computational methods for neural network analysis, physics-informed machine learning, and applications in genetics, astronomy, medical imaging, social network analysis, and internet data analysis. He received his PhD from Yale University with a dissertation in computational statistical mechanics, and he has worked and taught at Yale University in the mathematics department, at Yahoo Research, and at Stanford University in the mathematics department. Among other things, he was on the national advisory committee of the Statistical and Applied Mathematical Sciences Institute (SAMSI), he was on the National Research Council's Committee on the Analysis of Massive Data, he co-organized the Simons Institute's fall 2013 and 2018 programs on the foundations of data science, he ran the Park City Mathematics Institute's 2016 PCMI Summer Session on The Mathematics of Data, he ran the biennial MMDS Workshops on Algorithms for Modern Massive Data Sets, and he was the Director of the NSF/TRIPODS-funded FODA (Foundations of Data Analysis) Institute at UC Berkeley.

Behavioral-Based Interviewing Workshop: Effective Interviewing Techniques

Who: Bill Cannan and Nicolette Dunston

When: August 1, 11 a.m. - 12 p.m.

Where: B59-3101

Abstract: Past behavior is the best predictor of future performance! Behavioral-based interviewing is a competency-based interviewing technique in which employers evaluate a candidate's past behavior in different situations in order to predict their future performance. This technique is the new norm for academic and industry-based organizations searching for talent. This workshop will provide information and tools to help you prepare for your next interview, including an overview of the behavioral-based interview process, sample questions, and preparation techniques.

Bill Cannan is the Principal HR Division Partner who supports Computing Sciences and IT. Bill has over 20 years of HR-related experience as a recruiter and HR Generalist in both industry and National Lab environments. This includes over 12 years at Berkeley Lab and three years with Lawrence Livermore National Lab. Bill is responsible for providing both strategic and hands-on full-cycle Human Resources support and consultation to employees and managers.

Nicolette Carroll is a Staff HR Division Partner who supports Computing Sciences and IT.

Summer Program Poster Session

Who: All Summer Program Participants are Welcome to Attend

When: August 6, 11 a.m. - 12 p.m.

Where: B59-3101
