New Methods

Technological developments in recent decades make it possible for us to measure, sense, and monitor the world in unprecedented detail, generating enormous quantities of data at record-breaking rates. Industrial needs have been an important driver of new machine learning methods for coping with this data glut, but scientific data, and the goals of science, differ fundamentally from those of industry. Machine learning methods developed for the Internet and social media cannot deliver on the promise of scientific machine learning. Berkeley Lab researchers are developing a new generation of algorithmic and statistical tools designed to be broadly applicable to large-scale data acquisition across a diverse range of scientific domains. We are also tackling the considerable challenges and opportunities for interdisciplinary research at the interface of computer science, statistics, and applied mathematics.

Projects

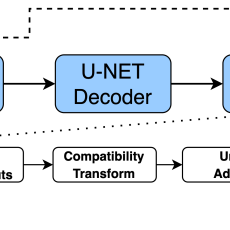

Adaptable Deep Learning and Probabilistic Graphical Model System for Semantic Segmentation

Semantic segmentation algorithms based on deep learning architectures have been applied to a diverse set of problems. As new methodologies have emerged to push the state of the art in this field forward, the need for powerful, user-friendly software has increased significantly. We introduce a new encoder-decoder system that combines convolutional neural networks (CNNs) and conditional random fields (CRFs) in an end-to-end training approach, making it adaptable to complex scientific datasets and allowing the model to scale. Fully integrating CNNs with CRFs enables more efficient training and more accurate segmentation results, in addition to learning representations of the data. Moreover, the CRF model makes it possible to incorporate prior knowledge about scientific datasets, which can support better explainability, interpretability, and uncertainty quantification. Contacts: Talita Perciano, Matthew Avaylon
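The CNN-CRF combination described above can be illustrated by the mean-field inference step that CRF layers commonly run on top of CNN class scores. The NumPy sketch below is not the team's system: it assumes a Potts pairwise term on a 4-connected pixel grid, a hand-set weight `w`, and hypothetical function names, and it omits training entirely. In end-to-end approaches, iterations like these are typically unrolled as differentiable layers so that CNN and CRF parameters can be learned jointly.

```python
import numpy as np

# Sketch under stated assumptions (Potts pairwise term, 4-neighborhood);
# not the project's encoder-decoder implementation.

def softmax(x, axis=0):
    """Numerically stable softmax along the class axis."""
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def mean_field_crf(unary_logits, w=1.0, n_iters=5):
    """Refine per-pixel class scores with a Potts-model CRF on a 4-connected grid.

    unary_logits: array of shape (K, H, W), e.g. scores from a CNN decoder.
    w: strength of the pairwise term that rewards agreeing with neighbors.
    """
    q = softmax(unary_logits, axis=0)          # initial marginals from the CNN
    for _ in range(n_iters):
        # Message passing: sum each neighbor's current label distribution
        msg = np.zeros_like(q)
        msg[:, 1:, :] += q[:, :-1, :]          # neighbor above
        msg[:, :-1, :] += q[:, 1:, :]          # neighbor below
        msg[:, :, 1:] += q[:, :, :-1]          # neighbor to the left
        msg[:, :, :-1] += q[:, :, 1:]          # neighbor to the right
        # Potts compatibility: labels favored by neighbors get a bonus
        q = softmax(unary_logits + w * msg, axis=0)
    return q

# Toy 5x5 input: the CNN favors class 0 everywhere except one noisy center pixel
logits = np.zeros((2, 5, 5))
logits[0] = 1.0
logits[1, 2, 2] = 1.5
refined = mean_field_crf(logits, w=2.0).argmax(axis=0)
```

From the CNN scores alone, the center pixel would be labeled class 1; the pairwise term pulls it back to the label favored by all of its neighbors.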

Union of Intersections

Parametric models are ubiquitous in science, and the parameters inferred when these models are fit to data can provide insight into the underlying data-generating process. Union of Intersections is a novel statistical machine learning framework that improves feature selection and parameter estimation in a wide range of parametric models fit to data. Together, these enhanced inference capabilities lead to improved predictive performance and model interpretability. Contact: Kristofer Bouchard
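For linear regression, the two parts of the framework can be sketched as follows: a selection step that intersects Lasso supports across bootstrap resamples, one candidate support per regularization strength, and an estimation step that fits unregularized least squares on each candidate support, keeps the best one per bootstrap by out-of-bag error, and then combines ("unions") those estimates. This is a simplified NumPy-only sketch under assumptions: the coordinate-descent Lasso, the bootstrap counts, the lambda grid, and median aggregation are illustrative choices, and the function names are hypothetical, not the published implementation.

```python
import numpy as np

# Simplified UoI-style sketch for linear regression; all settings are assumptions.

def lasso_cd(X, y, lam, n_iter=100):
    """Coordinate-descent Lasso: minimize (1/2n)||y - Xb||^2 + lam * ||b||_1."""
    n, p = X.shape
    b = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0) / n
    r = y.astype(float).copy()                  # residual y - X b
    for _ in range(n_iter):
        for j in range(p):
            r += X[:, j] * b[j]                 # remove feature j from the fit
            rho = X[:, j] @ r / n
            b[j] = np.sign(rho) * max(abs(rho) - lam, 0.0) / col_sq[j]
            r -= X[:, j] * b[j]                 # add it back with the new weight
    return b

def uoi_lasso(X, y, lams, n_boot_sel=10, n_boot_est=10, seed=0):
    rng = np.random.default_rng(seed)
    n, p = X.shape
    # Selection: for each lambda, keep features chosen in every bootstrap (intersection)
    supports = []
    for lam in lams:
        keep = np.ones(p, dtype=bool)
        for _ in range(n_boot_sel):
            idx = rng.integers(0, n, n)
            keep &= lasso_cd(X[idx], y[idx], lam) != 0
        supports.append(keep)
    # Estimation: per bootstrap, fit OLS on each support, keep the lowest out-of-bag error
    estimates = []
    for _ in range(n_boot_est):
        idx = rng.integers(0, n, n)
        oob = np.setdiff1d(np.arange(n), idx)
        best_err, best_b = np.inf, np.zeros(p)
        for s in supports:
            b = np.zeros(p)
            if s.any():
                b[s] = np.linalg.lstsq(X[idx][:, s], y[idx], rcond=None)[0]
            err = np.mean((y[oob] - X[oob] @ b) ** 2)
            if err < best_err:
                best_err, best_b = err, b
        estimates.append(best_b)
    # Combine the per-bootstrap estimates (here, by their median)
    return np.median(estimates, axis=0)
```

Intersecting supports favors sparse, stable feature sets, while the unpenalized refit avoids the shrinkage bias of the Lasso coefficients themselves.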

Uncovering Dynamics

Natural systems are dynamic. Scientists often want to uncover low-dimensional, dynamic latent structure embedded in high-dimensional, noisy observations. However, most unsupervised learning methods focus on capturing variance, which may not be related to dynamics. We have developed novel dimensionality-reduction methods that optimize an objective designed directly for dynamic data: the predictive information between the past and future of a time series. We have created two methods based on maximizing predictive information: a linear method, Dynamical Components Analysis (DCA), and a non-linear method, Compressed Predictive Information Coding (CPIC). Contact: Kristofer Bouchard
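To show why predictive information differs from variance, the sketch below evaluates it for a fixed linear projection. It assumes the projected series is stationary and Gaussian, in which case the mutual information between past and future windows of length T has the closed form `log det(S_T) - 0.5 * log det(S_2T)`, where `S_T` is the covariance of T consecutive projected samples. This Gaussian form, the function name, and the toy data are assumptions of the sketch, not necessarily DCA's exact estimator, and the optimization over projections is omitted.

```python
import numpy as np

# Sketch assuming a stationary Gaussian series; evaluates the objective only.

def gaussian_predictive_info(X, V, T):
    """Predictive information (nats) between past and future windows of length T
    of the projected series X @ V."""
    Y = X @ V                                   # (n_samples, d) projection
    n, d = Y.shape
    # Each row stacks 2T consecutive projected samples: [y_t, ..., y_{t+2T-1}]
    Z = np.stack([Y[t:t + 2 * T].ravel() for t in range(n - 2 * T + 1)])
    cov_2T = np.cov(Z, rowvar=False)            # covariance of 2T-sample windows
    cov_T = cov_2T[:T * d, :T * d]              # covariance of T-sample windows
    _, logdet_T = np.linalg.slogdet(cov_T)
    _, logdet_2T = np.linalg.slogdet(cov_2T)
    # Gaussian mutual information I(past; future)
    return logdet_T - 0.5 * logdet_2T

# Toy data: a slow, low-variance oscillation plus high-variance white noise
rng = np.random.default_rng(0)
t = np.arange(5000)
slow = np.sin(0.05 * t) + 0.1 * rng.normal(size=t.size)
noise = 3.0 * rng.normal(size=t.size)
X = np.column_stack([slow, noise])
pi_slow = gaussian_predictive_info(X, np.array([[1.0], [0.0]]), T=3)
pi_noise = gaussian_predictive_info(X, np.array([[0.0], [1.0]]), T=3)
```

The noise direction carries far more variance, so a variance-maximizing method would pick it, yet its predictive information is near zero, while the slow direction is highly predictive.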

Topological Optimization

Optimization, a key tool in machine learning and statistics, relies on regularization to reduce overfitting. Traditional regularization methods control a norm of the solution to ensure its smoothness. We propose a method that instead builds on insights from topological data analysis. This approach enables faster and more precise topological regularization, whose benefits we demonstrate experimentally. We also extend the method to more general topological losses. Contact: Dmitriy Morozov
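To make "topological regularization" concrete, the sketch below computes the 0-dimensional persistence pairs of the sublevel sets of a 1D signal using union-find and the elder rule, then penalizes the total persistence of small features (bumps shallower than `eps`). The gradient of each persistence pair touches only its birth and death points. This is a generic illustration under assumptions (1D signal, plain gradient steps, hypothetical function names), not the group's faster optimization method.

```python
import numpy as np

# Generic persistence-based loss on a 1D signal; not the project's method.

def sublevel_persistence_1d(f):
    """Finite 0-dim persistence pairs (birth_idx, death_idx) of a 1D signal.

    Points are added in order of increasing value; union-find tracks connected
    components, and each component's root is its minimum.
    """
    n = len(f)
    parent = -np.ones(n, dtype=int)          # -1 marks points not yet added
    pairs = []

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]    # path halving
            i = parent[i]
        return i

    for i in np.argsort(f, kind="stable"):
        parent[i] = i
        for j in (i - 1, i + 1):
            if 0 <= j < n and parent[j] != -1:
                ri, rj = find(i), find(j)
                if ri == rj:
                    continue
                # Elder rule: the component with the higher minimum dies at f[i]
                young, old = (ri, rj) if f[ri] > f[rj] else (rj, ri)
                if f[i] > f[young]:          # skip zero-persistence pairs
                    pairs.append((young, i))
                parent[young] = old
    return pairs

def small_feature_loss(f, eps):
    """Total persistence of features shallower than eps, and its gradient w.r.t. f."""
    loss, grad = 0.0, np.zeros_like(f)
    for b, d in sublevel_persistence_1d(f):
        pers = f[d] - f[b]
        if pers < eps:
            loss += pers
            grad[d] += 1.0                   # d(pers)/d f[death] = +1
            grad[b] -= 1.0                   # d(pers)/d f[birth] = -1
    return loss, grad

# Gradient descent flattens the shallow bump (persistence 1) but not the deep one (persistence 3)
f = np.array([0.0, 2.0, 1.0, 3.0, -1.0, 4.0])
for _ in range(10):
    loss, grad = small_feature_loss(f, eps=1.5)
    f = f - 0.1 * grad
```

Unlike a norm penalty, this loss leaves every point that is not a critical value of a small feature untouched.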

ROI Hide-and-Seek

We created the region of interest (ROI) Hide-and-Seek protocol, an algorithm for verifying an arbitrary image classification task. Here, it hides the ROI, the lungs in a chest X-ray image, and then classifies the image as pneumonia, normal, or COVID-19. Surprisingly, classification performance remained high even though important parts of the image had been removed. The results showed that naïve interpretations of models trained on potentially biased data sources could lead to false COVID-19 diagnoses, since the models still performed well after the lungs themselves were removed from the input. This work raises awareness of the role that data sources and accuracy metrics play in current deep learning (DL) classification of lung imaging. Contact: Dani Ushizima
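The core check of the protocol (blank out the ROI, re-run the classifier, and see whether accuracy survives) can be sketched on synthetic data. Everything here is an assumption for illustration: toy 16x16 images, a central square standing in for the lungs, a bright corner tag standing in for a source-specific artifact, and a nearest-centroid classifier standing in for a trained DL model. It is not the authors' implementation.

```python
import numpy as np

# Toy illustration of ROI hiding; data, ROI, and classifier are all hypothetical.
SIZE = 16
ROI = np.zeros((SIZE, SIZE), dtype=bool)
ROI[4:12, 4:12] = True                     # stand-in for the lung region

def make_images(n, artifact, rng):
    """Class 1 has a faint signal inside the ROI; optionally a corner tag leaks the label."""
    y = rng.integers(0, 2, n)
    X = rng.normal(0.0, 0.5, (n, SIZE, SIZE))
    X[:, ROI] += 0.2 * y[:, None]
    if artifact:
        X[:, :2, :2] += 2.0 * y[:, None, None]   # bright tag only on class-1 images
    return X, y

def hide_roi(images, roi_mask, fill=0.0):
    """Copy of the images with the region of interest blanked out."""
    hidden = images.copy()
    hidden[:, roi_mask] = fill
    return hidden

def fit_centroids(X, y):
    """Nearest-centroid classifier on flattened images."""
    classes = np.unique(y)
    return classes, np.stack([X[y == c].mean(axis=0) for c in classes])

def predict(model, X):
    classes, centroids = model
    dists = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return classes[dists.argmin(axis=1)]

def hide_and_seek(artifact, seed=0):
    """Accuracy on original test images and on ROI-hidden test images."""
    rng = np.random.default_rng(seed)
    X_train, y_train = make_images(2000, artifact, rng)
    X_test, y_test = make_images(1000, artifact, rng)
    model = fit_centroids(X_train.reshape(len(X_train), -1), y_train)

    def accuracy(images):
        return np.mean(predict(model, images.reshape(len(images), -1)) == y_test)

    return accuracy(X_test), accuracy(hide_roi(X_test, ROI))
```

With the corner tag present, `hide_and_seek(artifact=True)` keeps high accuracy even on ROI-hidden images, the warning sign the protocol looks for; without the tag, hiding the ROI drops accuracy to roughly chance.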

Statistical Mechanics for Interpretable Learning Algorithms

Machine learning has the potential to revolutionize scientific discovery, but it also has limitations. One of them is interpretability: as machine learning networks grow larger and more complex, understanding how they behave and how they reach their results becomes difficult, if not impossible. This project is using statistical mechanics to interpret how popular machine learning algorithms behave, give users more control over these systems, and help them reach results faster. As a proof of concept, the project is working closely with Berkeley Lab’s Distributed Acoustic Sensing project. Another challenge is that data are the only guide for these tools. One way to improve interpretability for scientific applications is to integrate the laws of physics into the learning process, so the project will also explore machine learning algorithms informed by physics. Contacts: Michael Mahoney, John Wu, Jonathan Ajo-Franklin
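One common way to integrate a physical law into learning is to add a penalty on the residual of the governing equation to the data-fitting loss. The sketch below does this for a hypothetical decay law du/dt = -k u with a polynomial model, which keeps the whole problem a linear least-squares fit. The law, the polynomial basis, the collocation grid, and the weight `lam` are illustrative assumptions, not this project's method.

```python
import numpy as np

# Soft physics penalty for a hypothetical law du/dt + k u = 0; an illustration only.

def physics_informed_fit(t_data, u_data, t_colloc, k=1.0, degree=6, lam=1.0):
    """Fit u(t) = sum_j c_j t^j by minimizing
    ||A c - u_data||^2 + lam * ||B c||^2, where B c is the ODE residual."""
    powers = np.arange(degree + 1)
    A = t_data[:, None] ** powers                      # basis values at data points
    # d/dt t^j = j t^(j-1); the j = 0 column is zero
    dbasis = powers * t_colloc[:, None] ** np.maximum(powers - 1, 0)
    B = dbasis + k * t_colloc[:, None] ** powers       # residual of du/dt + k u
    lhs = np.vstack([A, np.sqrt(lam) * B])
    rhs = np.concatenate([u_data, np.zeros(len(t_colloc))])
    coef, *_ = np.linalg.lstsq(lhs, rhs, rcond=None)
    return coef

# Observations only on [0, 0.5]; the physics term is enforced out to t = 2
t_data = np.linspace(0.0, 0.5, 6)
u_data = np.exp(-t_data)
coef = physics_informed_fit(t_data, u_data, np.linspace(0.0, 2.0, 41))
u_at_2 = np.polyval(coef[::-1], 2.0)                   # extrapolated prediction
```

The physics term is what lets the fit extrapolate beyond the data. Because the law enters only as a penalty, it is satisfied approximately rather than exactly.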

The Chemical Universe through the Eyes of Generative Adversarial Neural Networks

This project is developing generative machine learning models that can discover new scientific knowledge about molecular interactions and structure-function relationships in the chemical sciences. The aim is to create a deep learning network that can not only predict properties from structural information but also tackle the “inverse problem,” that is, deducing structural information from properties. To demonstrate the power of the neural network, we focus on bond breaking in mass spectrometry, combining experimental data with computational chemistry data generated on high-performance computing (HPC) systems. The project is funded by a Lab Directed Research and Development (LDRD) grant. Contact: Bert de Jong (de Jong on the Web)

Learning Continuous Models for Continuous Physics

Machine learning approaches for modeling dynamical systems are typically trained on discrete data, using methods that are not aware of the underlying continuity properties. The resulting models may fail to capture the continuous dynamics of the system of interest and therefore perform poorly outside the training data. To address this challenge, we develop a convergence test, based on methods from numerical analysis, that validates whether a neural network has correctly learned the underlying continuous dynamics. ML models that pass this test interpolate and extrapolate better on a number of dynamical-system prediction tasks. Contacts: Aditi Krishnapriyan, Michael Mahoney
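The general idea of a numerical-analysis convergence test can be sketched as follows: integrate a vector field (in practice, the learned one) with a fixed-order scheme at successively halved step sizes and compare the observed order of convergence with the scheme's nominal order, which is 4 for classical RK4. We assume here that a field representing genuinely continuous dynamics shows roughly the nominal order and that a large deviation is the failure signal; the project's exact test may differ, and the analytic fields below are hypothetical placeholders for a trained model.

```python
import numpy as np

# Convergence-order check; the vector fields are placeholders for a learned model.

def rk4_solve(f, x0, T, dt):
    """Integrate dx/dt = f(x) from t = 0 to t = T with classical RK4."""
    x = np.asarray(x0, dtype=float)
    for _ in range(int(round(T / dt))):
        k1 = f(x)
        k2 = f(x + 0.5 * dt * k1)
        k3 = f(x + 0.5 * dt * k2)
        k4 = f(x + dt * k3)
        x = x + dt / 6.0 * (k1 + 2 * k2 + 2 * k3 + k4)
    return x

def observed_order(f, x0, T, dt):
    """Observed order from step sizes dt, dt/2, dt/4: if solutions converge at
    order p, successive differences shrink by a factor of about 2**p."""
    a, b, c = (rk4_solve(f, x0, T, h) for h in (dt, dt / 2, dt / 4))
    return np.log2(np.linalg.norm(a - b) / np.linalg.norm(b - c))

def decay(x):                       # placeholder vector field
    return -x

def oscillator(x):                  # placeholder vector field
    return np.array([x[1], -x[0]])

order_decay = observed_order(decay, [1.0], T=1.0, dt=0.2)
order_osc = observed_order(oscillator, [1.0, 0.0], T=1.0, dt=0.2)
```

To apply the check to a trained network, its forward function would replace `decay` or `oscillator`.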

Learning Differentiable Solvers for Systems with Hard Constraints

Machine learning has become an increasingly common approach to modeling physical systems. However, many challenges remain: incorporating physical information into the machine learning process can often make the optimization difficult. We design a differentiable neural network layer that enforces physical laws exactly, and we demonstrate that it solves many instances of parameterized partial differential equations (PDEs) efficiently and accurately. Contacts: Aditi Krishnapriyan, Michael Mahoney
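A simple example of a differentiable layer that enforces constraints exactly is the orthogonal projection onto an affine constraint set A x = b. The project's layer targets parameterized PDEs and may be built quite differently; this sketch assumes linear constraints, and the grid, boundary conditions, and mass constraint below are hypothetical.

```python
import numpy as np

# Exact linear-constraint projection layer; the constraints below are illustrative.

def project_onto_constraints(z, A, b):
    """Return the point closest to z (Euclidean norm) that satisfies A x = b exactly.

    Solves the KKT system of  min ||x - z||^2  s.t.  A x = b, giving
    x = z - A^T (A A^T)^{-1} (A z - b). The map is affine in z, so gradients
    pass through it and it can serve as a final network layer.
    """
    correction = np.linalg.solve(A @ A.T, A @ z - b)
    return z - A.T @ correction

# Hypothetical example: a raw network output on a 1D grid must satisfy
# zero boundary values and a fixed total mass (a discrete conservation law).
n, h = 11, 0.1
A = np.zeros((3, n))
A[0, 0] = 1.0                      # u(0) = 0
A[1, -1] = 1.0                     # u(1) = 0
A[2, :] = h                        # h * sum(u) = 0.5
b = np.array([0.0, 0.0, 0.5])
raw = np.random.default_rng(0).normal(size=n)
u = project_onto_constraints(raw, A, b)
```

Unlike a penalty term, the projection satisfies the constraints to floating-point precision for any input, so the optimizer never has to trade constraint violation against data fit.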

News

New Encoder-Decoder Overcomes Limitations in Scientific Machine Learning

A team of Berkeley Lab computational researchers has introduced a new Python-based encoder-decoder framework for complex scientific datasets that addresses issues in deep learning semantic segmentation, including widespread problems with adaptability.
