Active Learning

Active learning is a branch of machine learning in which a learning algorithm can interactively query a user, or another information source, to label data. At Berkeley Lab, active learning arises most naturally in the design of optimal experiments, whether on instruments or on computers. For example, given an experimental setup, what data should be collected for optimal learning? In many cases, these active learning methods can be derived directly from stochastic-process-driven uncertainty quantification, e.g., placing probability density functions on a set of function values, in which case conditioning on the observations yields a full picture of the uncertainties across the domain.
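As a minimal sketch of that idea, the Python example below places a zero-mean Gaussian process prior on the function values, conditions it on a few observations, and proposes the candidate point with the largest posterior variance as the next measurement. The kernel choice, its length scale, the noise level, and all function names are assumptions made for illustration; they are not taken from any Berkeley Lab software.

```python
import numpy as np

def rbf_kernel(a, b, length_scale=0.2, signal_var=1.0):
    """Squared-exponential covariance between two sets of 1-D points."""
    diff = a[:, None] - b[None, :]
    return signal_var * np.exp(-0.5 * (diff / length_scale) ** 2)

def gp_posterior(x_obs, y_obs, x_query, noise_var=1e-6):
    """Posterior mean and variance of a zero-mean GP at x_query."""
    k_oo = rbf_kernel(x_obs, x_obs) + noise_var * np.eye(len(x_obs))
    k_qo = rbf_kernel(x_query, x_obs)
    chol = np.linalg.cholesky(k_oo)
    # Solve K alpha = y using the Cholesky factor K = L L^T.
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_obs))
    mean = k_qo @ alpha
    # Posterior variance = prior variance minus the part explained by the data.
    v = np.linalg.solve(chol, k_qo.T)
    var = rbf_kernel(x_query, x_query).diagonal() - np.sum(v ** 2, axis=0)
    return mean, np.maximum(var, 0.0)

def next_measurement(x_obs, y_obs, candidates):
    """Pick the candidate where the posterior uncertainty is largest."""
    _, var = gp_posterior(x_obs, y_obs, candidates)
    return candidates[np.argmax(var)]

# Hypothetical data: three measurements clustered at the left of the domain.
x_obs = np.array([0.0, 0.1, 0.2])
y_obs = np.sin(2 * np.pi * x_obs)
candidates = np.linspace(0.0, 1.0, 101)
x_next = next_measurement(x_obs, y_obs, candidates)
print("suggested next measurement:", x_next)
```

Because the observations sit at the left edge of the domain, the suggested point lands far from them, where conditioning has reduced the uncertainty the least. Maximum variance is only one possible selection rule.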

Berkeley Lab researchers are using active learning to help steer scientific instruments at large experimental facilities around the world. For example, in sensor networks, our scientists collect information from the environment, and in our projects on self-driving 5G, highly dynamic environments require reinforcement learning to update its models as conditions change. At x-ray synchrotron, neutron, and microscopy facilities, researchers often investigate material properties as a function of some underlying parameters. Overall, autonomous experimentation is enabling expensive experimental facilities and their operators to make scientific discoveries that were thought to be impossible just a few years ago.

Projects

Large-scale, Self-driving 5G Network for Science

The nation’s emerging fifth-generation (5G) network is significantly faster than previous network generations and has the potential to improve connectivity across the scientific infrastructure. Potential applications include linking remote experimental facilities and distributed sensing instrumentation with supercomputing resources to facilitate transporting and managing the huge volume of data generated by today’s scientific experiments. This project uses artificial intelligence (AI) combined with network virtualization to support complex end-to-end network connectivity – from edge 5G sensors to supercomputing facilities like the National Energy Research Scientific Computing Center (NERSC). Contact: Mariam Kiran (Kiran on the Web)

gpCAM for Domain-Aware Autonomous Experimentation

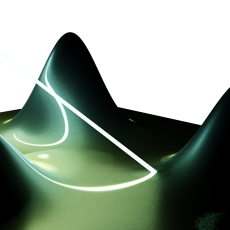

The gpCAM project consists of an API and software designed to make autonomous data acquisition and analysis for experiments and simulations faster, simpler, and more widely available by leveraging active learning. At its core, the tool is based on flexible and powerful Gaussian process regression, which provides the ability to compute surrogate models and their associated uncertainties. The flexibility and agnosticism stem from the modular design of gpCAM, which allows users to implement and import their own Python functions to customize and control almost every aspect of the software. That makes it easy to tune the algorithm to account for various kinds of physics, other domain knowledge, and constraints, and to find interesting features and function characteristics. A specialized function optimizer in gpCAM can take advantage of high performance computing (HPC) architectures for fast analysis and reactive autonomous data acquisition. Contact: Marcus Noack
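The general pattern of letting users supply their own Python functions can be sketched as follows. This is not gpCAM's API: every name below (`suggest_next`, `max_uncertainty`, `upper_confidence_bound`, `make_constrained`) is hypothetical, and the surrogate's posterior mean and standard deviation are assumed to be given as arrays. The sketch only shows how a user-defined acquisition function and a domain constraint might be plugged into the step that selects the next measurement.

```python
import numpy as np

def max_uncertainty(points, mean, std):
    """Default rule: score each candidate by its posterior standard deviation."""
    return std

def upper_confidence_bound(points, mean, std, weight=2.0):
    """User-defined rule: favor points that are promising as well as uncertain."""
    return mean + weight * std

def make_constrained(acquisition, lower, upper):
    """Wrap a rule so candidates outside [lower, upper] are never selected."""
    def wrapped(points, mean, std):
        scores = np.asarray(acquisition(points, mean, std), dtype=float).copy()
        scores[(points < lower) | (points > upper)] = -np.inf
        return scores
    return wrapped

def suggest_next(points, mean, std, acquisition=max_uncertainty):
    """Return the candidate that maximizes the chosen acquisition function."""
    scores = acquisition(points, mean, std)
    return points[int(np.argmax(scores))]

# Hypothetical surrogate output on five candidate points.
points = np.array([0.0, 0.25, 0.5, 0.75, 1.0])
mean = np.array([0.1, 0.9, 0.4, 0.2, 0.0])
std = np.array([0.05, 0.1, 0.3, 0.2, 0.5])

print(suggest_next(points, mean, std))
print(suggest_next(points, mean, std, acquisition=upper_confidence_bound))
print(suggest_next(points, mean, std,
                   acquisition=make_constrained(max_uncertainty, 0.0, 0.8)))
```

Keeping the selection step independent of the scoring rule means domain knowledge, such as a region the instrument cannot reach, can be added without touching the surrogate model.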

News

Berkeley Lab’s CAMERA Leads International Effort on Autonomous Scientific Discoveries

To make full use of modern instruments and facilities, researchers need new ways to decrease the amount of data required for scientific discovery and address data acquisition rates humans can no longer keep pace with.

Autonomous Discovery: What’s Next in Data Collection for Experimental Research

A Q&A with CAMERA's Marcus Noack on this emerging data acquisition approach and a related virtual workshop for users from multiple research areas.
